At Adobe MAX, Adobe added a new video model to its Firefly line of generative AI models, with Text- and Image-to-Video capabilities, and showed new AI functionality across Creative Cloud.

Adobe Firefly Video Model, text to video

At the Adobe MAX 2024 conference, held 14 to 16 October, Adobe announced a new addition to its Firefly family of generative AI models, which until now has comprised the Image, Vector and Design models. The new Firefly Video Model is in limited public beta, during which Adobe will collect feedback from professional users to continue refining and improving the model.

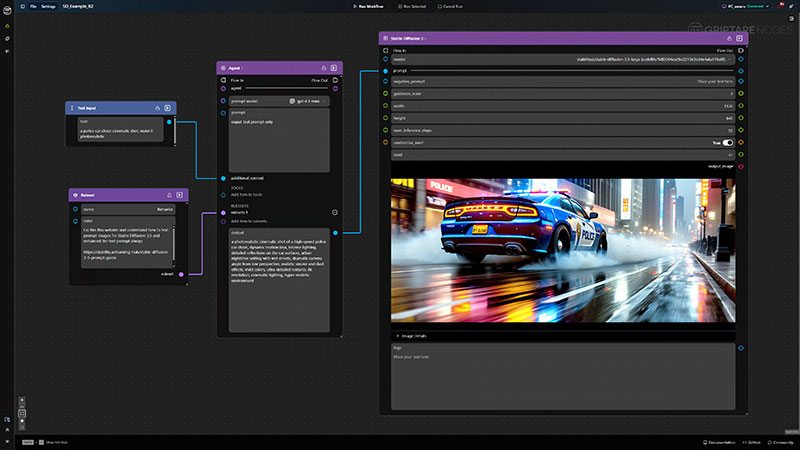

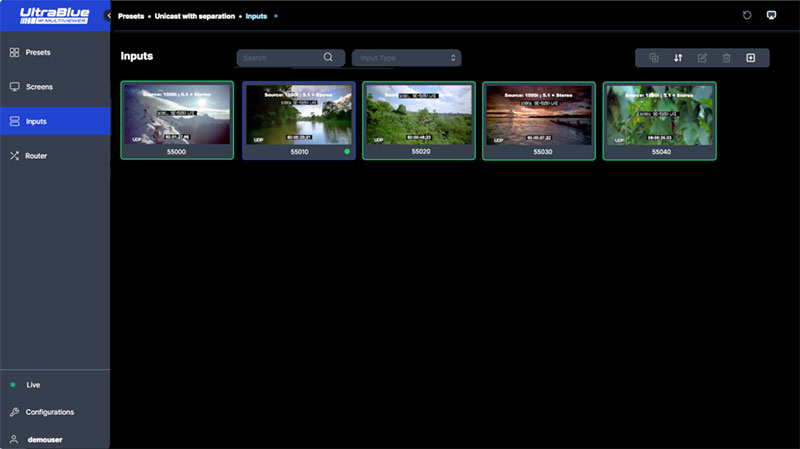

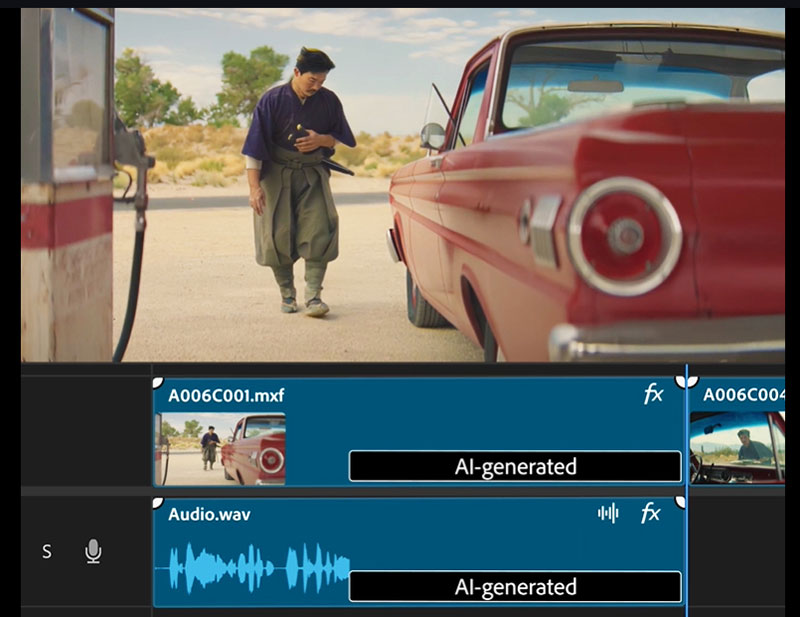

The Firefly Video Model powers new Text to Video and Image to Video capabilities, which are also in limited public beta through the Firefly web app. With Text to Video, editors can generate video from text prompts and use camera controls such as angle, motion and zoom to fine-tune the results. With Image to Video, artists can turn still shots or illustrations into live-action clips. For example, they can use reference images to generate B-roll that fills gaps in a video timeline.

Within a year of Firefly’s launch, the models’ functionality has been integrated into Photoshop, Express, Illustrator, Substance 3D and other Adobe software, and it supports a range of workflows across Creative Cloud applications. The models respond to text prompts in over 100 languages and are also getting faster.

Image to video

Adobe also released a new version of the Firefly Image 3 Model. It can generate a series of images for project development in a few seconds, several times faster than previous models. Like Text to Video and Image to Video, it is available now in the Firefly web app.

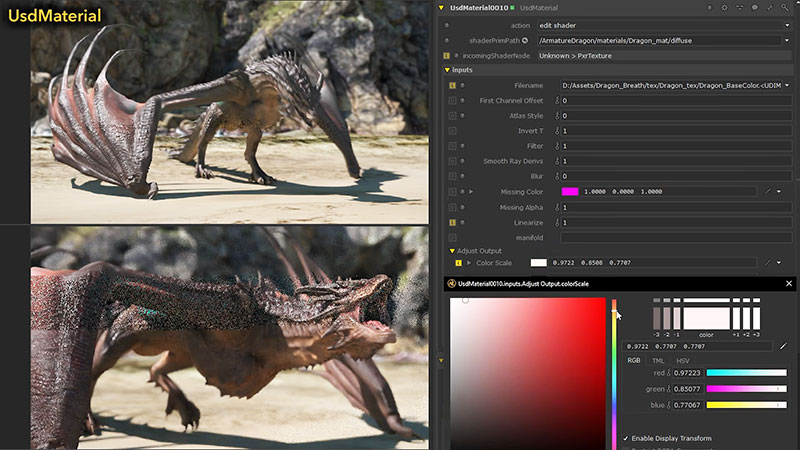

Premiere Pro

Building on the Firefly Video Model, Premiere Pro now has a set of AI-powered video editing workflows. These join the enhancements to the app’s performance and user experience that Adobe announced in September.

New Firefly-powered features include Generative Extend (beta), which tackles the difficult editing work needed when a cut must last a precise length of time. Generative Extend is designed to lengthen shots slightly by generating entirely new frames at the beginning or end of a clip. Editors can create new media from an existing clip to fine-tune edits, hold on a shot for an extra beat, or add a few frames of B-roll to smooth out a transition or cover a gap. Extra frames can sometimes also correct eyelines or actions that shift unexpectedly mid-shot.

Generative Extend in Premiere Pro

Generative Extend (beta) can also extend audio clips, generating ambient noise or ‘room tone’ to smooth out audio edits. Clicking and dragging the end of a dialogue clip extends the ambient sound underneath it. The same technique can lengthen sound effects that cut off too early.

Photoshop and Illustrator

New developments in Photoshop include Distraction Removal, a set of smart features for the Remove Tool that helps remove unwanted objects such as people, wires and cables. A new Adobe Substance 3D Viewer app (beta) now integrates with the Photoshop beta app. Graphic designers can use it to view and edit 3D Smart Objects directly within their 2D designs in Photoshop.

For colour management and image quality, designers can use OCIO Configuration to control, convert and preserve colour workflows at higher quality across programs. The 32-bit Workflow for HDR increases detail and colour precision.

Removing distractions

For Illustrator users, the Project Neo web app (beta), first shown at MAX 2023, creates and edits 3D designs with colour, shape, lighting and perspective. Designers can then bring these designs into Illustrator for use in their design workflows. Objects on Path is a new tool for quickly attaching objects to most types of path and for precisely arranging and moving them along it. Enhanced Image Trace converts graphics to vectors more accurately, ready for further editing and customisation.

More AI Applications

Adobe also previewed Project Concept, a set of tools for collaborative creative concept development that focuses on remixing images in real time. Here, remixing means using Adobe’s Firefly generative AI models to help users explore potential artistic directions, combine images, transform regions of an asset, and remix styles, backgrounds and other assets.

Users can start with their own assets or draw on external sources. Project Concept then uses Adobe’s AI models and collaborative tools to support both ‘divergent thinking’, which extends the possibilities of a creative project, and ‘convergent thinking’, which brings ideas together. Applying Project Concept’s controls then helps steer users towards a final direction.

Text to Video

Adobe says it recognises that prompt-based generative AI requires users to have a clear idea of the result they want, often before they actually know what that should be. This can hold users back at the exploration stage of a project. Project Concept is instead optimised for remixing images in real time. Users can capture a potentially useful scene, object or idea, apply it to other images, and use controls such as style and structure reference to reach their goal.

Finally, Adobe showed new ways to scale production workflows with Firefly Services, its collection of creative and generative APIs for enterprises. These include Dubbing and Lip Sync, now in beta. The feature uses generative AI to translate spoken dialogue in video content into different languages while preserving the sound of the original voice and matching the lip movements.

Bulk Create, Powered by Firefly Services, is also now in beta. It helps users edit large volumes of images efficiently by automating tasks such as resizing and background removal.

Image to video, in Premiere Pro

About Being Commercially Safe

According to Adobe, the Firefly Video Model is the first publicly available video model designed to be commercially safe. By commercially safe, Adobe means its policy of training Firefly generative AI models only on licensed content, such as Adobe Stock, and on public domain content. Adobe’s AI features also undergo an AI Ethics Impact Assessment, which checks them against the company’s AI Ethics principles of accountability, responsibility and transparency. The assessment is mainly concerned with respecting intellectual property rights and with reviewing AI features from diverse perspectives to avoid bias.

Content Credentials show how digital content was created and edited. They are applied to the outputs of certain Firefly-powered features, including the new ones enabled by the Firefly Video Model, to indicate that generative AI was used in their creation.

The Firefly Video Model is in limited public beta on firefly.adobe.com. During the beta, generations are free. More information about Firefly video generation offers and pricing will be available when the Firefly Video Model moves out of beta. www.adobe.com