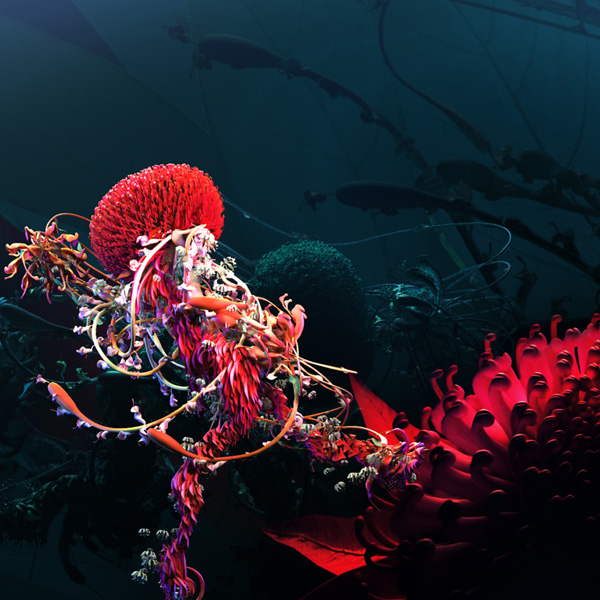

BEMO, an animation and VFX studio in Los Angeles, created the animation for the annual ‘Lighting of the Sails’, a projection mapping production displayed on the Sydney Opera House at the centre of the 2019 VIVID Festival in Sydney, which ran from 24 May to 15 June 2019. Titled ‘Austral Flora Ballet’, the project uses contemporary dance moves based on motion capture to animate Australia’s distinctive plant life in a vibrant, original style.

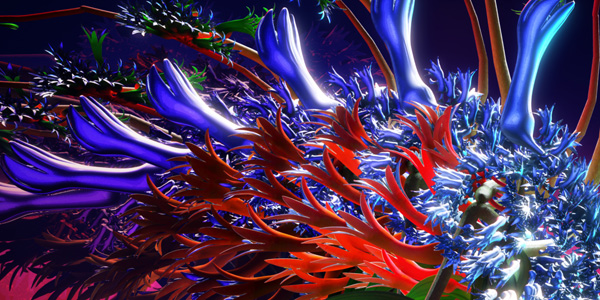

Five plant species from New South Wales perform: the Waratah, Kangaroo Paws, Hakeas, Eucalyptus flowers and Red Beard Orchids. These species served as inspiration for the project’s character design, colour and movement. Aboriginal Australians also identify with these plants, which play a role in their stories, dances and understanding of their land.

Flowers in Motion

The team at BEMO worked with director Andrew Thomas Huang and experience production company Collider Studios Lunar Division. Led by design and technical director Brandon Parvini and executive producer Brandon Hirzel, the artists spent three months building and animating the flower characters. The result was 12 minutes of animation that became large-scale projection mapping content for the Opera House during the annual ‘Lighting of the Sails’ at VIVID, projected on a continuous loop every night of the festival.

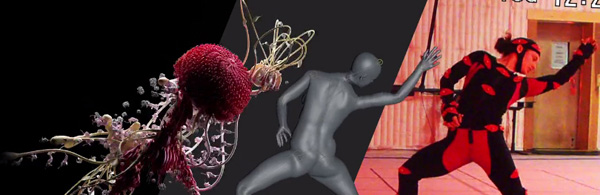

The animation team was asked to study human bodies in motion and integrate them with a floral theme. Part of the brief was expressing connections between architecture, nature and human motion. With those ideas in mind, BEMO established the foundation of the character design. “It was important to be able to recognise the dancer within each of our characters to give the audience an identifiable frame of reference. Otherwise, they would look too abstract and viewers wouldn't understand the intention of the movements,” Brandon said.

Using motion capture as a base seemed like the best way to give the flowers a dancer’s identity. At the Rouge Motion Capture stage in Los Angeles, BEMO supervised a motion capture session, staged in front of projected images of the Sydney Opera House, to capture dancer Genna Moroni performing choreography by Toogie Barcelo. Once the FBX files were obtained from the mocap session, BEMO had an animated, generic female form that matched the rough proportions of the dancer, and from that point developed the set of individual characters.

Staying Nimble

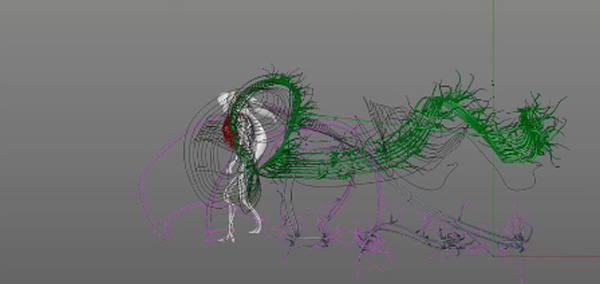

“A modular approach to the animation and character design was adopted for both practical and artistic reasons, mainly to do with speed, continuity and increasing our options,” Brandon said. “The motion capture with Genna was done very early in the schedule. Right after that, we needed to devote time to finalising one character for the pitch, while leaving the tools open for revisions. Also, at that stage we had lots of ideas but weren’t ready to make final decisions.

“Modularisation and procedural animation seemed like the best way to leave the options open longer while still keeping a lot of control. We worked in MAXON Cinema 4D, which lets you experiment and design incrementally, and built characters that were extremely durable, stable and relatively light. Although a 12-minute piece of content requires a solid pipeline and a lot of render power, we stayed nimble in the process, trying alternative looks and iterating quickly to strike the right balance for each character in the piece.”

With these factors in mind, they modelled and rigged small, detached elements of the flowers and left every element accessible: petals, buds, leaves, stamens and so on. “Likewise, for the animation we selected tiny segments from the motion capture (long or short gestures of an arm or leg, a twirl, light and heavy moves) and then used them later as components for the performances, according to the personality of the character we were animating,” he said.
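The idea of cutting short gestures out of one long mocap take and reassembling them per character can be illustrated in plain Python. This is a hypothetical sketch, not BEMO’s Cinema 4D setup: `Clip`, `slice_clip` and `assemble` are made-up names, a take is reduced to a single per-frame channel (for example one joint angle), and clips are joined with a short linear cross-fade, which is an assumption chosen for simplicity.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    """A named segment of a motion-capture take (hypothetical structure)."""
    name: str
    frames: list[float]  # one value per frame, e.g. a single joint angle


def slice_clip(take: list[float], name: str, start: int, end: int) -> Clip:
    """Cut frames [start, end) out of a longer take as a reusable component."""
    if not 0 <= start < end <= len(take):
        raise ValueError(f"bad range {start}:{end} for take of {len(take)} frames")
    return Clip(name, take[start:end])


def assemble(clips: list[Clip], blend: int = 2) -> list[float]:
    """Chain clips into one performance, cross-fading `blend` frames at each join."""
    if not clips:
        return []
    out = list(clips[0].frames)
    for clip in clips[1:]:
        n = min(blend, len(out), len(clip.frames))
        # Overlap the tail of the performance with the head of the next clip
        for i in range(n):
            w = (i + 1) / (n + 1)  # weight ramps towards the incoming clip
            out[-n + i] = (1 - w) * out[-n + i] + w * clip.frames[i]
        out.extend(clip.frames[n:])
    return out


# Hypothetical usage: a 20-frame stand-in take, two gestures, one reused
take = [float(f) for f in range(20)]
twirl = slice_clip(take, "twirl", 0, 5)
arm_sweep = slice_clip(take, "arm_sweep", 10, 16)
performance = assemble([twirl, arm_sweep, twirl], blend=2)
```

Because each gesture is stored once and referenced by name, a different character can reuse the same library in a different order or with a different blend length, which is the kind of flexibility the modular approach was meant to preserve.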

Alembic Workflow

The elements and moves were made using hair dynamics, constraints, rigging tools and the MoGraph toolset in Cinema 4D. “Our rigs were actually very complex, which left them open to instability during rendering. Although all the motion was based on motion capture, the final performances are only about 10 to 15 percent mocap, used for the core motions; the rest relies on keyframed secondary animation reacting to that. So another technique that made the modular approach work was the new Alembic workflow in Release 20 of Cinema 4D.”

This new workflow meant they could bake pieces of finalised animation into their rigging system and use them in the performances of specific flowers, which made the characters more stable. “The Alembic files turned out to be especially important to our workflow because they can handle splines, hair, particles, colour, vertex maps and so on,” Brandon said.

“In Cinema 4D you are mainly using meshes to build characters. Then, once you have adjusted your rig to the character, you drag the mesh onto the Character object to weight and bind the mesh to the rig. We kept adding the baked simulations to the tendrils and leaves, and at the same time made the whole performance continuous, calling for a very stable rig to make sure the render could complete with no unexpected results.”
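Baking, in general terms, means running a rig or simulation once, in frame order, and storing the result for every frame, so that rendering only reads stored values. The sketch below shows why that helps stability on a render farm: a stateful simulation normally has to replay every earlier frame, whereas a baked cache lets any node fetch any frame directly. A plain Python list stands in for an Alembic cache here; it does not use the Alembic library or Cinema 4D, and `step` and `bake` are hypothetical names.

```python
import random


def step(state: tuple[float, float], rng: random.Random) -> tuple[float, float]:
    """Hypothetical stateful simulation: a tendril tip drifting with damped noise."""
    x, v = state
    v = 0.9 * v + rng.uniform(-0.05, 0.05)
    return (x + v, v)


def bake(num_frames: int, seed: int = 0) -> list[float]:
    """Run the simulation once, in frame order, and store the tip position per frame."""
    rng = random.Random(seed)  # fixed seed so a re-bake reproduces the same cache
    state = (0.0, 0.0)
    cache = []
    for _ in range(num_frames):
        state = step(state, rng)
        cache.append(state[0])
    return cache


cache = bake(100)

# A render node can now fetch any frame, in any order, without replaying
# the frames before it: frame 57 is a lookup, not 57 simulation steps.
farm_order = [57, 3, 99, 0]
rendered = {f: cache[f] for f in farm_order}
```

The trade-off is the usual one for caches: the baked data is fixed, so changing the simulation means re-baking, but every render of a given frame is guaranteed to see the same geometry.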

Animated Particles

“Some animation scene elements were attached to the physical skin of the character, and others to the bone system,” said Brandon. “I usually just use particles as particles but here, by putting them inside the dancer’s skin, we could create an inner structure that worked as motivation for what her skin was doing. That gave us an editable core, and a lot more options for animation.

"For example, we would use X-Particles to help create a root serving as a leg, or flowers serving as torsos with different arms coming off them, then grab the underlying splines and mesh and bake them. Alternatively, using the deformers in Cinema 4D, we could project our generated forms onto the rigged mesh or attach them via a tracer system referencing the original bone structure.”

In the end, BEMO had created five dance sequences, each about four minutes long. They generated close to 30,000 frames of 4K footage, rendered on several systems running for weeks, using Cinema 4D with both the Octane and Redshift renderers. “We used each renderer at different times. Octane was useful during the style frame stage and also suited the animators, whose experience was mainly with Octane. Redshift, on the other hand, was used to render the transition scenes when the stamens and tendrils covered the opera house. For those scenes, the geometry was too heavy for Octane to handle properly, resulting in slower, unreliable renders. Redshift was used here to increase speed and stability,” Brandon said.

“Optimising render times generally, whenever possible, was an important part of our approach. For instance, huge numbers of tendrils from the Red Orchid character completely cover the sails at one point, and then transition to a fresh scene. These image files were changed from .png to .tif files to speed up the render.”
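As a rough cross-check of the render volume, five sequences of about four minutes each at an assumed 25 frames per second (the frame rate is not given in the article) come to:

$$
5 \times 4\,\text{min} \times 60\,\frac{\text{s}}{\text{min}} \times 25\,\frac{\text{frames}}{\text{s}} = 30\,000\ \text{frames}
$$

This agrees with the figure of close to 30,000 frames; at 24 frames per second the same running time would be 28,800 frames.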

One Server, Six Projectors

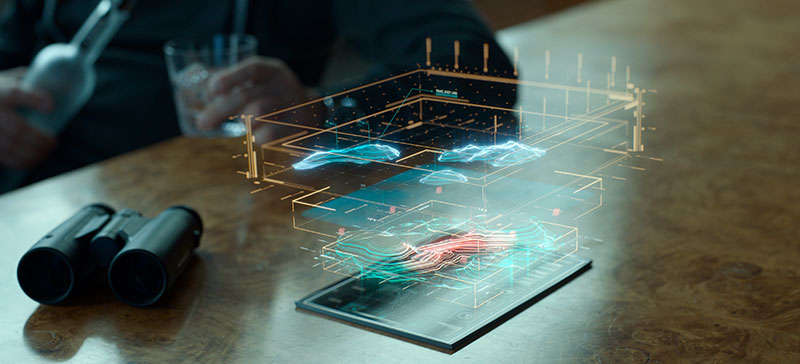

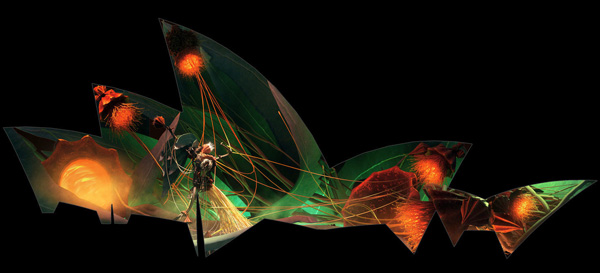

During the ‘Lighting of the Sails’ projection each night, entertainment technology specialists TDC (Technical Direction Company) deployed a disguise media server and multiple Barco laser projectors to cover the Sydney Opera House sails completely and accurately. Since BEMO initially had no camera moves to work with, the team constantly varied the camera to suit the animation and composition. The camera position proved especially important given where the audience stood in relation to the sails, and given the sails’ irregular shape.

Working from a 3D model of the Sydney Opera House, BEMO used the Foreground object in Cinema 4D to mask out and work with flat graphic elements that represented the sails. Brandon said, "Foreground creates a layer over the whole frame, to which you can apply an image and use it as a texture map. It flattens out the surface and made it possible to calibrate a camera lens that we could use to match the animation to the shape of the sails, as seen from the different perspectives of the projectors. We used it for previs to give people, including our client, a simplified view for checking the lens calibration and shot composition."
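Matching animation to the sails from each projector’s perspective comes down to knowing where a point on the building lands in that projector’s image. A minimal pinhole-model sketch of that mapping follows. The focal length, resolution and projector position are made-up values, the projector is assumed to look straight down its own +Z axis with no rotation or lens distortion (real calibration solves for both), and nothing here reflects the actual Cinema 4D Foreground setup or TDC’s disguise workflow.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Projector:
    """Hypothetical pinhole projector looking down its own +Z axis (no rotation)."""
    position: tuple[float, float, float]  # metres, in building space
    focal_px: float                       # focal length expressed in pixels
    width: int = 3840                     # assumed 4K UHD raster
    height: int = 2160

    def to_pixel(self, point: tuple[float, float, float]) -> Optional[tuple[float, float]]:
        """Project a 3D point on a sail to (u, v) pixel coordinates, or None."""
        x = point[0] - self.position[0]
        y = point[1] - self.position[1]
        z = point[2] - self.position[2]
        if z <= 0:
            return None  # behind the lens
        u = self.width / 2 + self.focal_px * x / z
        v = self.height / 2 - self.focal_px * y / z  # image rows grow downwards
        if 0 <= u < self.width and 0 <= v < self.height:
            return (u, v)
        return None  # outside this projector's raster


# Hypothetical usage: one projector at the origin, points 10 m in front of it
proj = Projector(position=(0.0, 0.0, 0.0), focal_px=2000.0)
centre = proj.to_pixel((0.0, 0.0, 10.0))
corner = proj.to_pixel((1.0, 1.0, 10.0))
behind = proj.to_pixel((0.0, 0.0, -5.0))
```

Running every vertex of a sail model through one such mapping per projector gives the per-projector view that content has to line up with; a point that returns `None` for one projector has to be covered by another.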

BEMO created the UV and alpha maps for the sails. Steve Cain, Head Engineer and Media Server Specialist at TDC, said, “Brandon and his team at BEMO really knew their stuff and were great for us to work with. We did a lot to help BEMO in the lead-up to this project, supplying Brandon with the 3D model, content templates and positional data. I also coached them on the process of building the projection workflows through to content grading.

"I used our disguise Pre-Viz studio to build the show file, and also to export pictures and fly-throughs to help BEMO understand the layout of the building in the city; they were actually creating the maps while they were still in the US. This also helped us check test content samples to make sure the project would work when we got to site.

"On site at Sydney Opera House, apart from the obvious lining-up task, I also did live colour grading and keyframing of their clips on disguise, working very closely with them to make it look the best it ever could."

www.maxon.net

This project is an entry in the Live Event category of the AEAF Awards. Visit aeaf.tv for more information and to attend the AEAF Awards Night and Speaker Program.