Riot Control bot

DNEG worked on all eight episodes of ‘Westworld’ season 3, building and animating a range of photoreal robots and futuristic vehicles, and creating set extensions and other invisible effects work. DNEG teams at facilities in both Los Angeles and Vancouver created 3D simulations for destruction and explosions, FX glass and water, and animated the robots by hand.

The ‘Westworld’ episodes were shot both in studios and at several locations, mainly in California but also in other states in the American southwest. The production’s VFX Supervisor Jay Worth worked on set, supervising and recording data for the team at DNEG, and worked with executive producer Jonathan Nolan, also a writer and director on ‘Westworld’. At Digital Media World, we talked to Jeremy Fernsler, VFX Supervisor at DNEG.

Robot’s World

The ‘Westworld’ production shared fairly complete robot concept art with the team. But, as usual when moving 2D art into a 3D world, a few design details had to be altered to achieve the required motion, which gave DNEG scope for creativity on the models. Two of DNEG’s three robots, George and Harriet, were quite different from each other, built by different companies with diverging motives.

Westworld character Caleb with co-worker George.

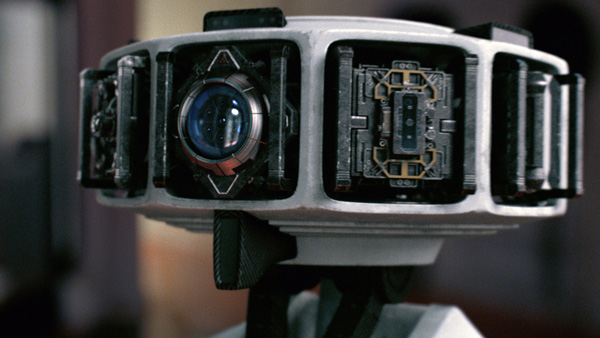

The George robot is a Delos robotics product used purely for building construction. The Harriet robot carries out varied services, from education and health to manufacture and law enforcement, and is made by Incite, a company that gathers huge amounts of data about humans for use in AI engines. You can see how different the designs are in the images.

During the shoot, stand-in actors were on set performing as the robots to help with actor interactions, and DNEG then replaced them with CG robots in post. Jeremy said, “These robot actors also wore Moven suits to capture their motion for the robot animation. However, in the end, the data needed quite a bit of adjustment work and linkages, and the producers wanted to significantly change some of the performances from the way they had been recorded.

“Keyframe animation gave us a lot more flexibility as opposed to animating on top of the dense motion capture data. Our Vancouver animation team could reproduce a more sparse version of the initial action quickly and then iterate and shape the motion to what production wanted.”

Self-Aware

The robots’ animation was interesting and complex. Their motion needed to be well grounded in the environment and situation, while borrowing from the Boston Dynamics style. A Boston Dynamics robot is highly functional but not too precise, so that it seems to be continuously aware of the need to re-balance itself, which gives the impression of self-consciousness. As a result, DNEG’s animation incorporates a range of micro-movements and deliberate liveliness into the robots’ actions.

The George robot, which works alongside the character Caleb, for example, has a very repetitive, boring function on the construction site. George is a competent worker but is also intent on aligning its motion to Caleb’s, trying to make its moves match human moves. The Harriet robot’s self-awareness, on the other hand, has a slightly fussy, feminine quality.

As Harriet is a multifunctional robot, DNEG built her head with extra pieces of hardware.

At a turn in the story, the Harriet robot attempts an escape. The guards begin shooting at her repeatedly, which progressively breaks down her exterior over about 30 shots. Jeremy said, “Rather than creating multiple assets for each stage of destruction, we chose a procedural approach for the destruction as the bullet holes mount up. One of our Houdini artists crafted a simulation system that allowed us to control the timing and position of each bullet hit. This way we could match to the muzzle flash timing, and adjust the clustering of the hits.

“Once we did this, we maintained a single asset of Harriet going through the destruction which we could bind to the animation of each shot. This system would then simulate the chipping away of the rubber exterior, exposure of smaller electronic pieces below, and their subsequent destruction if need be, gradually exposing the interior robotic structure. With this in place we were able to handle changes to the nature, amount and placement of Harriet’s damage without needing to go back to the modelling stage.”
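To illustrate the idea of one asset driven by a schedule of hits, here is a minimal Python sketch. It is not DNEG's Houdini setup, which the article does not describe in detail. The names `BulletHit`, `damage_depth` and `exposed_layer`, the 0 to 1 surface coordinates and the fixed three-layer list are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical layer order, outermost first (based on the description above).
LAYERS = ["rubber exterior", "electronics", "interior structure"]


@dataclass(frozen=True)
class BulletHit:
    frame: int     # frame the hit lands on, e.g. matched to a muzzle flash
    u: float       # assumed surface coordinates on the robot, 0..1
    v: float
    radius: float  # size of the damaged patch, in the same units


def damage_depth(hits, frame, u, v):
    """Count the hits that have landed by `frame` and cover point (u, v)."""
    depth = 0
    for hit in hits:
        landed = hit.frame <= frame
        covers = (hit.u - u) ** 2 + (hit.v - v) ** 2 <= hit.radius ** 2
        if landed and covers:
            depth += 1
    return depth


def exposed_layer(hits, frame, u, v):
    """Return the layer visible at (u, v): each overlapping hit strips one layer."""
    depth = damage_depth(hits, frame, u, v)
    return LAYERS[min(depth, len(LAYERS) - 1)]


# One schedule for the whole sequence; editing it changes the timing,
# placement and clustering of damage without touching the model.
schedule = [
    BulletHit(frame=10, u=0.50, v=0.50, radius=0.10),
    BulletHit(frame=14, u=0.52, v=0.50, radius=0.10),  # clustered with the first
    BulletHit(frame=20, u=0.90, v=0.20, radius=0.05),
]

for f in (9, 12, 14):
    print(f, exposed_layer(schedule, f, 0.5, 0.5))
```

Because the damage is computed from the schedule at any given frame, moving a hit earlier or clustering hits more tightly only means editing the list, which mirrors the flexibility Jeremy describes.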

On set, the robots were played by stand-in actors to help with actor interaction and to record motion reference.

Lean Teams

Each of DNEG’s teams was small, tight and efficient. In Los Angeles, there were two lighters, eight compositors and two FX artists, plus a small animation team in Vancouver. The teams also worked together efficiently. For example, the relationship between the compositing and lighting teams was important for accurate robot integration. A lean regime suits a VFX-heavy episodic TV project like ‘Westworld’, because the artists need to iterate quickly to finalise assets.

Embedding the robots into shots was critical to making them feel like real pieces of machinery, interacting directly with the environments and the live action characters. Dirt textures were applied to the robot exteriors with special attention paid to the lighting, which was done by artists at DNEG’s Los Angeles office and based on HDRI data recorded on location.

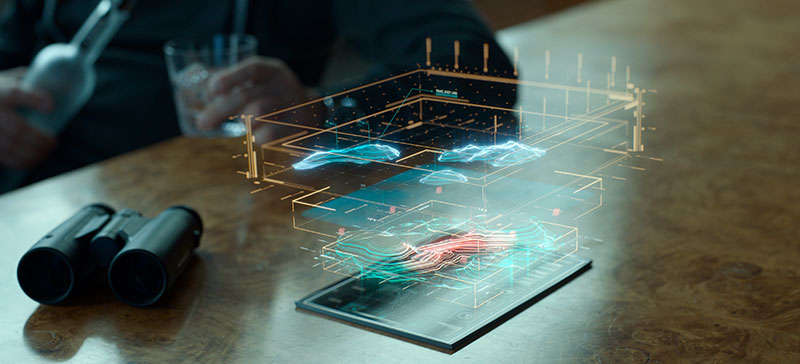

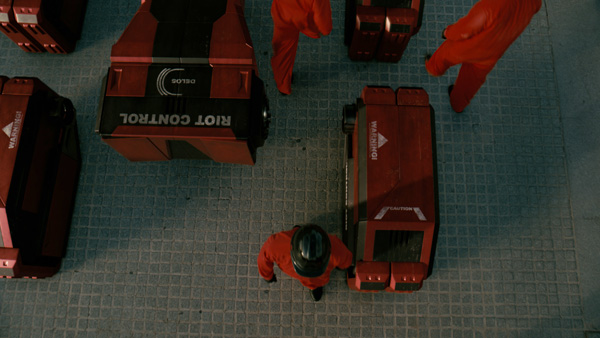

Riot Control

DNEG’s most impressive robot for the show was the Mech, a 14-foot-tall Riot Control bot. Not only extremely large and destructive, it is capable of building itself from a collection of parts, whenever and wherever it is needed. The building-up process made an interesting mechanical self-assembly task for DNEG’s animation team in Vancouver. Its body is made from five discrete boxes that are capable of locating each other and assembling themselves into the complete working bot.

“Jay Worth and Jonathan Nolan were frequently decisive and specific about how they wanted effects and characters to look and behave, and in this case, they were certain that they did not want the assembly to feel like a Transformer, nor were the containers meant to simply slide together,” said Jeremy.

The Riot Control bot builds itself from five discrete boxes (below) capable of locating each other and assembling into the complete working bot, seen above and in the image at the top of this article.

“The animation team had some room for creative input for the sequence. They would block out the animation for each container volume as previs, have this approved, and go on to the next. This allowed them to gain a clearer understanding of the overall motion of the containers and how they could interact with each other. Then they could add and build connector assets to the boxes and back into the assembly action.

“By the time we had approval for every container, filling and flowing to the next, the transition from previs to production animation was straightforward. Not surprisingly, the rigging was highly customised for this sequence. DNEG’s rigging pipeline is well-established to streamline the work and avoid starting every rig from scratch, but the rigging for the Mech assembly was a one-off, singular effort.”

Mech in Motion

One of the Mech’s big moments involved crashing into the corridor of a building through a concrete wall. The sequence brought several challenges for all teams. On set, a large, jagged hole had been built into the inner wall, but nothing had been set up for the actors inside to react to at the moment the bot was meant to burst through it.

Jeremy said, “The corridor only allowed limited space in which to fit and animate the Mech, create the destruction simulations and still make a dramatic impact. After replicating the geometry in 3D, the animation was blocked out and completed first, and then this work was handed off to the FX artists to simulate the destruction around the action. The Mech’s movement had to look dangerously nimble and quick, always landing squarely on its feet, despite its size and the need to smash through concrete. Instead of a slow, heavy performance, the artists reflected that impact and weight by animating the bot’s panel work with secondary motion.”

Getting Around

Throughout the series, the characters drive Rideshare vehicles. The actors drove a real vehicle on set as much as possible, which the production would replace in chase sequences with a stunt car fitted with a roll cage and driven by a stunt driver. DNEG built a digital replacement for the stunt car to match the original prop car in those shots. The stunt vehicle had the same kind of wheels and tyres as the original to avoid problems when establishing contact with the street for the digital car.

“We hadn’t expected to need to replace the stunt drivers in the Rideshare cars, thinking that silhouettes would be enough, but this approach was too limiting and meant obscuring the interiors more than we wanted,” Jeremy said. “So we created a minimal kind of digi-double, more similar to a crowd agent, with clothing over a generic mesh, than a full character.”

One of the characters drives a futuristic version of a Rezvani Tank, an extreme utility vehicle. Again, the production built a practical version of this car that could be used in most shots – until the story changes course and, in one sequence, the Rezvani is destroyed in a dramatic explosion. These shots were composed of elements from two shoots and two vehicles.

Double Explosion

The production wasn’t able to create any explosions at the location, which needed to serve as a street in San Francisco. So a crew travelled to a parking lot and taped out a stand-in pseudo-set, marking where the trees, street and other geography references existed at the location. Then they drove a Jeep through it, as the car would drive, and exploded the Jeep, achieving a stunt explosion timed and designed to suit the story, which could then be composited into the live action plates.

In theory the method was sound, but in practice it was very challenging to put together in post. Not only did it involve two practical vehicles – the Jeep and the Rezvani – but DNEG also needed to insert their digital Rezvani into the fire, full frame, in broad daylight.

Jeremy talked through the post production process. “The plates from the two sets were not perfectly aligned, and no motion control had been used to help with timing and camera angles between the two sequences,” he said. “We also needed to keep as much of the practical fire from the explosion as possible and create CG fire for the augmentation. We decided to build rough geometry of the set and use projections from either shoot, as required, and created very tight match moves for both sequences, painting in any areas that weren’t covered.

“Once we had both cameras and the practical Jeep match moved, and our environment extensions worked out, our FX artist created a fire simulation that matched the plate explosion. We could place this inside and around our digital Rezvani, which really helped sink it into the shot.

“Finally, our compositing team put in a lot of time ensuring we kept as much of the practical work as possible – including almost all of the on-set fire and smoke, as well as the wheels and tyres of the exploding Jeep. There were lots of moving parts to these shots and it took a while to get things to a place where we all could agree it was going to work out. Looking back now, I think the shots turned out great and the team should be proud of all the work that went into them.”

www.dneg.com