Kevin Baillie, production VFX Supervisor, talks here about the effects and animation created for ‘Welcome to Marwen’, in which artists at Atomic Fiction (now Method Studios) realised the director’s vision of transporting the audience into the main character’s model town, populated by a lively cast of plastic dolls. The project involved developing a proprietary technique for facial animation and exercising a huge amount of control over the CG cameras, resulting in highly creative looks and expressive points of view inside 3D imagery. Kevin and his team earned a VES Awards nomination for their work.

‘Welcome to Marwen’ is based on the true story of Mark Hogancamp, who was attacked and severely beaten up by right-wing thugs who took exception to his fondness for wearing women’s shoes. Following this incident, he suffers from permanent physical injuries as well as post-traumatic stress disorder that has destroyed much of his memory of his earlier life.

Ticket to Marwen

Aiming to heal himself through artistic imagination, he creates a complete, detailed model town at 1:6 scale, which he calls Marwen and populates with doll characters that correspond to people from his life, including himself as Cap’n Hogie. He then photographs the dolls engaged in dramatic scenarios.

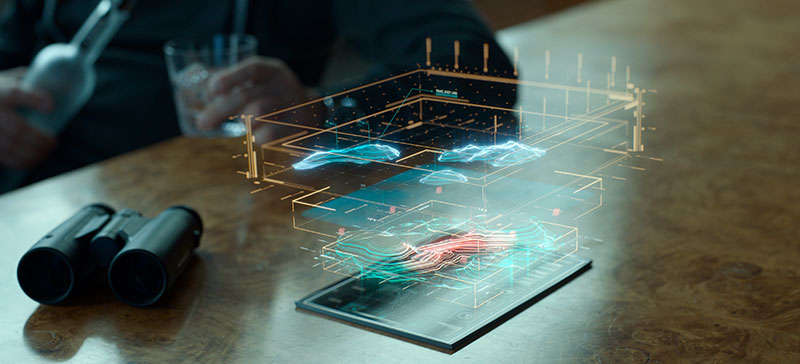

The director Robert Zemeckis wanted the audience to travel to Marwen, inside Mark’s imagination, and experience it from that point of view. In the final film, it occupies 46 minutes of screen time. Achieving this world for the audience required 655 VFX shots, all captured through physical or virtual ARRI Alexa 65 cameras. Atomic Fiction handled 509 of those shots, creating the digital Marwen world and the CG doll versions of 17 characters.

Framestore handled shots for the Marwen opening sequence in which Hogie crash-lands a P-40 aircraft and suffers a Nazi attack. Method Studios, which has since acquired Atomic Fiction, completed some of the compositing and environmental work for the film.

Expressive Dolls

Robert Zemeckis brought the script to Kevin for the first time in 2013, mainly concerned that the actors playing the roles of the dolls would lack the means to express themselves effectively. He had worked with Kevin before on movies including ‘The Walk’, ‘Flight’ and ‘Allied’.

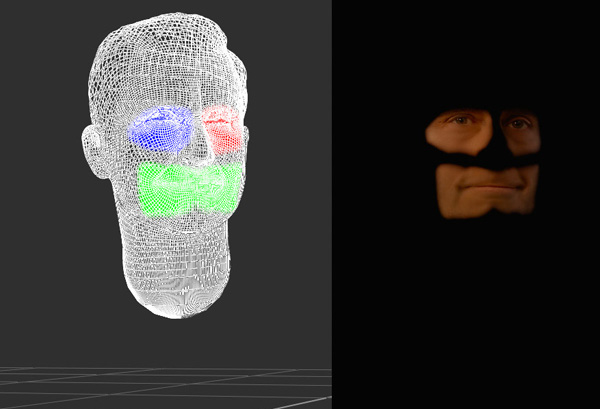

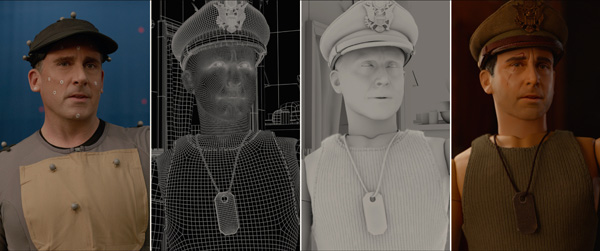

“Traditional motion capture wasn’t an option for these faces, so our original idea was to shoot the actors in costume and augment them with digital doll joints,” said Kevin. “That failed horribly, but it inspired us to try the opposite – instead of sticking CG parts on actors, we’d try augmenting the CG dolls with actor parts. We fused lit live-action footage of actor Steve Carell’s facial performance with the underlying doll structure - and it worked!”

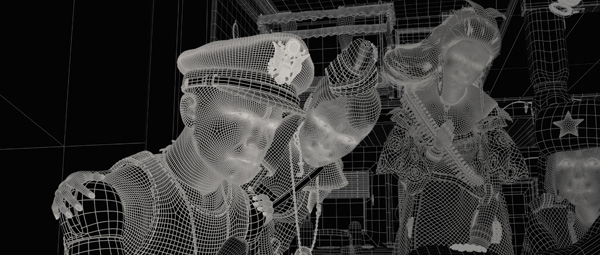

This approach, of course, meant building the physical dolls first. Each of the story’s 17 doll characters started as a 3D scan of its associated actor, and was adapted into doll form and digitally sculpted by Atomic Fiction. Miniature Effects Supervisor Dave Asling and his team of dollmaking artists at Creation Consultants then digitally re-engineered the sculpts, 3D printed, moulded, pressure-cast, hand-painted and hand-finished them. Atomic Fiction then reversed the process by creating fully rigged and detailed CG versions matching their physical counterparts exactly, down to every texture and element.

Facial Fusion

Kevin and Atomic Fiction then needed to develop a way of blending the actors’ performances with the dolls. Animating the characters and finalising shots required a proprietary workflow combining motion capture, real and CG photography, CG animation and compositing. Motion capture was the basis for the body performances. To drive the dolls’ faces and the CG cameras, beauty-lit footage of the actors was shot on the Alexa 65, and their facial performances were also recorded with motion capture.

“Because the dolls’ faces were going to be a fusion of the actors’ actual performances and CG animation, the actors were in full make up during the mocap shoot and scenes were lit for the Alexa 65, just as you would light a practical set. Fortunately, we were able to map out all the lighting ahead of time - we built a real-time set for every mocapped scene and optimised it for the Unreal Engine. Then our DP C. Kim Miles used an iPad with custom controls to pre-design all the lighting before we shot a single frame.

“In a way, the process was like lighting previs. The virtual production process that we developed helped us ensure that the practical lighting on the actors looked great when expanded out to illuminate the entire world. In fact, an exact match of our CG to what was shot on stage ended up being extremely important because, as we discovered in post production, when the direction of the light was even the tiniest bit mismatched, the characters would look very weird and the whole illusion would break.”

Animating from the Soul

Ultimately, Atomic Fiction’s goal was to bring the soul of the actors into the doll performances. They did this by projecting the actual footage of the actors’ faces, shot with the Alexa 65, onto the digital dolls. Every shot has a virtual camera through which the artists render the digital world, which also serves as a ‘projector’ that pastes the live-action actors’ faces onto the doll bodies.

“In order for that camera-projector to precisely place the footage onto the doll faces, we need to know exactly where the camera is in space, relative to the actors’ heads. Placing motion capture markers on the camera does that for us. From there, we hand keyframed the doll faces to match the actors’ performances, then subtly layered the underlying CG renders with specular and reflection components to give that plastic, doll-like appearance.”
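The camera-projector idea can be illustrated with a basic pinhole projection. The following is a minimal sketch, not Atomic Fiction’s proprietary tool: it assumes an idealised lens with no distortion, and the function names, the camera-axes representation, the 50mm focal length and the rounded 54 x 26mm sensor size are all illustrative assumptions. Given the camera’s tracked position and orientation, it finds where a point on the actor’s head lands in the filmed frame, which is the part of the footage a projector would paste back onto the matching point of the doll’s face.

```python
def dot(a, b):
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def world_to_camera(point, cam_pos, cam_right, cam_up, cam_forward):
    """Express a world-space point in camera space.

    cam_right, cam_up and cam_forward are the camera's unit axes in world
    space (hypothetical representation of the tracked camera pose).
    """
    offset = tuple(p - c for p, c in zip(point, cam_pos))
    return (dot(offset, cam_right), dot(offset, cam_up), dot(offset, cam_forward))


def project_to_frame(point_cam, focal_mm, sensor_w_mm, sensor_h_mm):
    """Pinhole projection to normalised frame coordinates.

    Returns (u, v) with (0.5, 0.5) at the frame centre, u increasing to the
    right and v increasing upwards, or None if the point is behind the camera.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    u = 0.5 + (focal_mm * x / z) / sensor_w_mm
    v = 0.5 + (focal_mm * y / z) / sensor_h_mm
    return (u, v)


# Illustrative values only: a camera at head height looking straight ahead,
# and a point on the actor's face 1m away and 10cm to the right (metres).
cam_pos = (0.0, 1.6, 0.0)
cam_axes = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
face_point = (0.1, 1.6, 1.0)

uv = project_to_frame(
    world_to_camera(face_point, cam_pos, *cam_axes),
    focal_mm=50.0, sensor_w_mm=54.0, sensor_h_mm=26.0,
)
print(uv)
```

The sketch makes the dependency in the quote concrete: any error in the camera position or axes shifts the resulting frame coordinates, so the footage would slide off the doll’s features.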

They could use the actors’ body movements in a more traditional mocap workflow but even so, the resulting animation data required significant retargeting. First, since all the dolls are the same size and the actors’ heights vary by as much as 30cm, the animators needed to add subtle squash and stretch to achieve the right proportions. Furthermore they had to strike a balance in the dolls’ movements to make them rigid enough to be believable as dolls, but flexible enough to adopt certain poses and replicate what the actors did on set.
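As a rough illustration of the height problem, the sketch below scales captured joint positions so that actors of different heights land on a doll of one fixed height. It is a deliberately simplified stand-in: a single uniform scale per actor rather than the subtle, per-limb squash and stretch the animators applied. The 30cm doll height, the joint names and the actor heights are hypothetical values chosen for the example.

```python
DOLL_HEIGHT_CM = 30.0  # hypothetical: roughly a 180cm person at 1:6 scale


def retarget_scale(actor_height_cm, doll_height_cm=DOLL_HEIGHT_CM):
    """Uniform scale factor that maps an actor's height onto the doll's."""
    return doll_height_cm / actor_height_cm


def retarget_joints(joints_cm, actor_height_cm, doll_height_cm=DOLL_HEIGHT_CM):
    """Scale captured joint positions (in cm) into doll space."""
    s = retarget_scale(actor_height_cm, doll_height_cm)
    return {name: (x * s, y * s, z * s) for name, (x, y, z) in joints_cm.items()}


# Two hypothetical actors whose heights differ by 30cm.
short_actor = {"head_top": (0.0, 160.0, 0.0), "hip": (0.0, 85.0, 0.0)}
tall_actor = {"head_top": (0.0, 190.0, 0.0), "hip": (0.0, 100.0, 0.0)}

short_doll = retarget_joints(short_actor, actor_height_cm=160.0)
tall_doll = retarget_joints(tall_actor, actor_height_cm=190.0)
print(short_doll["head_top"], tall_doll["head_top"])
```

Both actors end up with the same head height in doll space, but their internal proportions still differ, which is why a uniform scale alone is not enough and further adjustment is needed.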

The miniature Marwen town was precisely handbuilt by the model-making team, who populated it with created and found objects. It was 3D scanned, photographed and turned into an accurate digital Marwen at Atomic Fiction. Kevin said, “The director’s vision was to take the self-healing narrative that Mark created through his photography and faithfully recreate it. The result is that all of it feels alive because, in part, it actually is.”

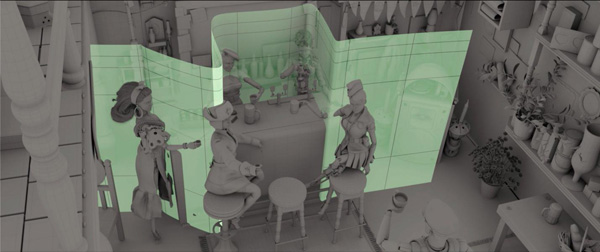

Lighting, depth-of-field and facial composites were all equally important to the integrity of the final result. Therefore, to be able to monitor everything at once, Atomic Fiction re-organised the typical VFX workflow and had all of their departments working together in parallel. Robert Zemeckis could also review the footage, with all components present in context, from the first take.

New School, Old-School Photo Tools

Using digital versions of traditional photographic tools, the artists were able to maintain the tactile look of Mark Hogancamp’s photography. Keeping renders in focus was a major challenge in the dolls’ world because everything was built at one-sixth scale. When filmed with an Alexa 65, which has a very large sensor and thus a very shallow depth of field, the props and characters quickly became soft. “To keep hero actors in focus even when they were offset from one another in relation to the focus plane of the camera, we developed our own tools, which were essentially digital versions of traditional optical tools like tilt-shift lenses and split diopters,” Kevin said.
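The effect of scale on focus can be estimated with the standard thin-lens depth-of-field formulas. The sketch below is a back-of-envelope illustration only: the 50mm lens, f/4 aperture, 0.05mm circle of confusion and the two focus distances are assumed values, not figures from the production. It compares a full-size framing focused at 3m with the equivalent 1:6 framing focused at 0.5m.

```python
import math


def depth_of_field(focal_mm, f_number, focus_mm, coc_mm):
    """Return (near_limit_mm, far_limit_mm) of acceptable focus.

    Uses the standard thin-lens approximations based on the
    hyperfocal distance H = f^2 / (N * c) + f.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    if focus_mm >= hyperfocal:
        return near, math.inf
    far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    return near, far


# Assumed lens settings, identical for both cases.
FOCAL_MM, F_NUMBER, COC_MM = 50.0, 4.0, 0.05

near_full, far_full = depth_of_field(FOCAL_MM, F_NUMBER, 3000.0, COC_MM)
near_doll, far_doll = depth_of_field(FOCAL_MM, F_NUMBER, 500.0, COC_MM)

dof_full = far_full - near_full
dof_doll = far_doll - near_doll
print(f"full scale: {dof_full:.1f} mm in focus")
print(f"1:6 scale:  {dof_doll:.1f} mm in focus")
print(f"ratio:      {dof_full / dof_doll:.1f}x")
```

Under these assumptions the zone of acceptable focus shrinks far faster than the set does: moving the camera six times closer reduces it by considerably more than a factor of six, which is consistent with dolls going soft as soon as they step off the focus plane.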

“We also created a new piece of tech that I really love because it’s new-school born from old-school, called a variable diopter. It allowed us to sculpt and animate a curtain to set focus distance across the frame. For example, when five of the dolls are sitting in a u-shape around the bar, we were able to keep them all in focus and leave the image looking soft around them.”
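One way to picture a focus ‘curtain’ is as a focus distance that varies across the width of the frame instead of being a single value. The sketch below is an assumption about how such a tool could be modelled, not a description of the production software: the curtain is a handful of hypothetical control points interpolated linearly, and blur is estimated with the thin-lens circle-of-confusion formula. The doll positions, depths and lens settings are invented to mimic five dolls in a u-shape.

```python
def focus_at(x, curtain):
    """Focus distance (mm) at horizontal frame position x in [0, 1].

    curtain is a list of (x, focus_mm) control points with strictly
    increasing x; values are linearly interpolated between them.
    """
    if x <= curtain[0][0]:
        return curtain[0][1]
    if x >= curtain[-1][0]:
        return curtain[-1][1]
    for (x0, d0), (x1, d1) in zip(curtain, curtain[1:]):
        if x0 <= x <= x1:
            t = (x - x0) / (x1 - x0)
            return d0 + t * (d1 - d0)
    return curtain[-1][1]


def blur_diameter(depth_mm, focus_mm, focal_mm, f_number):
    """Thin-lens circle of confusion (mm on the sensor) for an object at depth_mm."""
    aperture_mm = focal_mm / f_number
    return (aperture_mm * focal_mm * abs(depth_mm - focus_mm)
            / (depth_mm * (focus_mm - focal_mm)))


# Five dolls in a u-shape: (frame position, distance from camera in mm).
dolls = [(0.1, 400.0), (0.3, 550.0), (0.5, 650.0), (0.7, 550.0), (0.9, 400.0)]
# The curtain is sculpted so that it passes through each doll.
curtain = list(dolls)

FOCAL_MM, F_NUMBER = 50.0, 4.0
flat = [blur_diameter(d, 650.0, FOCAL_MM, F_NUMBER) for _, d in dolls]
shaped = [blur_diameter(d, focus_at(x, curtain), FOCAL_MM, F_NUMBER) for x, d in dolls]

for (x, d), b_flat, b_shaped in zip(dolls, flat, shaped):
    print(f"x={x:.1f} depth={d:.0f}mm  flat focus blur={b_flat:.3f}mm  curtain blur={b_shaped:.3f}mm")
```

With a single focus plane set on the middle doll, the dolls at the ends of the bar pick up visible blur; with the curtain passing through every doll, each one sits exactly on its local focus distance while anything in front of or behind the curtain still falls off softly.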

Atomic Fiction’s team also developed another photographic tool to help them match the looks in the filming locations in Vancouver. Noticing that all of the exterior shots featured pollen, flying insects and various bits of plant life floating through the air, they wanted to replicate that look in their virtual scenes, but lacked the time and budget to use complex 3D simulations for every shot. Kevin said, “Our compositing team eventually devised a system that allowed them to fill the camera’s view with dozens of 2-dimensional ‘cards’ with pre-created animations of floating pollen on them. We used similar techniques for floating dust in the interior shots. I feel the result really adds subtle depth and volume to the doll world.”
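The card approach can be sketched as scattering flat planes at random depths inside the camera’s view volume, each one playing back a pre-made animation clip. The code below is a hypothetical illustration of that idea rather than the compositing team’s actual system: the function name, the field of view, the depth range and the 240-frame clip length are all assumptions.

```python
import math
import random


def scatter_cards(count, h_fov_deg, aspect, near, far, clip_frames=240, seed=0):
    """Place `count` 2D cards at random positions inside a camera frustum.

    Camera space: x right, y up, z forward. Each card also gets a random
    start frame so the pre-made clips do not play in sync.
    """
    rng = random.Random(seed)  # seeded so a shot's layout is repeatable
    tan_half_w = math.tan(math.radians(h_fov_deg) / 2.0)
    tan_half_h = tan_half_w / aspect
    cards = []
    for _ in range(count):
        z = rng.uniform(near, far)
        x = rng.uniform(-1.0, 1.0) * z * tan_half_w
        y = rng.uniform(-1.0, 1.0) * z * tan_half_h
        cards.append({"position": (x, y, z), "start_frame": rng.randrange(clip_frames)})
    return cards


# Three dozen cards between 0.2 and 3.0 units from the camera.
cards = scatter_cards(count=36, h_fov_deg=60.0, aspect=2.11, near=0.2, far=3.0)
print(len(cards), cards[0])
```

Because the cards sit at different depths, they pick up parallax and varying amounts of defocus as the camera moves, which gives a sense of volume without a per-shot 3D simulation.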

Blended Morphs

Sometimes they found they needed to blend two performances from an actor’s live action material together in a plate. While blending two mo-cap performances together is a fairly straightforward part of an animator’s work, blending the straight live action together was trickier, so much so that the director decided it deserved a special term - blorphing.

“Blorphing is our technique of blending/morphing shots (hence blorph) to create a seamless transition. We used it often as an editorial tool. Sometimes Bob (Zemeckis) wanted to remove a line from the middle of a shot, play two separate takes as one, or just tighten up a performance by removing a section from the middle. Blorphing allows us to do it without the audience even noticing.”

In the doll world, the characters’ bodies are all digitally rendered and so blorphing wasn’t an issue - they could animate the transitions invisibly prior to rendering - but, since they were using real footage of the actors’ own faces, they had to blorph footage of faces to execute an in-shot edit. Outside of purely editorial purposes, this technique was also used to invisibly link together multiple, totally different shots during Mark Hogancamp’s drunken flashbacks as he struggles with his painful memories – work that was overseen by Method VFX Supervisor Sean Konrad and his team.

Not Just a Dream

Sean said, “The scenes in which Mark is trying to recall his past trauma, but his memory is hazy, were really interesting to work on and also rather complex. We created a heavily stylised, very dreamlike quality by stitching together elements from three or four different plates, and fading portions of those layers in and out, giving the audience a slightly drunken feeling.”

Method also focused on recreating New York’s Meatpacking District in shots that were filmed in Vancouver’s Railtown. The location was selected due to its industrial feel and overall ambience, although Vancouver lacks the skyscrapers of New York due to building height restrictions. Method took the bluescreen plate footage and integrated digital skyscrapers that they built based on reference photography and scans of New York streets, and added other touches like digital store fronts and additional traffic to complete the illusion. www.atomicfiction.com