disguise and Move.ai have developed Invisible, a real-time markerless motion capture system that automatically transfers a performer’s movements onto a character rig inside disguise’s Designer software.

disguise has developed Invisible, a complete system for real-time markerless motion capture, in partnership with Move.ai. Without relying on markers, dedicated suits or complex calibration procedures, Invisible lets users transfer their movements automatically, in real time, onto a character rig inside disguise’s Designer software.

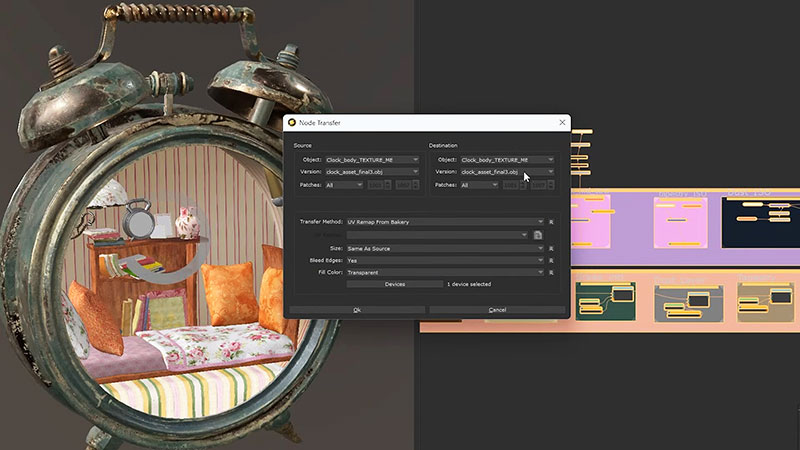

The system, which can track up to two people at once, uses Move.ai’s Invisible real-time markerless mocap software to extract natural human motion from video using artificial intelligence based on computer vision, biomechanics and physics. Once the motion is in the Invisible software, it is visualised in real time as a skeleton in Designer. With one click, the skeleton rig is transferred into Unreal Engine and used to achieve the desired effect, and the result is then mapped out to the LED screen.

Move.ai’s deep learning models are trained to detect key points on the human body in 2D images captured from multiple angles, and the system then triangulates the location of those points in 3D space. The resulting data is passed through a neural network that applies biomechanical and kinematic models, producing a lifelike representation of the actor, their movement and the forces they apply to the ground. This data can then be streamed into a 3D engine.
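The triangulation step can be illustrated with a simple geometric sketch. This is not Move.ai’s method, which the article does not describe in detail; it swaps in the classic two-view midpoint method and assumes two ideal pinhole cameras with known positions, identical axis-aligned orientation and no lens distortion. The helper names (`pixel_to_ray`, `triangulate_midpoint`), the focal length, the principal point and the camera layout are all hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def pixel_to_ray(u: float, v: float, f: float, cx: float, cy: float) -> Vec3:
    """Back-project a detected 2D key point into a viewing-ray direction.

    Assumes an ideal pinhole camera looking down +z with no rotation
    and no lens distortion (a simplification for illustration)."""
    return ((u - cx) / f, (v - cy) / f, 1.0)

def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Estimate a 3D point from rays c1 + t*d1 and c2 + s*d2.

    Returns the midpoint of the shortest segment joining the two rays,
    which tolerates small 2D detection errors that stop the rays meeting."""
    w0 = sub(c1, c2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if math.isclose(denom, 0.0, abs_tol=1e-12):
        raise ValueError("rays are (nearly) parallel; cannot triangulate")
    t = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    s = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = add(c1, scale(d1, t))
    p2 = add(c2, scale(d2, s))
    return scale(add(p1, p2), 0.5)

# Hypothetical setup: two cameras 2 m apart along x, same orientation.
cam1, cam2 = (0.0, 0.0, 0.0), (2.0, 0.0, 0.0)
f, cx, cy = 500.0, 320.0, 240.0
# The same body key point (say, a wrist) as detected in each camera's image.
ray1 = pixel_to_ray(420.0, 340.0, f, cx, cy)
ray2 = pixel_to_ray(220.0, 340.0, f, cx, cy)
point = triangulate_midpoint(cam1, ray1, cam2, ray2)
print(point)  # approximately (1.0, 1.0, 5.0)
```

With more than two camera views, as in a multi-angle rig, a least-squares formulation such as the direct linear transform (DLT) is the usual generalisation of this two-ray case.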

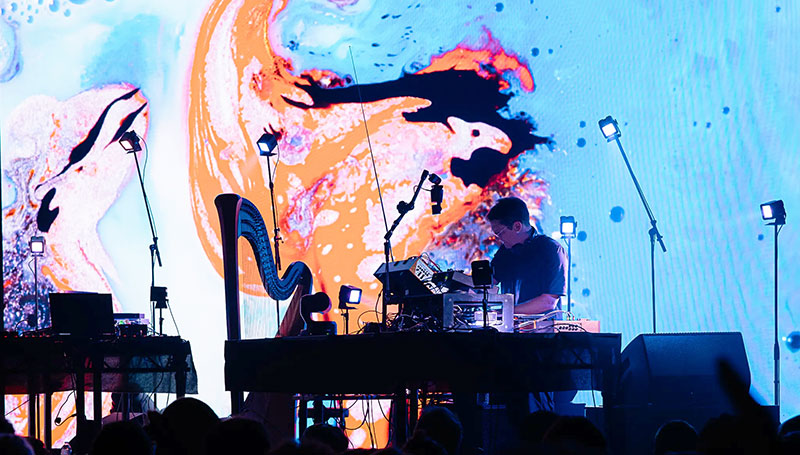

The partnership with disguise makes it possible to use the captured motion in more immediate applications – live broadcasts, concerts and stage performances, and film sets. The goals are speed, ease of use and cost-effectiveness.

Live Motion

For broadcasters, the motion capture data can be used to drive gesture-triggered graphics and scene changes, or to accurately cast the talent’s shadow onto a 3D set. Filmmakers can capture motion for 3D characters that interact directly with real actors, or trigger the animation of effects like smoke and fire. At live events, the Invisible system can transport performers into a game universe populated by avatars that instantly replicate their movements, without the performers wearing mocap suits.
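Casting the talent’s shadow from skeleton data can be approximated by projecting each tracked joint along a directional light onto the set’s floor. The sketch below is an illustration only, not how disguise or Unreal Engine renders shadows: it assumes a y-up coordinate system with the floor at y = 0, a single directional light, and hypothetical joint names and positions.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

def project_to_floor(point: Vec3, light_dir: Vec3) -> Vec3:
    """Slide a point along the light direction until it reaches y = 0."""
    px, py, pz = point
    lx, ly, lz = light_dir
    if ly >= 0.0:
        raise ValueError("light must travel downward (negative y) to reach the floor")
    t = -py / ly  # distance along light_dir needed to reach the floor plane
    return (px + t * lx, 0.0, pz + t * lz)

def project_skeleton(joints: Dict[str, Vec3], light_dir: Vec3) -> Dict[str, Vec3]:
    """Project every tracked joint; the results outline the shadow on the floor."""
    return {name: project_to_floor(p, light_dir) for name, p in joints.items()}

# Hypothetical frame of tracked joints (metres), light arriving at 45 degrees.
joints = {"head": (0.0, 1.7, 0.0), "left_ankle": (-0.1, 0.0, 0.0)}
shadow = project_skeleton(joints, light_dir=(1.0, -1.0, 0.0))
print(shadow["head"])  # (1.7, 0.0, 0.0)
```

Joints already on the floor, such as the ankle, stay where they are, so the shadow stays anchored to the performer’s feet. In practice the skeleton would more likely drive a full character mesh and leave shadowing to the engine; the projection only shows the underlying geometry.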

disguise RenderStream

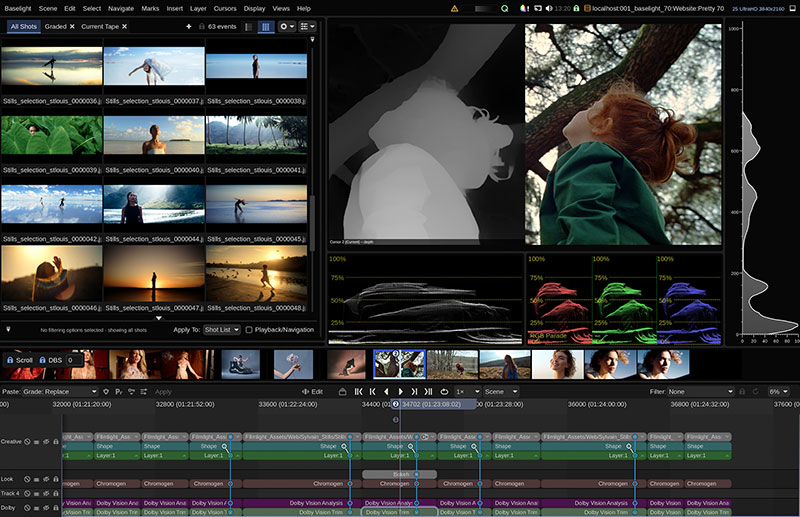

Integrating the motion capture data directly into the disguise Designer software gives the operator control over the performance and makes it visible on the CG characters throughout production. The Invisible software also integrates with the scalable processing of disguise hardware, which maintains high-fidelity real-time output to LED stages and virtual production volumes of various sizes.

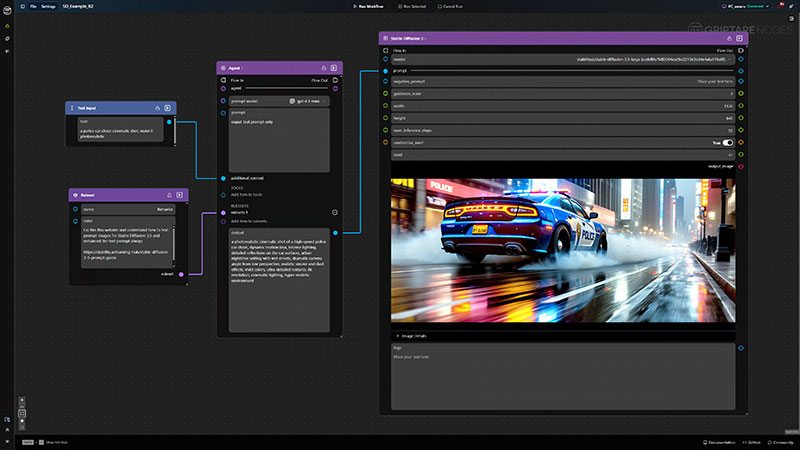

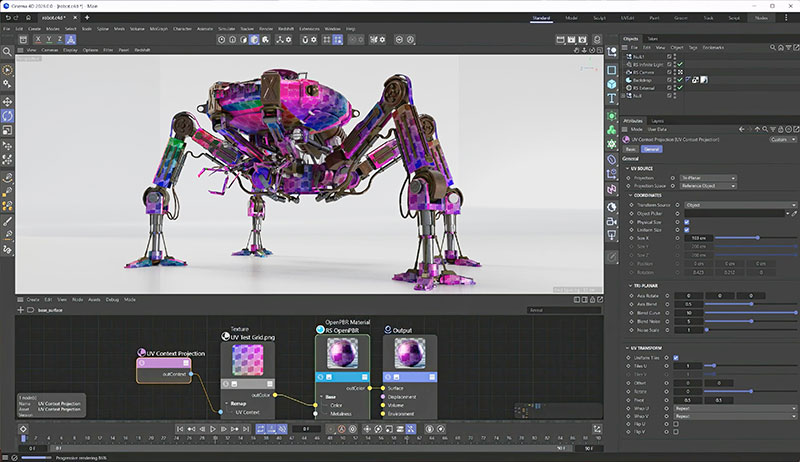

At the same time, disguise’s RenderStream protocol handles the transfer of skeleton data across the disguise Unreal Engine rendering cluster, synchronising content and tracking data more accurately during production and merging the physical and virtual worlds more convincingly. RenderStream integrates the render environment: the disguise processing hardware and Designer software, content engines like Unreal, and camera tracking. In effect, it decouples rendering from the output, distributing the workload across multiple render nodes so that graphics are processed with enough performance to achieve the required look.

Invisible in Action

Apart from the practical advantages of natural performances and a quicker set-up with fewer crew, Invisible may help save time and money by reducing the need for post-production work or for transporting cast and crew to new locations. Creative and technical teams can avoid common motion capture and graphics rendering challenges such as latency, sensor drift, interference and lengthy data cleanup. The system can also support a degree of automation for live motion graphics, which are normally choreographed and controlled manually by an operator working with a tracking system. In some cases, the animation can be triggered automatically by the performer’s movement.
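A movement-triggered cue can be sketched as a small state machine that watches the streamed skeleton. This is a hypothetical example, not a disguise or Move.ai API: it assumes each frame arrives as a dictionary of joint positions with y pointing up, and the joint names, the raised-hand rule and the `hold_frames` debounce value are all illustrative choices.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple

Vec3 = Tuple[float, float, float]
Frame = Dict[str, Vec3]  # hypothetical: joint name -> (x, y, z), y up

@dataclass
class RaisedHandTrigger:
    """Fires a cue once when the right wrist stays above the head."""
    on_fire: Callable[[], None]
    hold_frames: int = 5  # consecutive frames required, to ignore tracking jitter
    _count: int = field(default=0, init=False)
    _armed: bool = field(default=True, init=False)

    def update(self, frame: Frame) -> bool:
        """Feed one skeleton frame; return True on the frame the cue fires."""
        hand_up = frame["right_wrist"][1] > frame["head"][1]
        if hand_up:
            self._count += 1
        else:
            self._count = 0
            self._armed = True  # re-arm only after the hand comes back down
        if self._armed and self._count >= self.hold_frames:
            self._armed = False  # edge-triggered: fire once per gesture
            self.on_fire()
            return True
        return False

# Usage: a scene change fires once per raised-hand gesture.
cues = []
trigger = RaisedHandTrigger(on_fire=lambda: cues.append("scene_change"), hold_frames=3)
up = {"head": (0.0, 1.7, 0.0), "right_wrist": (0.2, 1.9, 0.0)}
down = {"head": (0.0, 1.7, 0.0), "right_wrist": (0.3, 1.0, 0.0)}
results = [trigger.update(f) for f in [up, up, up, up, down, up, up, up]]
print(results)  # [False, False, True, False, False, False, False, True]
```

Requiring the pose to persist for several frames keeps a single noisy frame from firing a cue, and re-arming only after the hand drops stops a held pose from firing repeatedly.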

To demonstrate the disguise and Move.ai integration, experimental creative studio FutureDeluxe took its multi-platform Spirits 3.0 avatars into Unreal Engine. It then built a custom environment for an interactive, markerless motion capture experience on stage at the 2023 Cannes Film Festival in May.

FutureDeluxe

Svet Lapcheva, Executive Producer / Client Partner at FutureDeluxe, said, “Markerless motion tracking in Unreal Engine opens up vast creative possibilities that allow directors, designers and artists to achieve much more in a faster and more reliable way, while seeing a final quality product at every stage of creation.”

Invisible Bundle

Supported exclusively by disguise, the Invisible system includes disguise MX Move data processing hardware purpose-built to run the Invisible software; FLIR Blackfly S GigE machine vision cameras; fixed focal length camera lenses (TAMRON 2.8mm, Goyo 3.5mm or LCM 4mm, chosen according to the size of the LED volume); and a Netgear MS510TXPP network switch, which supplies Power over Ethernet to the cameras.

The bundle is available in three sizes (small, medium and large), chosen according to the size of the stage and the number of performers to track. To enable real-time markerless motion capture, at least one disguise vx or gx machine is also needed for graphics processing, plus one disguise rx II for real-time rendering. www.disguise.one