Unreal Engine 4.27 is now available with updates supporting filmmakers, broadcasters, game developers, architectural visualisation artists, and automotive and product designers.

In-camera VFX

In-camera VFX workflows are now more efficient, and the results are of a quality suitable for wider applications such as broadcast and live events.

Setting up nDisplay, Unreal’s tool for LED volumes and rendering to multiple displays, is simpler thanks to a new 3D Config Editor. All nDisplay-related features and settings are now placed in a single nDisplay Root Actor, which makes them easier to access. Projects with multiple cameras are also easier to set up.

nDisplay now supports OpenColorIO, which improves the accuracy of colour calibration. This helps match the content created in Unreal Engine to what the physical camera captures from the LED volume.

nDisplay supports multiple GPUs for more efficient scaling. This also makes it possible to maximise resolution on wide shots by dedicating a GPU to the in-camera pixels. It also allows shooting with multiple cameras, each with its own uniquely tracked field of view.

A new drag-and-drop remote control web UI builder helps users build complex web widgets without writing code. Users without Unreal Engine experience can then control results from the engine on a tablet or laptop.

Camera Control

The Virtual Camera system built for Unreal Engine 4.26 now includes Multi-User Editing, a redesigned user experience and an extensible core architecture. This means it can be extended with new functionality without modifying the original codebase. A new iOS app, Live Link Vcam, is also available for virtual camera control, so users can drive a Cine Camera inside Unreal Engine from an iPad or other iOS device.

A new Level Snapshots feature saves the state of a given scene so that any or all of its elements can be restored later, for example for pickup shots or during iteration. Users also have more flexibility in producing correct motion blur for travelling shots. The blur can account for the look a physical camera would capture against a moving background.

Epic Games and the filmmakers’ collective Bullitt recently assembled a team to test these in-camera VFX tools by making a short test piece that followed a production workflow.

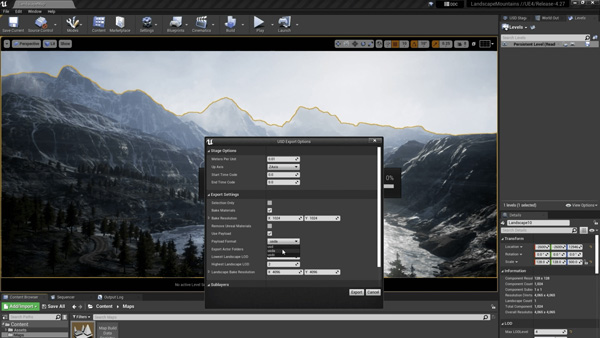

USD, Alembic and Workflow Connections

With this release, a wider range of elements can be exported to USD, including Levels, Sublevels, Landscape, Foliage and animation sequences. Materials can also be imported as MDL nodes. You can now edit USD attributes from the USD Stage Editor, including through Multi-User Editing, and bind hair and fur Grooms to GeometryCache data imported from Alembic.

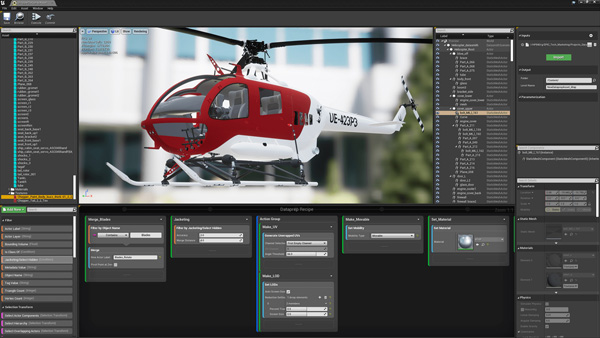

Datasmith is Unreal’s set of tools for importing data from various sources. In 4.27, Datasmith Runtime gives more control over how data is imported, including access to the scene hierarchy. It can also import .udatasmith data into a packaged application built on Unreal Engine. Examples include Twinmotion, the real-time architectural visualisation tool, or a custom real-time design review tool.

A new Archicad Exporter plugin with Direct Link functionality is available, and Direct Link has been added to the existing Rhino and SketchUp Pro plugins. Datasmith Direct Link maintains a live connection between a source DCC tool and an Unreal Engine-based application for simpler iteration. You can also aggregate data from several sources, such as Revit and Rhino, while maintaining links with each DCC tool simultaneously.

GPU Light Baking

Unreal Engine’s GPU Lightmass uses the GPU instead of the CPU to progressively render pre-computed lightmaps. It relies on the ray tracing capabilities of DirectX 12 (DX12) and Microsoft’s DXR framework. It was developed to reduce the time needed to generate lighting data for scenes that require global illumination, soft shadows and other complex lighting effects that are expensive to render in real time.

Because results appear progressively, the workflow is also interactive. Users can stop, make changes and start over without waiting for the final bake. For in-camera VFX and other work, this means virtual set lighting can be modified much faster than before.
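As a rough illustration of why progressive rendering allows early previews, the Python sketch below averages lighting samples for a single texel and yields a refined estimate after each one. It shows a generic Monte Carlo accumulation pattern, not GPU Lightmass’s implementation. The function names and the convergence tolerance are hypothetical.

```python
from typing import Iterable, Iterator, Optional, Tuple


def progressive_estimates(samples: Iterable[float]) -> Iterator[float]:
    """Yield the running mean of lighting samples after each new sample.

    Hypothetical helper: every yielded value is a usable (noisy) preview,
    and later values converge toward the final baked result.
    """
    total = 0.0
    count = 0
    for sample in samples:
        total += sample
        count += 1
        yield total / count


def bake_until_converged(
    samples: Iterable[float], tolerance: float
) -> Tuple[Optional[float], int]:
    """Stop early once successive estimates differ by less than `tolerance`.

    Returns (estimate, samples_used). With no samples, returns (None, 0).
    """
    previous: Optional[float] = None
    used = 0
    for used, estimate in enumerate(progressive_estimates(samples), start=1):
        if previous is not None and abs(estimate - previous) < tolerance:
            return estimate, used
        previous = estimate
    return previous, used
```

Because each intermediate estimate is already meaningful, a user can inspect it, stop, change the scene and restart. This is the interactivity described above.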

VR, AR and Mixed Reality

Production-ready support for the OpenXR framework has been added, making it easier to create extended reality content (VR, AR and mixed reality) in Unreal Engine. OpenXR simplifies and unifies AR/VR software development. Applications can run on a wider variety of hardware platforms without being ported or rewritten, and compliant devices can access more applications.

The Unreal OpenXR plugin allows users to target multiple XR devices with the same API. It now supports Stereo Layers and Splash Screens. It can also query Playspace bounds to determine which coordinate space camera animation should be played relative to. Extension plugins from the Marketplace can add functionality to OpenXR without waiting for new engine releases. The VR and AR templates have been redesigned, with more built-in features and faster project set-up.

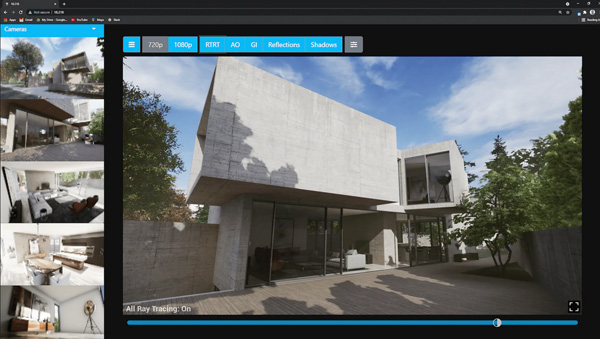

Containers in the Cloud

Epic Games has continued to develop Pixel Streaming, which is now production-ready and uses an upgraded version of WebRTC. It allows Unreal Engine, and applications built on it, to run on a cloud virtual machine. End users anywhere can then use them as normal through a regular web browser on any device. In 4.27, Pixel Streaming also supports Linux and can run from a container environment.

Containers are software packages that include everything needed to run in any environment, including the cloud. The new support for containers on Windows and Linux means that Unreal Engine can act as a self-contained, foundational technical layer.

Container support enables new cloud-based development workflows and deployment strategies, such as AI/ML engine training, batch processing and rendering, and microservices. With continuous integration/continuous delivery (CI/CD), applications can be built, tested, deployed and run in a continuous process. Unreal Engine containers can support production pipelines, cloud application development, enterprise deployment at scale and other development work.

www.unrealengine.com