Pixotope, the live photo-realistic virtual production system from The Future Group, has been updated to version 1.3, which helps creators improve interactions between their virtual environments and real-world elements.

Pixotope forms the central production hub when creating mixed-reality (MR) content for broadcast and live events. Its tools help producers combine physical components, such as presenters, actors, props and free-moving cameras, with virtually created assets such as backgrounds, graphics, animated characters or any other CG elements. Version 1.3 has new object tracking and lighting integration functionality, and more control over colour management.

The Future Group’s Chief Creative Officer Øystein Larsen said, “The success of a mixed reality scene depends on the relationship and interactivity between real and virtual components. Part of this success depends on technical accuracy, such as matching lighting, replicating freely moving cameras and precise, invisible keying. But there is also an emotional aspect which flows from enabling presenters and actors to freely express themselves through unrestricted movement, and interacting with virtual objects as they would real objects. Version 1.3 of Pixotope improves on results in both of these areas.”

Using Real-time Tracking Data

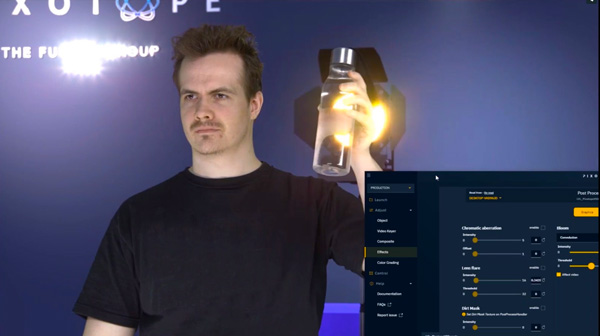

A major advance is the ability to integrate and use data from real-time object tracking systems. Pixotope can take the positions of moving tracking locators in the real-world environment and attach them to digitally created objects, so that those objects follow the tracked motion. This means presenters can pick up and rotate graphics or any other virtually generated asset in a natural, expressive manner, opening up many more creative possibilities. From showing a 3D model in the palm of their hand to controlling any aspect of a virtual scene with their own physical movement, presenters and actors become free to interact with the virtual world around them.
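As a rough illustration of attaching a virtual object to a tracked locator, the sketch below places an object at a fixed offset from the locator and rotates that offset with the locator's heading. It is not Pixotope's API: the `Pose` class, the `attach_to_locator` function, the yaw-only rotation and the z-up axis convention are all assumptions made for the example.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Pose:
    """Hypothetical tracked pose: position in metres, heading in degrees (z is up)."""
    x: float
    y: float
    z: float
    yaw_deg: float


def attach_to_locator(locator: Pose, offset: Tuple[float, float, float]) -> Pose:
    """Return the pose of a virtual object held at `offset` from the locator.

    The offset is given in the locator's own frame, so it is rotated by the
    locator's yaw before being added to the locator's position.
    """
    yaw = math.radians(locator.yaw_deg)
    ox, oy, oz = offset
    world_x = locator.x + ox * math.cos(yaw) - oy * math.sin(yaw)
    world_y = locator.y + ox * math.sin(yaw) + oy * math.cos(yaw)
    world_z = locator.z + oz
    # The object inherits the locator's heading so it turns with the hand or prop.
    return Pose(world_x, world_y, world_z, locator.yaw_deg)


# A locator on the presenter's hand, turned 90 degrees, with the model 10 cm in front.
hand = Pose(1.0, 2.0, 1.5, 90.0)
model = attach_to_locator(hand, (0.1, 0.0, 0.05))
```

Calling this once per tracking update would make the object follow the locator. A production system would use full 3D rotations rather than yaw alone.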

Another advantage of Object Tracking is that presenters themselves can be tracked, so that Pixotope is continuously aware of where in the scene they are. A challenge in normal virtual studios is that presenters must be careful about where they stand and when. Presenters cannot walk in front of graphics that have been composited over the frame, for example. However, by reading the presenter's position and orientation through its Object Tracking interface, Pixotope recognises where the presenter is relative to other generated items in three-dimensional space and handles the occlusions accordingly. Actors are free to walk in front of, behind or even through virtual objects.
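The occlusion idea can be sketched with a simple back-to-front ordering: once the presenter's position is known, everything in the scene can be sorted by distance from the camera and drawn farthest first. This is a generic painter's-algorithm illustration, not a description of how Pixotope resolves occlusion. The `draw_order` function and the scene names are hypothetical, and walking through an object would need per-pixel depth comparison rather than per-object sorting.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def draw_order(camera: Point, items: Dict[str, Point]) -> List[str]:
    """Return item names sorted farthest-first, so nearer items are drawn on top."""
    return [
        name
        for name, position in sorted(
            items.items(),
            key=lambda entry: math.dist(camera, entry[1]),
            reverse=True,
        )
    ]


camera = (0.0, 0.0, 0.0)

# Presenter stands 3 m from the camera, the virtual graphic is 5 m away:
# the graphic is drawn first and the presenter ends up in front of it.
in_front = draw_order(camera, {"presenter": (0.0, 3.0, 0.0), "graphic": (0.0, 5.0, 0.0)})

# Presenter walks back to 6 m: now the graphic is drawn last and covers them.
behind = draw_order(camera, {"presenter": (0.0, 6.0, 0.0), "graphic": (0.0, 5.0, 0.0)})
```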

Matching Lights and Colour

Also new in Pixotope Version 1.3 is the ability to control physical lights using DMX512 over the Art-Net distribution protocol. This enables Pixotope to synchronise and control any DMX-controllable feature of physical studio lights with controls from the digital lighting set-up used to illuminate virtual scenes. As a result, light shining on the real set will match the light in the virtual scene. Lights can then be driven either by an animation pre-set or by a new Slider widget available for user-created Pixotope control panels. These panels can be accessed via a web browser on any authorised device and be operated either by a technician or the presenter.
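To give a sense of what DMX512 over Art-Net looks like on the wire, the sketch below builds a single ArtDmx packet and maps a slider position to a channel level. The packet layout follows the published Art-Net specification, while the function names, the 0.0 to 1.0 slider range and the node address in the usage note are assumptions for illustration. Nothing here reflects how Pixotope implements its own lighting control.

```python
import socket
import struct

ARTNET_PORT = 6454  # standard Art-Net UDP port


def slider_to_dmx(value: float) -> int:
    """Map a slider position in 0.0..1.0 to a DMX channel level in 0..255."""
    clamped = min(max(value, 0.0), 1.0)
    return int(clamped * 255 + 0.5)


def build_artdmx(universe: int, channels: bytes, sequence: int = 0) -> bytes:
    """Build one ArtDmx packet carrying up to 512 channel levels."""
    if not 0 <= universe <= 0x7FFF:
        raise ValueError("universe must fit in 15 bits")
    if not 1 <= len(channels) <= 512:
        raise ValueError("a DMX universe carries 1 to 512 channels")
    data = bytes(channels)
    if len(data) % 2:
        data += b"\x00"  # Art-Net requires an even data length
    header = (
        b"Art-Net\x00"                      # packet ID
        + struct.pack("<H", 0x5000)         # OpCode ArtDmx, little-endian
        + struct.pack(">H", 14)             # protocol version, big-endian
        + bytes([sequence & 0xFF, 0])       # sequence, physical port
        + bytes([universe & 0xFF, (universe >> 8) & 0x7F])  # SubUni, Net
        + struct.pack(">H", len(data))      # data length, big-endian
    )
    return header + data


def send_artdmx(node_ip: str, packet: bytes) -> None:
    """Send a packet to an Art-Net node over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (node_ip, ARTNET_PORT))


# Slider at half: channel 1 (dimmer) at about 50 percent, channels 2-3 fully on.
packet = build_artdmx(0, bytes([slider_to_dmx(0.5), 255, 255]))
```

Sending would then be a call such as `send_artdmx("192.168.1.50", packet)`, where the address is a placeholder for whatever Art-Net node sits on the studio network.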

Pixotope Version 1.3 also further improves the results of chroma keying for green screen studios with new functions that help extract greater detail, like fine strands of hair and shadows. New algorithms process key edges to sub-pixel accuracy, improve colour picking and automate the reduction of background screen colour spill.
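For readers unfamiliar with spill reduction, one textbook approach is to cap the green channel of each foreground pixel at the average of its red and blue channels. The sketch below shows that generic technique only. Pixotope's own algorithms are not described in the announcement, and the function name and the 0.0 to 1.0 colour range are assumptions.

```python
from typing import Tuple

Pixel = Tuple[float, float, float]  # (red, green, blue), each in 0.0..1.0


def suppress_green_spill(pixel: Pixel) -> Pixel:
    """Limit green to the average of red and blue, a common despill rule."""
    r, g, b = pixel
    limit = (r + b) / 2
    return (r, min(g, limit), b)


# A hair strand tinted by light bounced off the green screen is pulled back.
tinted = suppress_green_spill((0.5, 1.0, 0.25))

# A pixel with no excess green passes through unchanged.
neutral = suppress_green_spill((0.5, 0.25, 0.5))
```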

Colour Management control can now be accessed in Pixotope’s Editor Viewport to make sure that artists working in any practical colour space, including HDR, feel confident of the colour fidelity of the images they are creating.

Pixotope is natively integrated with the Unreal game engine, and in Pixotope 1.3 all the new functions in UE Version 4.24 are available to users. The changes include layer-based terrain workflows that help create adaptable landscapes, dynamic physically accurate skies that can be linked to actual time-of-day, improved rendition of character hair and fur, as well as increased efficiency for global illumination that helps to create photo-real imagery.

www.futureuniverse.com