Jason Katterhenry’s IT approach to production storage relies on the visibility Qumulo Core delivers to help manage performance and capacity for Arizona Public Media’s editorial team.

Jason Katterhenry has been working at Arizona Public Media, a PBS and NPR affiliate, for the past 17 years as Director of IT Operations. His long-term involvement with the company’s information technology gives him an interesting perspective on their three television stations, four radio stations and online news outlet. About four years ago they faced cost, capacity and customer service issues with their technology vendors, which led them to start evaluating new storage systems.

Their operations at that time were organised over two separate shared file systems. One powered their operational infrastructure and the other connected staff to their editing suites.

“It was hard to get information about what was going on when a problem emerged. The troubleshooting step was very difficult,” Jason said. Adding capacity or throughput was costly as well, especially because downtime was required to restructure the system properly. The system also couldn’t handle the workloads associated with regular peak periods, which caused frustration among the team and management, productivity issues and delays.

“We produce two TV shows with deliverables due on Fridays. Inevitably everyone would wait until the last minute, needing four or five workstations trying to push out 4K video. The system would grind to a halt and we would have to export in series, rather than in parallel.”

An IT Eye View

Jason evaluated Qumulo Core, a set of data lifecycle management tools used to store and manage active file data in a single namespace with intelligent scaling. Users can run real-time IT operational analytics across all files and users, and an API with support for different protocols lets them build automated workflows and applications. Eventually, Arizona Public Media moved to a Qumulo system.

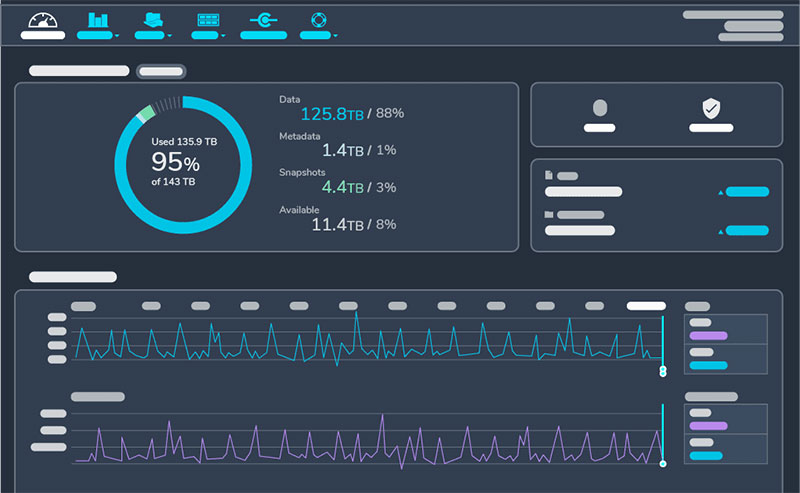

Jason likes the Qumulo dashboard, which displays an overview of storage server cluster analytics and data, visualising their storage usage information in one place – capacity information, throughput activity, client connections and so on. Clustering is the use of two or more storage servers working together to increase performance, capacity or reliability, while distributing and managing workloads between the servers. The server analytics are refreshed every five seconds, which is essentially real time – in short, the Qumulo dashboard prevents data blindness.

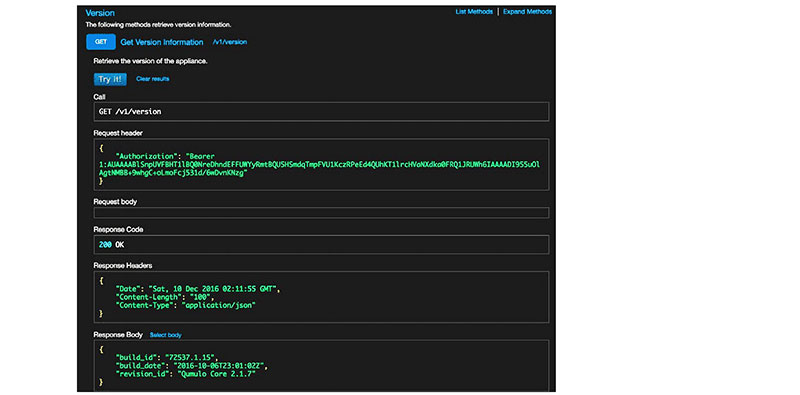

Immediate insight into throughput trends and hotspots means the user can set capacity quotas in real time, instead of spending time provisioning quotas based on past performance. Information is accessible through a GUI, and a REST API allows users to access the information programmatically. RESTful APIs use HTTP requests to access and use data. The HTTP methods GET, PUT, POST and DELETE correspond to reading, updating, creating and deleting resources.
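The mapping between HTTP methods and operations on resources can be sketched in a few lines of Python. This is only an illustration of the general REST pattern, not Qumulo's actual API: the base URL, the `quotas` resource name, the token and the `build_request` helper are all hypothetical, and the requests are built but never sent.

```python
import json
from urllib.request import Request

# Hypothetical base URL for illustration only; not a real Qumulo endpoint.
BASE_URL = "https://storage.example.com/api"

# The four operations named in the text and the HTTP method each one uses.
CRUD_TO_HTTP = {
    "create": "POST",
    "read": "GET",
    "update": "PUT",
    "delete": "DELETE",
}


def build_request(operation, resource, payload=None, token="EXAMPLE-TOKEN"):
    """Build (but do not send) an HTTP request for one operation on a resource."""
    method = CRUD_TO_HTTP[operation]
    # Only requests that carry a payload get a JSON-encoded body.
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    headers = {"Authorization": f"Bearer {token}", "Accept": "application/json"}
    if data is not None:
        headers["Content-Type"] = "application/json"
    url = f"{BASE_URL}/{resource.lstrip('/')}"
    return Request(url, data=data, headers=headers, method=method)


if __name__ == "__main__":
    # "quotas" and the payload fields are made-up names for this sketch.
    for op, body in [("read", None), ("update", {"limit_bytes": 10**12})]:
        req = build_request(op, "quotas", body)
        print(req.get_method(), req.full_url)
```

Passing a request like this to `urllib.request.urlopen` would actually send it. A real integration would take its endpoints and authentication scheme from the vendor's API documentation.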

Team of Two

The ability to continuously and closely monitor capacity usage had not initially been a priority for Jason when he began assessing storage options. But when he saw Qumulo’s features for such tasks, he recognised their value. They would make the system relatively easy for him to manage, which is important because his team consists of just himself and one other person.

Qumulo REST API – executing calls against the live cluster.

Two relatively new Qumulo features also help small teams manage the dynamic nature of broadcast storage systems. One of them addresses the need to identify cold data that has not been accessed for some time, and move it to a less expensive storage option. Many storage vendors have ways of handling this task, but Qumulo’s new implementation of a last access time for data has been built to balance flexibility with performance – that is, more data management control for administrators without sacrificing performance. The time granularity can be set to hourly, daily or weekly, and the feature is controlled by a cluster-wide setting that defaults to ‘off’.
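To make the cold-data idea concrete, here is a minimal sketch of how a last access time can be used to pick out files that have sat idle for a given period. It uses the generic POSIX-style access time that Python exposes through `os.stat`, not Qumulo's own implementation. The `find_cold_files` function and its parameters are hypothetical names chosen for this example.

```python
import os
import time
from pathlib import Path

SECONDS_PER_DAY = 86400


def find_cold_files(root, max_idle_days, now=None):
    """Return files under root whose last access time is older than the cutoff.

    `now` can be supplied as a Unix timestamp to make the result reproducible.
    """
    now = time.time() if now is None else now
    cutoff = now - max_idle_days * SECONDS_PER_DAY
    cold = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            # st_atime is the last access time recorded by the file system.
            if path.stat().st_atime < cutoff:
                cold.append(path)
    return sorted(cold)


if __name__ == "__main__":
    # List files under the current directory not accessed in the last 30 days.
    for path in find_cold_files(".", max_idle_days=30):
        print(path)
```

The result is only as accurate as the access times the file system records. Some systems update them coarsely or not at all, which is one reason granularity settings like those described above matter.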

Qumulo’s new write allocation algorithm helps deal with the fact that storage systems become slower over time, especially for rapid data ingest. The main cause is fragmentation, which spreads data unevenly across a namespace, so that complex calculations occurring in the middle of a write operation slow the system down. The typical solution has been to run costly defragmentation jobs in the background to reclaim performance. Instead, the new algorithm maintains long-term performance by placing data in the optimal location across a cluster and planning ahead to ensure low-latency writes, no matter the age of the cluster.

Ongoing Operations

Other Qumulo Core features that Jason likes but hasn’t had access to previously include audit logging, which tracks filesystem operations. As connected clients issue requests to the cluster, log messages are generated describing each attempted operation.

SSD caching in Qumulo Core uses SSDs to store data layout information, to serve as a fast persistence layer for data modifications and to cache file system data and metadata for fast data retrieval. In contrast, the HDDs are used to store data that is accessed less frequently – the data is still available, but not taking up space on the SSD.

Tucson, Arizona

Several types of replication are available, including versioning, failover and failback, and continuous replication, along with instantaneous snapshots that write new data alongside the original data. This process means no storage is consumed when a snapshot is taken, and no performance penalty occurs when taking a snapshot or when reading and writing data from the filesystem in the presence of snapshots.
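The reason a snapshot of this kind consumes no storage when it is taken can be shown with a toy model. The sketch below is not Qumulo's implementation. It is a simplified, hypothetical `ToyVolume` class in which taking a snapshot only records a marker, and a later write to the same block is stored alongside the older version rather than replacing it.

```python
class ToyVolume:
    """Toy block store in which new writes sit alongside snapshotted versions."""

    def __init__(self):
        self._versions = {}  # block_id -> list of (epoch, data), oldest first
        self._epoch = 0      # increases by one each time a snapshot is taken

    def write(self, block_id, data):
        versions = self._versions.setdefault(block_id, [])
        if versions and versions[-1][0] == self._epoch:
            # No snapshot depends on the latest version, so replace it.
            versions[-1] = (self._epoch, data)
        else:
            # A snapshot may reference the older version: keep it, add the new one.
            versions.append((self._epoch, data))

    def snapshot(self):
        """Take a snapshot by recording the current epoch; no data is copied."""
        snap = self._epoch
        self._epoch += 1
        return snap

    def read(self, block_id, snapshot=None):
        """Read the live data, or the data as it was when `snapshot` was taken."""
        epoch = self._epoch if snapshot is None else snapshot
        for version_epoch, data in reversed(self._versions.get(block_id, [])):
            if version_epoch <= epoch:
                return data
        return None

    def stored_blocks(self):
        """Count every stored block version, live or retained for a snapshot."""
        return sum(len(v) for v in self._versions.values())


if __name__ == "__main__":
    vol = ToyVolume()
    vol.write(0, b"rough cut")
    snap = vol.snapshot()          # no extra blocks are stored at this point
    vol.write(0, b"final cut")     # the old version is kept for the snapshot
    print(vol.read(0), vol.read(0, snap), vol.stored_blocks())
```

In this model, extra space is used only once data changes after a snapshot, and reads of live data never need to consult the older versions.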

S3 Shift

Most recently, Arizona Public Media have started to use Qumulo Shift for AWS S3, a free cloud service available as part of the file system that gives users access to AWS cloud applications and services with their file data. So far the organisation has used it for disaster recovery and making media files available to producers working remotely, not actually using it for editing but as a media transfer mechanism.

Qumulo released their first S3 support earlier in 2022 and are planning to develop it further. S3 is rapidly gaining acceptance as a protocol for unstructured data for many uses, like internet storage, backup and disaster recovery, analytics and archiving. It has also been adopted by the major cloud and on-premises object storage providers. Mainly though, for a company like Arizona Public Media, support for S3 increases options.

It means they will be able to run built-for-cloud applications on premises for better performance, security or optimised cost. It will allow them to support hybrid file and object workflows through the same storage system, simplifying workflows and avoiding unnecessary data movement. An example is the ability to run Apache Spark workloads directly against a Qumulo cluster using S3 connectors. S3 also works well with Qumulo’s other protocols – users can now access their data via SMB, NFS, REST, FTP and S3.

Staying Up to Date

Finally, Qumulo delivers upgrades frequently and continuously and, in turn, makes upgrading at the customer end very simple. For instance, the software is containerised so that upgrading to a new version is nearly instantaneous, and a dedicated user interface shows exactly how far the system has progressed through the upgrade process.

Most recently, Qumulo added a new rolling option for the fairly rare occasions, such as a firmware upgrade, when users need to reboot the underlying platform. This new experience is fast and simple, keeps the cluster online and is non-disruptive for NFS (Network File System) protocol clients. As a result, Qumulo has determined that at least two thirds of their users are running software less than six months old. qumulo.com