Origo Studios, one of Europe’s largest film studios, upgraded its storage systems and scaled its post environment through cloud services to handle its growing volumes of unstructured data.

Production and post facility Origo Studios in Budapest, one of the largest film studios in Europe, has upgraded its storage systems and scaled up its post production environment. It has incorporated cloud services to help handle the rapidly growing volumes of unstructured data generated by the productions its team works on.

The studio opened in 2010, and soon made a name for itself with its work on blockbusters like Inferno (2016), Atomic Blonde (2017) and Blade Runner 2049 (2017). Most of the interior scenes of Dune were also shot at Origo. With 10 stages, including dedicated greenscreen stages optimised for visual effects, plus a modern, full service post production house called Origo Digital Film, the company is considered a Hollywood-standard filmmaking facility.

Origo Digital Film’s services are led by László Hargittai, a recipient of the Béla Balázs Award, Hungary’s most prestigious filmmaking award. The team oversees 16mm and 35mm negative processing, 2K and 4K dailies and final finishing support for the facility’s feature film, TV and commercial projects.

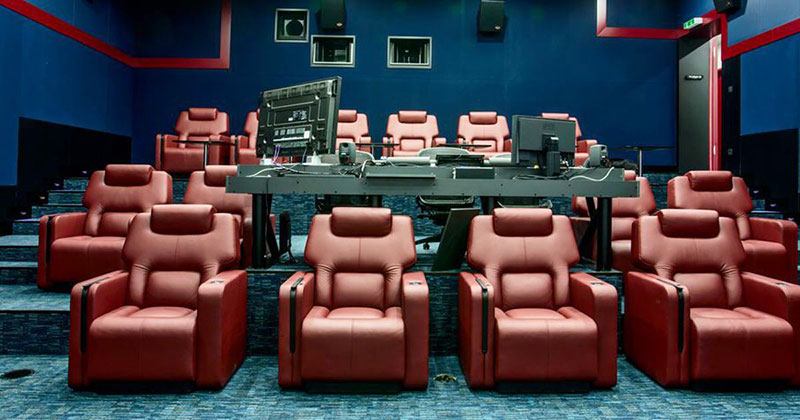

Inside Origo Digital

For scanning and recording, Origo Digital operates a DFT Scanity 4K real-time scanner, an ARRISCAN scanner and an ARRILASER 2 HS film recorder. Its editorial teams work in Avid or Final Cut Pro, collaborating through shared storage. VFX and online finishing for film and TV are performed in 2K/4K, HD and SD, and other finishing tasks can be supported using uncompressed 4:4:4 signal processing. The studio’s post teams are skilled in Autodesk Flame, Baselight and ProTools.

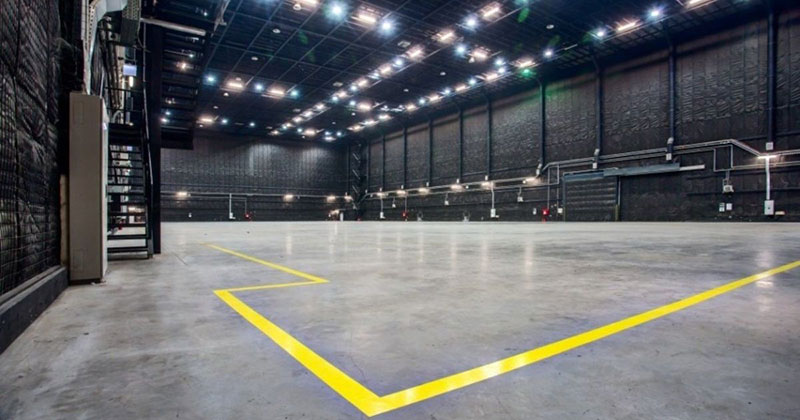

Sound stages

The team specialises in encoding video masters to a variety of file formats needed for streaming and broadcast playback devices, or for further work on clients’ computers downstream. Origo operates a highly secure fibre-based global data delivery pipeline, sending files over the internet to support dailies, and runs a high-speed dedicated network for remote colour correction and studio approvals. Its two client screening rooms are capable of 35mm, 2K/4K data and video projection, supporting two digital intermediate suites.

Across all of these operations, Origo needs to rely on a fast storage system that supports constant data transformation between formats without dropping frames or holding up processes. Consequently the studio has worked through several changes to its storage infrastructure. László said, “At the time we opened in 2010, our studio was using an Isilon storage system. But at about the same time, Dell acquired Isilon and shortly afterward began to take the development of its systems in a direction that no longer suited our purposes as well.” The studio realised that to make their operation sustainable, they would need to find an alternative, and considered various options, including the cloud.

Connecting Edge to Cloud

They adopted the HPE GreenLake hybrid cloud system, which organisations like Origo use to connect their teams’ data, wherever it is stored, to a private data centre in the cloud. HPE calls GreenLake an edge-to-cloud system, defining edge as the point where data is first collected, generated or stored – from cameras and other kinds of sensors, scanners and finishing suites like those described above, to servers and small data rooms.

László Hargittai, Head of Post, Origo Studios. (Image: Zoltán Havran)

However, while sensors are becoming more sensitive, and productions are more numerous and generating more data, the cost of bandwidth isn’t decreasing at an equally fast rate. Since moving data is still very expensive, collecting and processing it locally can be more cost efficient than trying to centralise processing in the cloud.

At the same time, applications requiring low latency are expanding, including the post services Origo carries out. When organisations lack the time to send data to the cloud and wait for results, processing power at the edge becomes valuable. HPE GreenLake aims to bring a cloud-like experience to the edge and increase the potential for new low-latency services. Its edge computing approach is characterised by high bandwidth, ultra-low latency and real-time access to network information that can be used by several applications.

File Data as a Service

Origo recognised that HPE GreenLake’s storage environment and functionality had features that the facility needed, but at first found it a challenge to manage and control their files and file data. Their team was encountering bottlenecks when storing 4K images generated by their Scanity system. During grading sessions, the playback of uncompressed 4K images was not real-time, and frames were dropped.

This situation changed when they began using Qumulo’s file data software, which they found could be delivered as-a-service through the HPE GreenLake platform. “For upcoming film ‘Poor Things’, for instance, Origo served as dailies processor. The project was shot on film, coming in at 170MB/frame that had to be played back in real time across six workstations,” László said. “We were working with uncompressed DPX or EXR files, sometimes even playing the footage back in reverse.

“The performance we achieved with Qumulo and HPE GreenLake really impressed us. We’re now able to perform tasks like colour grading without dropping any frames. It’s making the whole post production process faster and more efficient including film scanning, dailies processing, editing, audio-post and colour finishing. In particular it has made a noticeable difference for our team and helped keep our production deadlines on track.”
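To put the quoted figures in perspective, the sustained read throughput they imply can be estimated with simple arithmetic. The sketch below is a back-of-envelope calculation only: the 170MB frame size and the six workstations come from László’s account, while the 24 frames-per-second playback rate and the idea that all six workstations stream at once are assumptions made here for illustration, not figures supplied by Origo.

```python
# Back-of-envelope throughput estimate for uncompressed frame playback.
# Frame size and workstation count are quoted in the article; the frame
# rate is an ASSUMPTION (24 fps is a common feature-film rate).

FRAME_SIZE_MB = 170   # per-frame size quoted in the article
WORKSTATIONS = 6      # number of workstations quoted in the article
ASSUMED_FPS = 24      # assumption, not stated in the article


def stream_rate_mb_per_s(frame_size_mb, fps):
    """Sustained read rate needed for one real-time playback stream."""
    return frame_size_mb * fps


def aggregate_rate_mb_per_s(frame_size_mb, fps, streams):
    """Upper bound if every stream plays back at the same time."""
    return stream_rate_mb_per_s(frame_size_mb, fps) * streams


per_stream = stream_rate_mb_per_s(FRAME_SIZE_MB, ASSUMED_FPS)
aggregate = aggregate_rate_mb_per_s(FRAME_SIZE_MB, ASSUMED_FPS, WORKSTATIONS)

# Decimal units: 1 GB = 1000 MB.
print(f"Per stream: {per_stream / 1000:.2f} GB/s")
print(f"All {WORKSTATIONS} workstations: {aggregate / 1000:.2f} GB/s")
```

Under those assumptions, a single stream needs roughly 4GB/s of sustained reads, and six simultaneous streams would need about six times that. The real load would depend on the actual frame rate and on how many workstations were playing back at any one moment.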

Cloud-based File System Layer

While HPE GreenLake looks after the backbone of the post environment – bandwidth, power, number of machines and so on – Qumulo functions as the software file system layer, simplifying the management and optimisation of the storage system and handling large quantities of data regardless of their physical location. Built into its file data platform are tools for managing the data lifecycle from ingest and transformation, to publishing applications and finally to archive.

It operates through services – dynamic scaling to store huge amounts in a single namespace, caching to support high-compute workloads and thousands of active users, with monitoring and visibility using real-time analytics.

Through its APIs, it can be integrated into and used to automate workflows and applications. Qumulo also has data protection features to support disaster recovery, backups or user management, plus automatic data protection from external threats through encryption. Also important in Origo’s case, Qumulo can be used to move data to the cloud, without re-factoring applications, by transforming data from file to object, and using file data with cloud-native applications that are designed for object data. www.qumulo.com