The new Pixotope 24.1.0 has many updates supporting virtual production workflows, including pipelines and tracking, plus a full integration of Unreal Engine 5.4 incorporating Motion Design.

Media playback now includes both 2D and 3D graphics.

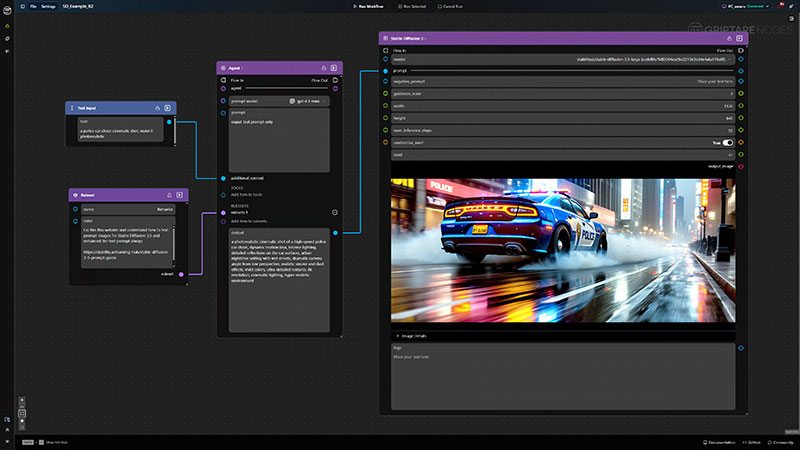

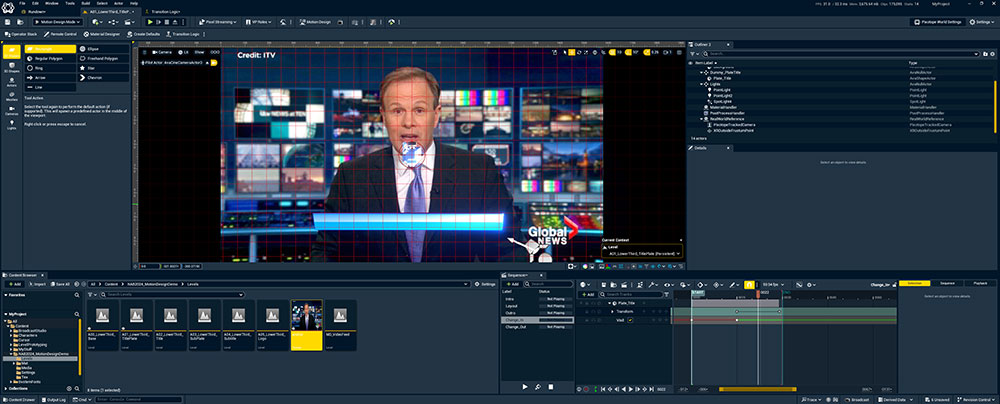

Motion and production graphics are central to this release and can now be created directly in the engine itself, thanks to Unreal Engine 5.4’s new Motion Design mode, which includes specialised features for the 2D canvas and an upgraded World Outliner (a hierarchical tree view of all scene elements). Users can author complex 2D motion graphics with tools ranging from 3D cloners, effectors and modifiers to animators.

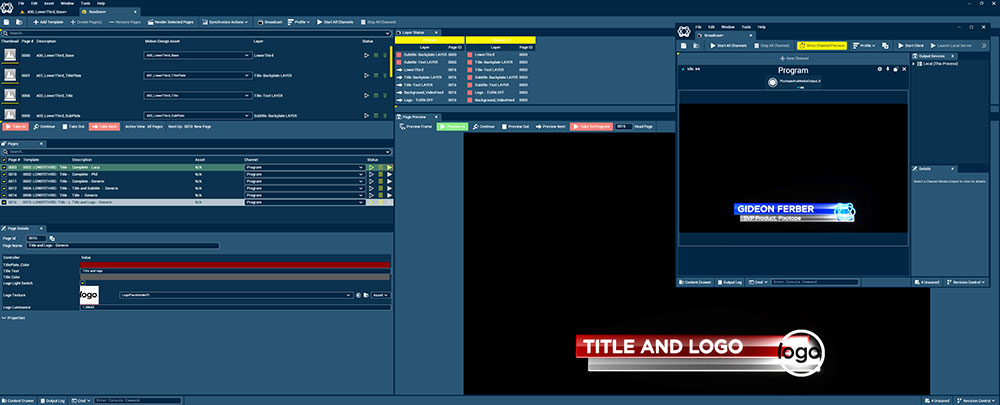

Pixotope Broadcast Graphics

Pixotope Broadcast Graphics is a new product, developed with Pixotope’s partner Erizos and based on Erizos Studio, which supports Pixotope AR, VS and XR as well as Unreal Motion Design. Using Erizos Studio, producers can control Unreal Engine and create custom graphics in real time while staying within the broadcast workflow.

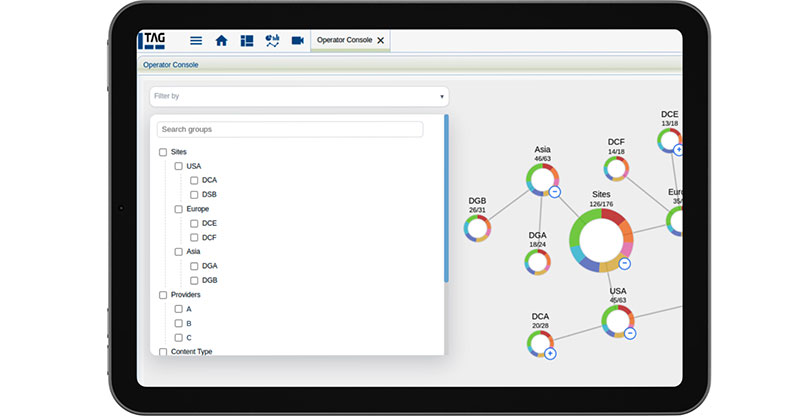

Several engines, from Pixotope, Vizrt and Singular.live as well as Unreal Engine, and multiple aspects of a project can be controlled simultaneously, using template-based workflows to drive CG overlay graphics, AR elements, a virtual studio and an XR wall. Through integration with newsroom control systems via the MOS protocol, users can also automate graphics playout, incorporating external data where required.

The flexibility of Erizos Studio lets operators focus on their production and story without undue concern for the underlying software. It is also simpler to learn than many engines, because an operator only has to learn the one Erizos application to control multiple platforms.

Animation

For animators, Motion Matching is an extensible framework for animation features. Instead of using complex logic to select and transition between animation clips at runtime, it searches a large database of captured animation, using the character’s current in-game motion as the search key.
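The search at the heart of Motion Matching can be pictured as a nearest-neighbour lookup. The Python below is a minimal, brute-force sketch, not Unreal’s implementation: the `Frame` type, the two-number feature vectors (forward speed and turn rate) and the weights are all hypothetical, and production systems typically use richer pose and trajectory features with accelerated search structures.

```python
from dataclasses import dataclass
from math import inf


@dataclass
class Frame:
    clip: str        # source animation clip
    index: int       # frame number within the clip
    features: tuple  # hypothetical features: (forward speed, turn rate)


def weighted_sq_distance(a, b, weights):
    """Weighted squared Euclidean distance between two feature vectors."""
    return sum(w * (x - y) ** 2 for x, y, w in zip(a, b, weights))


def best_match(database, query, weights):
    """Brute-force search for the frame whose features are closest to the query."""
    best, best_cost = None, inf
    for frame in database:
        cost = weighted_sq_distance(frame.features, query, weights)
        if cost < best_cost:
            best, best_cost = frame, cost
    return best


database = [
    Frame("idle", 0, (0.0, 0.0)),
    Frame("walk", 12, (1.2, 0.1)),
    Frame("run", 5, (3.5, 0.0)),
]
# Query built from the character's current motion: moving forward at 1.0, not turning
match = best_match(database, query=(1.0, 0.0), weights=(1.0, 1.0))
print(match.clip, match.index)  # walk 12
```

Each frame the animation system would rebuild the query from the character’s state and jump to the best-matching frame, which is what replaces hand-authored transition logic.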

Character rigging is expanded with essential tools including a modular control rig, automatic retargeting, a skeletal editor and a deformer graph. For animation authoring, users have access to Unreal 5.4’s new Gizmos, reorganised Anim Details, improvements to the Constraints system and new Layered Control Rigs. Sequencer has been updated as well, for instance to improve the readability of the Sequencer Tree, and keyframes are now scriptable, which opens the way to customised animation tools.

Rendering

Nanite, Unreal’s virtualised geometry system, uses an internal mesh format and rendering technology to render extreme detail and high object counts. It now supports dynamic tessellation, so fine details such as cracks and bumps can be added at render time. Nanite also supports spline mesh workflows, which are useful for creating roads on landscapes and similar features.

The AnimToTexture plugin now works with Nanite geometry. The plugin bakes animation into textures so that Static Meshes can be animated to look and behave like Skeletal Meshes. This matters for performance optimisation, because rendering animated skeletal meshes is computationally very demanding.
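The idea behind baking animation into textures can be sketched in a few lines. This minimal Python illustration assumes a simple per-vertex bake: each row of the “texture” holds one frame’s vertex offsets from the rest pose, and at runtime a vertex shader only has to add the offset for the current frame, with no skeleton evaluation. The function names and the three-vertex mesh are hypothetical, and real bakes pack the offsets into GPU texture formats rather than Python lists.

```python
def bake(rest, frames):
    """Bake each frame's per-vertex offsets from the rest pose into one 'texture' row."""
    return [
        [tuple(p - r for p, r in zip(pos, rest_pos)) for pos, rest_pos in zip(frame, rest)]
        for frame in frames
    ]


def sample(rest, texture, frame_index):
    """Mimic the runtime vertex shader: rest position + baked offset for the current (looping) frame."""
    row = texture[frame_index % len(texture)]
    return [tuple(r + o for r, o in zip(rest_pos, offset)) for rest_pos, offset in zip(rest, row)]


# Hypothetical three-vertex mesh that rises by one unit in its second frame
rest = [(0, 0, 0), (1, 0, 0), (0, 1, 0)]
frames = [rest, [(0, 0, 1), (1, 0, 1), (0, 1, 1)]]
texture = bake(rest, frames)
print(sample(rest, texture, 1))  # [(0, 0, 1), (1, 0, 1), (0, 1, 1)]
```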

Render performance in Unreal 5.4 improves thanks to refactored systems that increase parallelisation and to the addition of GPU instance culling for hardware ray tracing. This kind of culling uses the GPU instead of the CPU to exclude objects from rendering when they are occluded by other objects.

Heterogeneous Volume Rendering handles volumetric phenomena such as fire, smoke and fluids driven by Niagara Fluids or OpenVDB files. In 5.4, Heterogeneous Volumes can cast shadows and are integrated with existing rendering passes, such as those for translucent objects.

Unified control for graphics platforms

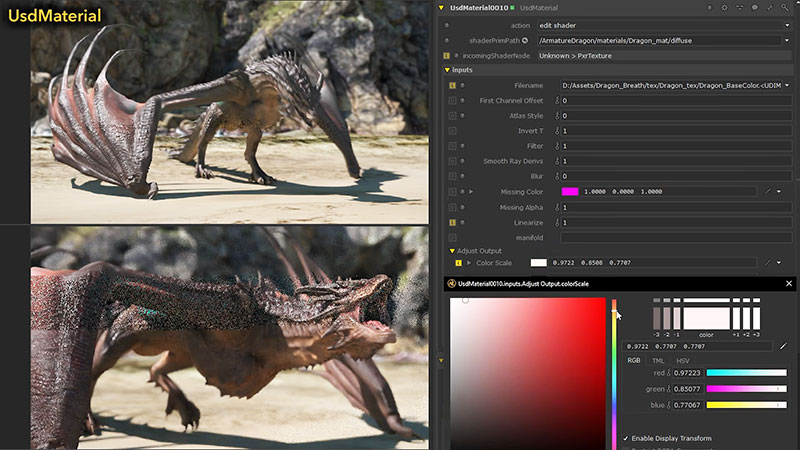

Colour Pipeline and Keying

Pixotope 24.1 simplifies setting up a colour pipeline while helping users deal with the underlying complexity of colour spaces, profiles, linear and log encoding, gamut and HDR. A checklist is now included, along with overhauled colour documentation that covers the whole production pipeline and explains key concepts.

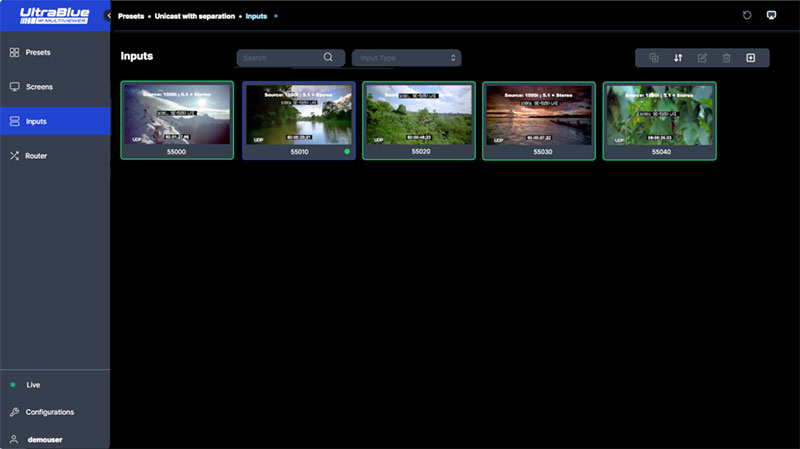

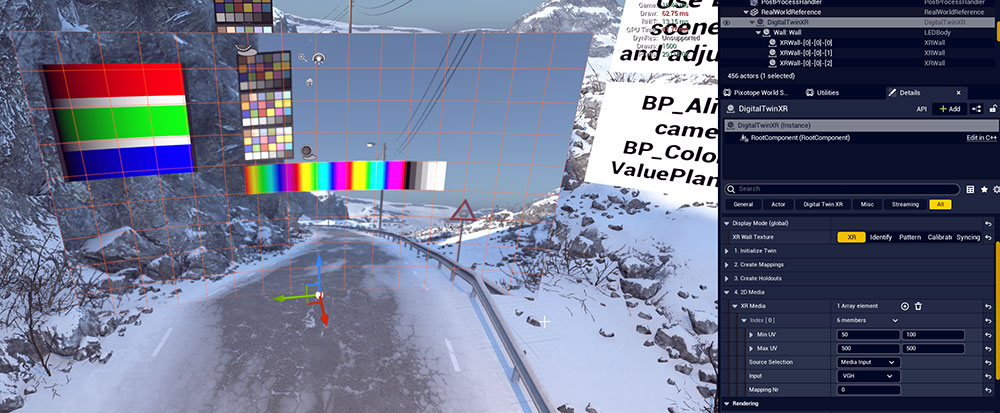

For users working with LED XR walls, media playback has been extended to include both 2D and 3D graphics, giving more flexibility to handle changing production needs.

In hybrid studios working with AR content, where shots transition between a virtual set replacing a green screen and a physical set, smooth transitions are important to the final look. The Chroma Screen Actor defines the areas where the keyer is active, making keying easier to manage and helping to monitor the quality of transitions between virtual and physical sets.

Combined with the improvements to the colour pipeline, the keyer can now be used regardless of the native colour space or the colour space you are transitioning to.
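Restricting a keyer to a defined screen area can be illustrated with a simple masked chroma key. In this hypothetical Python sketch a 2D boolean mask stands in for the screen area (the actual Chroma Screen Actor is an object in the 3D scene), and the key colour, tolerance and softness values are assumptions. Pixels outside the mask are never keyed, so a green prop on the physical set survives.

```python
def chroma_alpha(pixel, key=(0.0, 1.0, 0.0), tolerance=0.3, softness=0.2):
    """Distance-based chroma key: 0.0 = fully keyed out, 1.0 = fully opaque."""
    dist = sum((p - k) ** 2 for p, k in zip(pixel, key)) ** 0.5
    if dist <= tolerance:
        return 0.0
    if dist >= tolerance + softness:
        return 1.0
    # Linear ramp between the two thresholds for soft edges
    return (dist - tolerance) / softness


def key_image(image, screen_mask, **key_params):
    """Run the keyer only where screen_mask is True; pixels outside the screen area stay opaque."""
    return [
        [chroma_alpha(px, **key_params) if inside else 1.0 for px, inside in zip(row, mask_row)]
        for row, mask_row in zip(image, screen_mask)
    ]


# Two green pixels: one on the green screen, one on a green prop outside the screen area
image = [[(0.0, 1.0, 0.0), (0.0, 1.0, 0.0)]]
screen_mask = [[True, False]]
print(key_image(image, screen_mask))  # [[0.0, 1.0]]
```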

Motion Design

Tracking and Mapping

Pixotope can now detect and calibrate delays between video and tracking automatically: users only need to move the camera while the system calculates the delay. Because synchronising the AR and XR machines can also be a challenge, a new XR syncing display mode has been introduced to speed up the process.
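One common way to estimate such a delay, assumed here because Pixotope does not describe its method, is cross-correlation: while the camera moves, compare a motion signal from the tracking data with one measured from the video and pick the time shift at which the two line up best. This Python sketch uses hypothetical per-frame speed values and only searches for delays where the video trails the tracking data.

```python
def correlation(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = (sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b)) ** 0.5
    return num / den if den else 0.0


def estimate_delay(tracking, video, max_lag):
    """Return the lag in frames (0..max_lag) at which the video motion best matches the tracking motion."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        # Pair tracking[t] with video[t + lag] over the overlapping window
        a, b = tracking[: len(tracking) - lag], video[lag:]
        n = min(len(a), len(b))
        if n < 2:
            break
        score = correlation(a[:n], b[:n])
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


# Hypothetical camera pan speed per frame from the tracking system...
tracking = [0, 0, 1, 3, 6, 3, 1, 0, 0, 0, 0, 0]
# ...and the same move measured from the video image, arriving three frames later
video = [0, 0, 0, 0, 0, 1, 3, 6, 3, 1, 0, 0]
print(estimate_delay(tracking, video, max_lag=5))  # 3
```

The estimated lag would then be applied as a delay on the tracking data so that it lines up with the video frames.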

Making changes while working live is key functionality. To make adjustments in Pixotope more transparent and avoid inconsistencies between render engines, live changes are now stored centrally. As a result, every render engine reflects the latest changes, regardless of when the engine was added. If the workflow requires one or more render engines to use different settings, overrides can now be set up.
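The “central store plus overrides” arrangement can be sketched as layered lookups. In this hypothetical Python example (class, method and setting names are illustrative, not Pixotope’s API), every engine reads the same shared live state, so an engine that joins later still sees the latest changes, while an engine with an override gets its own value for that one setting.

```python
from collections import ChainMap


class LiveSettingsStore:
    """Hypothetical central store: shared live changes plus optional per-engine overrides."""

    def __init__(self):
        self.shared = {}     # latest live changes, applied to every engine
        self.overrides = {}  # engine name -> settings that must differ on that engine

    def set_live(self, key, value):
        self.shared[key] = value

    def set_override(self, engine, key, value):
        self.overrides.setdefault(engine, {})[key] = value

    def settings_for(self, engine):
        # Look up the engine's overrides first, then fall back to the shared live state
        return dict(ChainMap(self.overrides.get(engine, {}), self.shared))


store = LiveSettingsStore()
store.set_live("exposure", 1.0)
store.set_override("engine-b", "exposure", 0.5)
store.set_live("lens_offset", 0.02)
print(store.settings_for("engine-a"))  # {'exposure': 1.0, 'lens_offset': 0.02}
print(store.settings_for("engine-b"))  # {'exposure': 0.5, 'lens_offset': 0.02}
```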

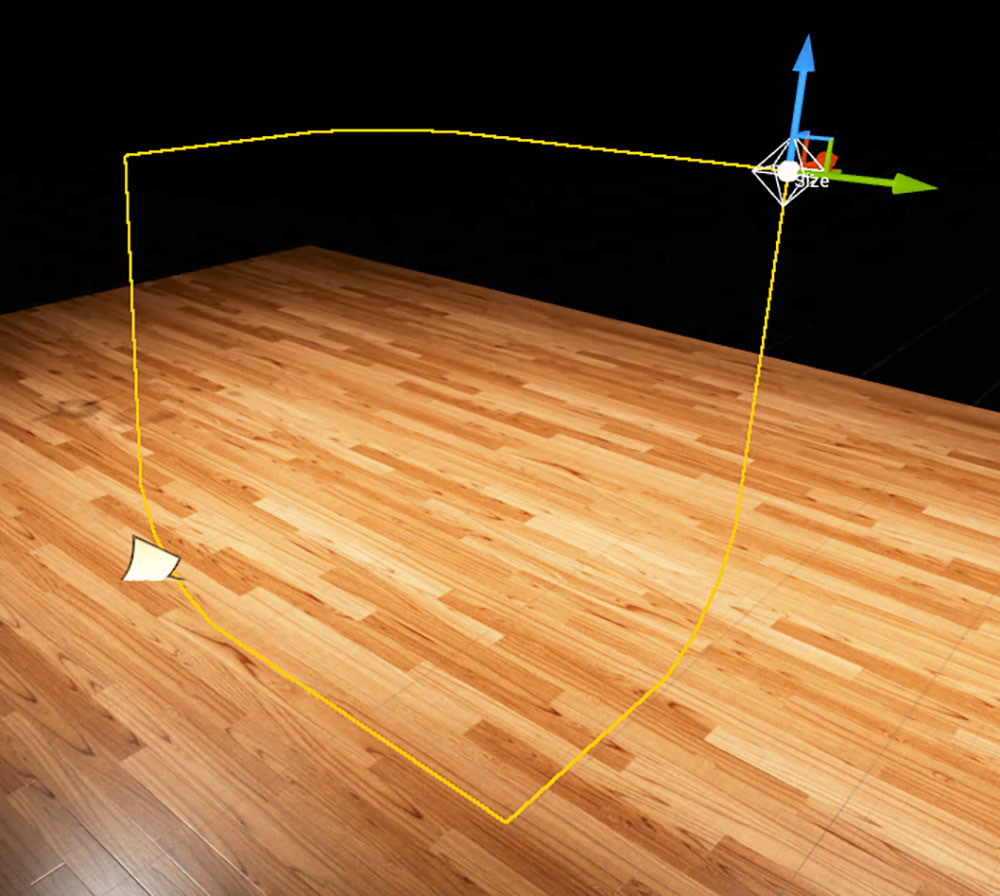

XR/Video Wall functionality has new options for mapping media inputs and generating manual twins (representations of XR walls that can be adjusted manually and visually), increasing flexibility and control over XR setups. For example, users can map media inputs directly onto specific areas of the LED wall.

When creating a digital twin from highly detailed imported geometry, you can now manually merge XR walls to reduce their number and make the manual twin of your set-up easier to handle. Where the curvature of the LED wall is irregular, the new Spline Twin tool generates a manual twin based on a spline, with granular control over its details. XR colour matching can now also limit the LED colour profile to a specific mapping.
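Building a twin from a spline can be sketched as sampling a curve along the wall’s floor line and extruding it to the wall’s height. This Python example assumes a uniform Catmull-Rom spline through hypothetical 2D control points (Pixotope does not say which spline type the Spline Twin tool uses) and returns plain vertex and quad-index lists rather than an engine mesh.

```python
def catmull_rom(p0, p1, p2, p3, t):
    """Point on a uniform Catmull-Rom segment between p1 and p2, for t in [0, 1]."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (2 * b + (-a + c) * t + (2 * a - 5 * b + 4 * c - d) * t2 + (-a + 3 * b - 3 * c + d) * t3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def spline_wall(control_points, height, samples_per_segment=8):
    """Build a vertical wall mesh (vertices + quads) that follows a 2D floor spline."""
    # Duplicate the end points so the curve starts and ends on them
    pts = [control_points[0], *control_points, control_points[-1]]
    floor = []
    for i in range(1, len(pts) - 2):
        for s in range(samples_per_segment):
            floor.append(catmull_rom(pts[i - 1], pts[i], pts[i + 1], pts[i + 2], s / samples_per_segment))
    floor.append(control_points[-1])
    # Bottom edge at z = 0, top edge at z = height
    vertices = [(x, y, 0.0) for x, y in floor] + [(x, y, height) for x, y in floor]
    n = len(floor)
    # One quad per floor step: bottom i, bottom i+1, top i+1, top i
    quads = [(i, i + 1, n + i + 1, n + i) for i in range(n - 1)]
    return vertices, quads


vertices, quads = spline_wall([(0.0, 0.0), (1.0, 1.0), (2.0, 0.0)], height=3.0)
print(len(vertices), len(quads))  # 34 16
```

Raising `samples_per_segment` gives a smoother curve at the cost of more geometry, which is the kind of detail control a spline-based twin makes easy to adjust.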

Defining an area for keying

With the Tracking Data SDI Embedder, you can embed tracking data directly into an SDI audio channel alongside the video feed. A single SDI cable can then carry both, with no separate cabling for tracking, which saves calibration time. De-embedding inside Pixotope Graphics is now supported natively.

As well as Pixotope Broadcast Graphics, two other new Pixotope products are available. To use live data from external sources, operators can use the Data Mapper to set up external sources and link them to any destination inside Pixotope without needing Blueprints. These sources can be RSS feeds, public APIs or Google Sheets.

A failover option has also been added. It lets you run an additional failover server that takes over immediately if the main one stops responding. pixotope.com
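Pixotope does not describe how the failover server detects that the main one has stopped responding; a heartbeat with a timeout is one common approach. This Python sketch is hypothetical throughout (class, timeout and server names) and deliberately never fails back automatically.

```python
import time


class FailoverMonitor:
    """Hypothetical heartbeat monitor that switches output to a standby server."""

    def __init__(self, timeout_s=1.0, clock=time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock
        self.last_heartbeat = clock()
        self.active = "main"

    def heartbeat(self):
        """Record that the main server has just responded."""
        self.last_heartbeat = self.clock()

    def check(self):
        """Hand over to the failover server once the main one has been silent longer than the timeout."""
        if self.active == "main" and self.clock() - self.last_heartbeat > self.timeout_s:
            self.active = "failover"
        return self.active


# Simulated clock so the example is deterministic
now = [0.0]
monitor = FailoverMonitor(timeout_s=1.0, clock=lambda: now[0])
now[0] = 0.5
monitor.heartbeat()
now[0] = 1.4
print(monitor.check())  # main (0.9 s since the last heartbeat)
now[0] = 1.6
print(monitor.check())  # failover (1.1 s of silence)
```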