Adobe Firefly generative AI models and features are impacting artists’ creativity and use of Creative Cloud applications, increasing efficiency and shortening the time to visualise ideas.

Adobe Firefly generative AI models and AI-powered features are impacting the use and development of Creative Cloud applications including Adobe Illustrator, Photoshop, Lightroom, Premiere Pro, After Effects and Adobe Stock, increasing artists’ efficiency and shortening the time it takes to visualise their ideas.

Following the commercial release of Generative Fill in Photoshop, Adobe is introducing other new Firefly-powered workflows on the understanding that AI is most powerful when integrated into creative workflows. These workflows are released alongside three new Adobe generative AI engines: Adobe Firefly Image 2 Model, Firefly Vector Model and Firefly Design Model.

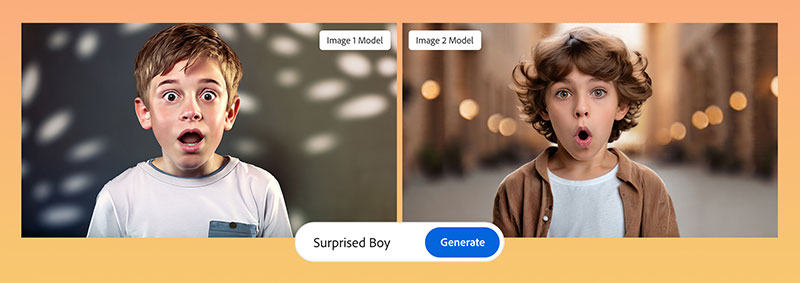

Adobe Firefly Image 2 Model

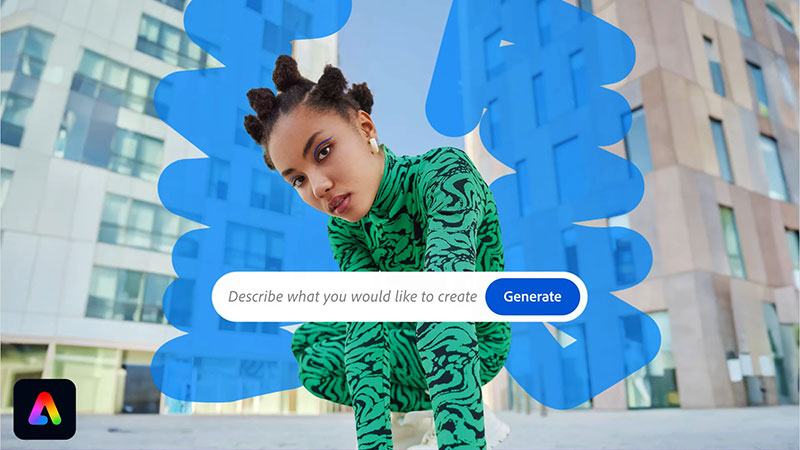

Firefly Image 2 Model updates Adobe’s generative AI for imaging, featuring changes that mainly concern creative control and image quality, including new Text to Image functionality now available in the Firefly web app. Generative Match generates content in custom, user-specified styles, Photo Settings makes photography-based image adjustments and Prompt Guidance helps users write prompts in a way that generates more satisfying results.

Firefly Image 2 also improves on the rendering quality for humans, in particular the skin, hair, eyes, hands and body structure. It makes a better selection of colours available, with high dynamic range, and gives users more control over their output. Like the initial Firefly model, Firefly Image 2 is trained on licensed content, such as Adobe Stock, and public domain content where the copyright has expired.

Style and Camera Effects

Generative Match is used to apply the style of another image, chosen by the user, to generate a series of new images. Users can either pick images from a pre-selected list or upload their own reference image to guide the style, and then generate new images through the Text to Image prompt in the Firefly web app.

This is an effective way to meet brand guidelines when a large number of assets is required. It saves time when designing from scratch by replicating the style of an existing image, and then maintains the look consistently across the assets. When users upload a reference image, Generative Match shows an in-app message requiring them to confirm they have the rights to use it and to agree to Adobe’s Terms of Use. Adobe stores a thumbnail of the reference image on its servers.

Producing and refining a lens blur and bokeh in Lightroom.

The Photo Settings in Firefly Image 2 can be used to enhance photorealistic qualities in images with high-fidelity details such as skin pores and foliage, and to gain greater control over depth of field, motion blur, field of view and generation style. Users can apply and adjust photo settings in a manner similar to working with manual camera lens controls, helping creators achieve their visions while saving time and resources.

Photo Settings can also be approached through modes. Auto Mode will now automatically select either ‘photo’ or ‘art’ as your image generation style, and then apply appropriate photo settings during prompting, assuring you of the right kind of results without trial and error.

Communicate with Firefly

Firefly Image 2, which supports over 100 languages, is now better equipped to understand text prompts and will recognise more landmarks and cultural symbols. However, it’s worth noting that these capabilities now work both ways. That is, users can receive suggestions from the model for improving prompts and auto-completion of prompts. While suggestions are intended to inspire new creations, reduce the need for re-generation and help generate attractive content faster, they are optional.

Prompt Guidance is a new feature that ‘teaches’ users to expand or reword prompts in a way that is likelier to arrive at the creator’s intended result. Conversely, in the Text to Image module in the Firefly web app, users can specify elements, such as key terms, colours or shapes, to exclude from image generations.

Users can share and save images directly from Firefly, along with the prompts behind the images they like, for further fine-tuning. In this way, with Share from Firefly, users can share their work and, at the same time, help others understand how to create a specific image.

Save to Library is a little different in that it facilitates cross-application workflows – users can save a Firefly file to Creative Cloud Libraries and then reopen it within other apps. This allows Firefly-generated outputs to be saved in a shared library for other people to open and use.

Changing aspect ratio with Generative Expand.

Adobe says that users have generated over 3 billion images with Firefly’s initial model since its beta launch in March. However, since 90% of Firefly web app users are new to Adobe, it’s hard to anticipate what trends the usage will follow from here.

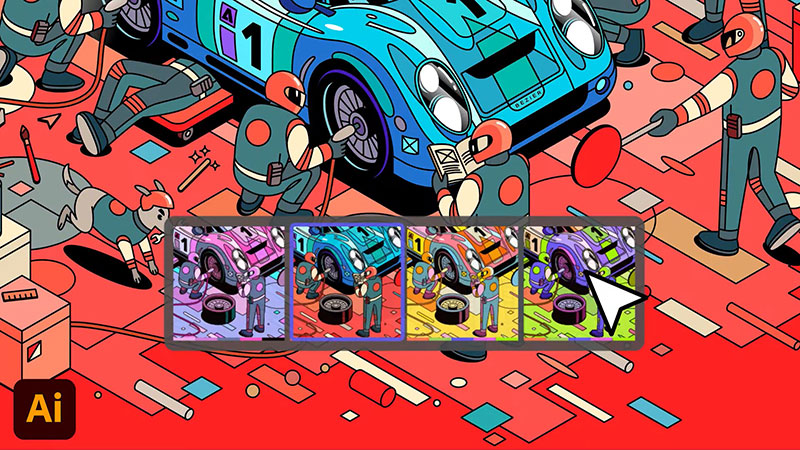

Firefly Vector Model

Firefly Vector Model is new and stands out as a generative AI model for vector graphics and pattern outputs. It expands Firefly into a new media type, making the new Text to Vector Graphic workflow possible in Illustrator. Now available in beta, Text to Vector Graphic generates a wide variety of vector graphics from regular text prompts. Based on the user’s short description of the desired subject, scene, icon or pattern, Illustrator generates multiple variations to explore. The outputs are compact and organised into groups, making them easier to edit and reuse for marketing and advertising graphic creation, inspiration and mood boards.

When the vector graphic is generated, all of the elements are logically grouped and layered. Artwork of a tree, for example, will automatically group all the related paths making up the tree, making it convenient for users to tweak, edit and re-use the final vector output. The vectorised patterns from the model can be tiled seamlessly and repeated infinitely without visible gaps or overlaps between the repeats. High-quality vector curves in the output are smooth and precise, with attention to detail. The junctions where multiple curves intersect are handled intelligently, as they occur.

Use text to generate vector graphics.

Like Firefly Image 2 Model, the Vector Model also has a Generative Match feature to make sure that the vectors you are creating for an artboard or project all match the existing style, and it allows you to produce consistent specialised content.

Traditionally, gradients have been a challenge when creating vectors, but the model’s new engine generates editable vector gradients using much simpler geometry than the usual method of creating a series of solid-filled paths to depict the same image effect.

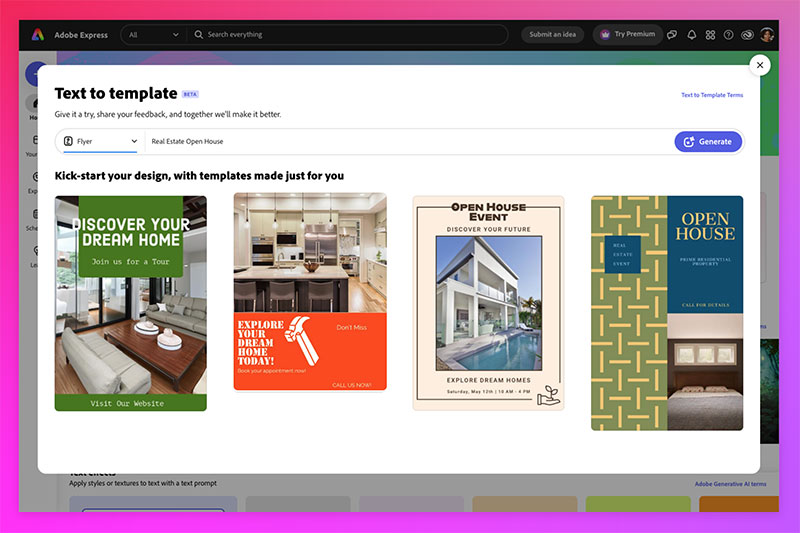

Firefly Design Model

The new Firefly Design Model is the engine behind the new Text to Template workflow in Adobe Express, used to instantly generate template designs by combining its layout system with the Firefly Image Model, Adobe Stock and Adobe Fonts. For instance, users can generate images in Firefly and then carry over prompts to continue refining in Express, using Firefly-powered Text Effects to enhance titles. The templates can suit different aspect ratios, are fully editable in Express and can be used for print, social and web advertising.

AI-generated templates, and the ability to use auto-generated text and images, save users time sifting through the thousands of Express templates and help identify layouts that will work best for a project. From there, users can generate text and images using prompts, while choosing the best text size, colour and position, and then crop, replace or rotate images as needed. All of these features can help artists develop an efficient approach to personalised marketing, which remains a challenge.

AI APIs

Adobe and NVIDIA recently announced plans to make Firefly available as APIs in NVIDIA Omniverse, a platform for Universal Scene Description (OpenUSD) workflows, enabling developers and designers to simplify and accelerate their workflows.

More AI Workflows

Returning to the idea that AI is most powerful when integrated into creative workflows, Adobe has developed AI-driven functionality for several of its applications.

As well as the new Firefly-powered Text to Vector Graphic workflow beta in Adobe Illustrator and Text to Template in Express, both described above, Adobe introduced Generative Fill in the Photoshop beta in May 2023 to make it easier for artists to add, remove or expand content in images, directly in Photoshop, with text prompts in natural language. The canvas can be expanded and aspect ratios changed with Generative Expand, also powered by Firefly.

In Adobe Lightroom, the new Lens Blur adds an aesthetic blur effect to a photograph, without first shooting with a specialised camera lens. The user adjusts the depth in the photo by making a depth map, using Adobe Sensei. Once the blur is applied, you can adjust the strength of the effect, select a Bokeh effect or adjust the brightness of out-of-focus light sources. You can also leave the focus on the subject, or change it to another point.

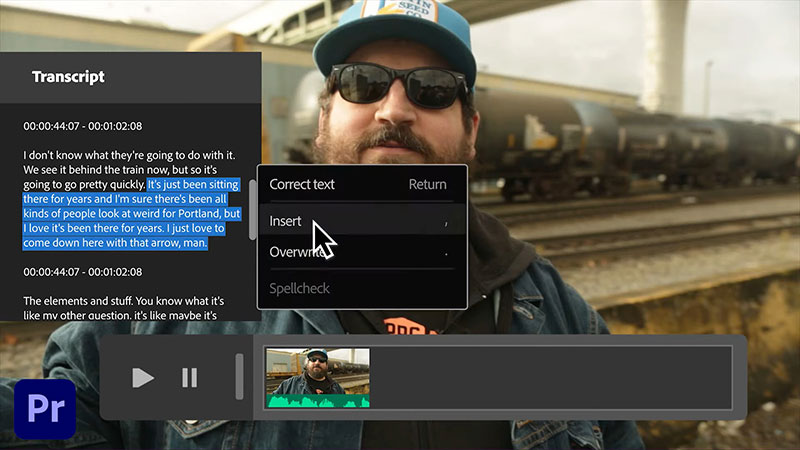

AI-powered Text-Based Editing in Adobe Premiere Pro starts with generating a transcript of the source media and then adding video clips to the timeline by locating and selecting them within the transcript.
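The transcript-driven selection described above can be pictured as a lookup from words to timecodes. The following is a minimal sketch under stated assumptions, not Premiere Pro's implementation or API: the `Word` record, the `clip_range_for_phrase` helper and the sample timings are all hypothetical, and it assumes each transcribed word carries a start and end time in seconds.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Word:
    """One transcribed word with its position in the source media (hypothetical structure)."""
    text: str
    start: float  # seconds from the start of the clip
    end: float    # seconds from the start of the clip


def clip_range_for_phrase(transcript: List[Word], phrase: str) -> Tuple[float, float]:
    """Return (in_point, out_point) for the first occurrence of `phrase` in the transcript."""
    # Normalise case and trailing punctuation so "show." matches "show".
    target = [w.strip(".,!?") for w in phrase.lower().split()]
    if not target:
        raise ValueError("phrase must contain at least one word")
    words = [w.text.lower().strip(".,!?") for w in transcript]
    for i in range(len(words) - len(target) + 1):
        if words[i:i + len(target)] == target:
            # The clip runs from the first matched word's start to the last one's end.
            return transcript[i].start, transcript[i + len(target) - 1].end
    raise LookupError(f"phrase not found in transcript: {phrase!r}")


# Usage: selecting "the show" in the transcript yields the in and out points to cut.
transcript = [
    Word("Welcome", 0.0, 0.4),
    Word("to", 0.4, 0.5),
    Word("the", 0.5, 0.6),
    Word("show.", 0.6, 1.1),
]
in_point, out_point = clip_range_for_phrase(transcript, "the show")
```

The point of the sketch is only that selecting text is equivalent to selecting a time range, which is what lets an editor assemble a rough cut by reading rather than scrubbing.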

For After Effects users, separating a foreground object from a background is a key function – when you have created a matte that isolates an object, you can replace the background, selectively apply effects to the foreground and so on. To do this automatically, artists can use Roto Brush to draw strokes onto the foreground and background elements. After Effects then uses that information to create a segmentation boundary between the foreground and background elements.
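What a matte makes possible can be shown with the standard compositing formula, output = matte × foreground + (1 − matte) × background. The sketch below is a generic illustration of that formula, not how After Effects or Roto Brush is implemented; the function name `composite_with_matte` and the small greyscale "images" are hypothetical.

```python
from typing import List

Image = List[List[float]]  # greyscale image as rows of pixel values in [0, 1]


def composite_with_matte(foreground: Image, background: Image, matte: Image) -> Image:
    """Blend foreground over background; matte 1.0 keeps foreground, 0.0 shows background."""
    if not (len(foreground) == len(background) == len(matte)):
        raise ValueError("all inputs must have the same number of rows")
    result: Image = []
    for fg_row, bg_row, m_row in zip(foreground, background, matte):
        if not (len(fg_row) == len(bg_row) == len(m_row)):
            raise ValueError("all inputs must have the same row length")
        # Per-pixel linear blend weighted by the matte value.
        result.append([m * fg + (1.0 - m) * bg for fg, bg, m in zip(fg_row, bg_row, m_row)])
    return result


# Usage: a white foreground over a black replacement background.
# The first pixel is fully inside the matte, the second is a soft edge.
foreground = [[1.0, 1.0]]
new_background = [[0.0, 0.0]]
matte = [[1.0, 0.25]]
frame = composite_with_matte(foreground, new_background, matte)
```

Soft matte values between 0 and 1 are what allow fine edges such as hair or semi-transparent areas to blend into a new background instead of showing a hard outline.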

The most recent version of Roto Brush is Next-Gen Roto Brush, powered by a new AI model. It is more precise when selecting and tracking objects in footage over time and space, which makes masking complex objects like overlapping limbs, hair and transparencies quicker and simpler. Text-Based Editing in Premiere Pro and Roto Brush in After Effects are now generally available following their beta stages earlier in the year.

www.adobe.com