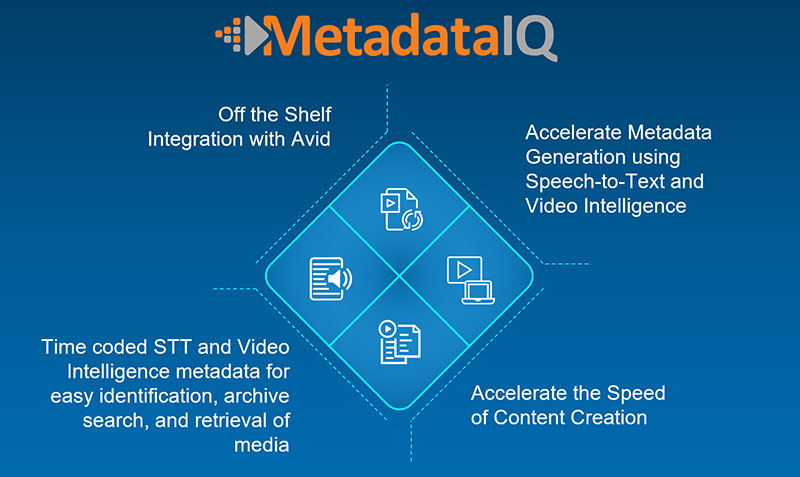

All Avid Media Composer/MCCUX users are now able to employ MetadataIQ to extract media and insert speech-to-text and video intelligence metadata as markers within an Avid timeline.

Digital Nirvana MetadataIQ, a SaaS-based tool that automatically generates speech-to-text and video intelligence metadata, now supports Avid CTMS APIs. As a result, video editors and producers can use MetadataIQ to extract media directly from Avid Media Composer or Avid MediaCentral Cloud UX (MCCUX) rather than having to connect first with Avid Interplay, which Avid has discontinued.

This capability will help broadcast networks, post production houses, sports organisations, houses of worship and other Avid users that do not have Interplay in their environments to benefit from MetadataIQ.

Speech-to-Text and Video Intelligence Metadata

Previously, only Avid Interplay users were able to employ MetadataIQ to extract media and insert speech-to-text and video intelligence metadata as markers within an Avid timeline. Now, all Avid Media Composer/MCCUX users will be able to do this. They will also be able to use MetadataIQ to ingest different types of metadata, such as speech-to-text, facial recognition, OCR, logos and objects. Each type will have customisable marker durations and colour codes to help with identification.

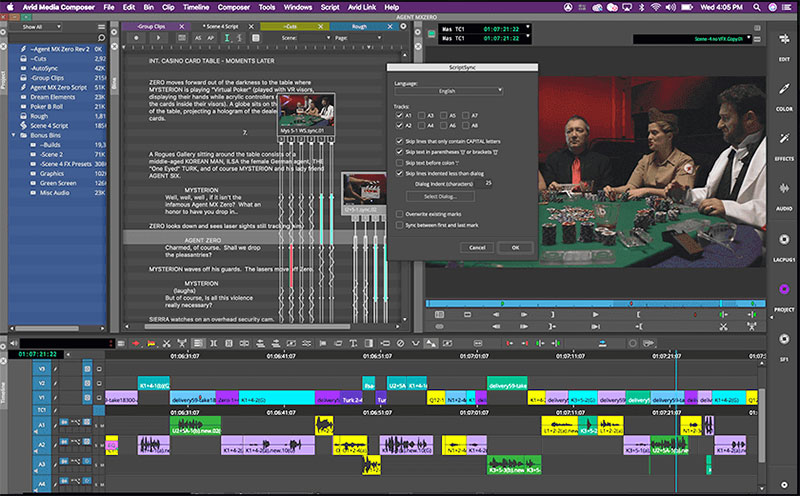

Users will be able to submit files without creating low-res proxies or manually importing metadata files into Media Composer/MCCUX, and to submit media files automatically to Digital Nirvana’s transcription and caption service to receive high-quality, human-curated output. Submitting data from MCCUX into Digital Nirvana’s Trance will also be possible. Trance generates transcripts, captions and translations in-house and publishes files in all industry-supported formats.

These capabilities are expected to greatly improve workflows for Media Composer or MCCUX users. For example, raw camera feeds and clips can be ingested to create speech-to-text and video intelligence metadata, which editors can consume in real time if required. Editors can type a search term within Media Composer or MCCUX, identify the relevant clip and start work. For certain shows – such as reality TV, on-street interviews and so on – the machine-generated or human-curated transcripts can be used in the script-generation process.

Post Production

Post production teams can submit files directly from the existing workflow to Digital Nirvana to generate transcripts, closed captions and subtitles, and translations. Then the team can either receive the output as sidecar files or ingest it directly back into Avid MCCUX as timeline markers.

Avid Media Composer Ultimate

If the post production team includes in-house transcribers, captioners or translators, editors can automatically route the media asset from Avid to MetadataIQ to create a low-res proxy, generate speech to text, and present it to the in-house team in Digital Nirvana’s Trance interface. There, users get support from artificial intelligence and machine learning for efficient captioning and translation.

With timecoded logo detection metadata, sales teams can get a clearer picture of the total screen presence of each sponsor and advertiser. For VOD and content repurposing, the video intelligence metadata helps to accurately identify advertising spots and helps with further brand/product placement and replacement.

www.digital-nirvana.com