NVIDIA EGX Platform Brings AI Applications to the Edge with Kubernetes

New 5G infrastructure gives organisations an opportunity to take AI models outside of data centres to the edge.

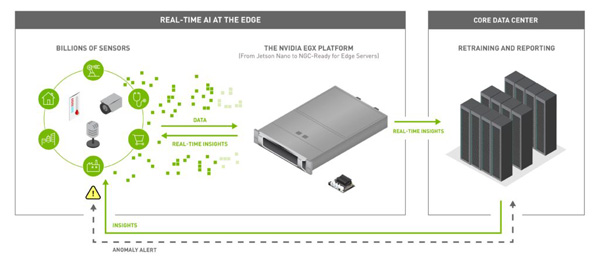

Organisations that have started using AI engines to solve problems, answer questions and run searches also need to decide where to deploy their AI models to get the best performance. According to NVIDIA, the convergence of AI, the Internet of Things and the new 5G infrastructure gives teams an opportunity to take their models outside of data centres to the edge, where billions of sensors are collecting and streaming data, so that results can be produced in real time.

Large enterprises deploying AI workloads at scale are using a combination of on-premises data centres and the cloud for compute power. But deploying workloads of this size at the edge, for example, in retail shops or parking garages, can be very challenging if the kind of IT expertise found in data centres is not available.

The Kubernetes open-source system for handling containerised applications can help by removing many of the manual processes involved in deploying, managing and scaling applications. It groups the containers that make up an application into logical units to make management and discovery easier. It also gives companies a cloud-native way to deploy applications consistently across on-premises, edge and cloud environments.
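As a rough illustration of how Kubernetes groups containers into a logical unit, the sketch below builds a minimal Deployment manifest in Python and prints it as JSON, which Kubernetes accepts alongside YAML. The application name `edge-inference` and the image path are hypothetical placeholders, not anything named by NVIDIA or Red Hat.

```python
import json


def make_deployment(name, image, replicas):
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    # The same label ties the Deployment, its selector and its pods together,
    # which is what lets Kubernetes manage and discover them as one unit.
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical application name and image, for illustration only.
manifest = make_deployment("edge-inference", "example.com/edge-inference:1.0", replicas=3)
print(json.dumps(manifest, indent=2))
```

The same manifest could in principle be applied unchanged to an on-premises, edge or cloud cluster, which is the consistency the paragraph above describes.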

Simplifying AI Applications

However, setting up Kubernetes clusters to manage hundreds or thousands of applications across remote locations can be cumbersome in itself, especially when specialists are not readily available at every edge location. NVIDIA aims to address these challenges through the NVIDIA EGX Edge Supercomputing Platform.

NVIDIA EGX is a cloud-native, software-defined platform designed to make large-scale hybrid-cloud and edge operations possible and efficient. Within the platform is the EGX stack, which includes an NVIDIA driver, Kubernetes plug-in, NVIDIA container runtime and GPU monitoring tools, delivered through the NVIDIA GPU Operator.

The code in this operator defines workflows and other information the Kubernetes system needs to automate lifecycle management of containerised applications. NVIDIA, Red Hat and others in the cloud-native community have collaborated on the GPU Operator, a cloud-native method of standardising and automating the deployment of all necessary components for GPU-enabled Kubernetes systems.

The GPU Operator also allows IT teams to manage remote GPU-powered servers in the same way they manage CPU-based systems, reaching a pool of remote systems with a single configuration file and running edge AI applications without experts on-site.
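The article does not show what such a configuration looks like, so the following is only a sketch. It assumes the cluster's NVIDIA device plug-in advertises GPUs under the resource name `nvidia.com/gpu`, which is the usual convention, and it uses a hypothetical pod name and image. The point is that a GPU is requested in the same `resources` section as CPU and memory.

```python
import json


def make_gpu_pod(name, image, gpus=1):
    """Build a minimal Pod manifest that requests GPUs next to CPU and memory."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "OnFailure",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "resources": {
                        "limits": {
                            "cpu": "2",
                            "memory": "4Gi",
                            # Assumption: GPUs are exposed by the NVIDIA
                            # device plug-in under this resource name.
                            "nvidia.com/gpu": gpus,
                        }
                    },
                }
            ],
        },
    }


# Hypothetical pod name and image, for illustration only.
pod = make_gpu_pod("edge-ai-job", "example.com/edge-ai-job:1.0", gpus=1)
print(json.dumps(pod, indent=2))
```

With the driver, container runtime and plug-in already installed on each node, the scheduler can place a pod like this on any node with a free GPU, so no per-site manual setup is implied by the manifest itself.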

NVIDIA Aerial (described below) runs on the NVIDIA EGX platform.

Red Hat and NVIDIA

The GPU Operator grew out of NVIDIA’s and Red Hat’s initial collaboration to accelerate adoption of Kubernetes in enterprise data centres. The two companies are now extending that work to deliver high-performance, software-defined 5G wireless infrastructure, running on Red Hat OpenShift, to the telecom industry.

The collaboration, announced by NVIDIA founder and CEO Jensen Huang during his keynote at Mobile World Congress in Los Angeles, can help telcos transition to 5G networks capable of running various software-defined edge workloads. Their work will initially focus on 5G software-defined wireless radio access networks (RAN), intended to make AI-enabled applications more accessible at the telco edge.

Their collaboration means that customers can use the NVIDIA EGX platform and Red Hat OpenShift to more easily deploy NVIDIA GPUs to accelerate AI, data science and machine learning at the edge. Red Hat OpenShift supplies enterprise-grade, production-ready Kubernetes systems to manage and automate the Aerial 5G RAN, container network functions and other new edge services.

Low-latency AR/VR streaming over 5G networks to devices.

“The next generation of mobile networks won’t be defined by inflexible, proprietary solutions — it will be founded in open cloud-native technologies,” said Jim Whitehurst, president and CEO of Red Hat. “With our experience in building open telecommunications infrastructure, we see a tremendous advantage to running standardised software at the telco edge, helping to enable a range of new workloads via dynamically scalable services.”

By running 5G RANs on a cloud-native platform, telcos can use their network investments more efficiently and bring new services, such as AI, AR, VR and gaming, to a wider market. Because traditional systems could not be reconfigured this quickly, a high-performance network architecture of this kind can make a significant difference to telco operators' flexibility and their ability to differentiate themselves.

In these ways, the 5G virtualised RANs that run in the wireless infrastructure closest to customers are critical to building a 5G infrastructure capable of running applications that are dynamically provisioned on a common platform, and are suited to offering AI services at the edge.

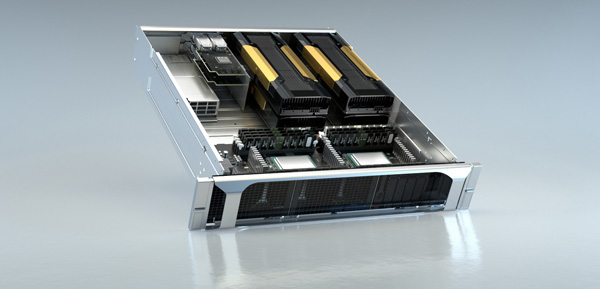

EGX hardware

NVIDIA Aerial: Accelerating 5G on NVIDIA GPUs

Another new element NVIDIA has developed to help 5G operators move to cloud-native infrastructure is Aerial, a software development kit for providers who want to build and deliver their own 5G wireless RANs. The kit comprises two parts: a low-latency data path directly from Mellanox network interface cards to GPU memory, and a 5G physical layer signal-processing engine that keeps all data within the GPU’s high-performance memory.

The result is two Aerial SDKs, CUDA Virtual Network Function (cuVNF) and CUDA Baseband (cuBB), which simplify building highly scalable, programmable, software-defined 5G RANs using off-the-shelf servers with NVIDIA GPUs.

The NVIDIA cuVNF SDK optimises input/output and packet processing, sending 5G packets directly to GPU memory from GPUDirect-capable network interface cards.

The NVIDIA cuBB SDK establishes a GPU-accelerated 5G signal processing pipeline, including cuPHY for the 5G Layer 1 (PHY), and achieves very high throughput and efficiency by keeping all physical layer processing within the GPU’s high-performance memory.

The NVIDIA Aerial SDK runs on the NVIDIA EGX stack, bringing GPU acceleration to Kubernetes infrastructure. To simplify the management of GPU-enabled servers, telcos can install all required NVIDIA software as containers that run on Kubernetes.

NVIDIA Aerial is available now to early access partners, and general availability is expected by the end of 2019. www.nvidia.com