NVIDIA Omniverse, the scalable USD-based platform for virtual world developers, now has an Avatar Cloud Engine, an AI-powered 3D search engine, updated PhysX and MDL, and other upgrades.

NVIDIA has released a new range of developer frameworks, tools, apps and plugins for NVIDIA Omniverse, the scalable computing platform for building and connecting virtual worlds based on Universal Scene Description (USD).

The new software includes several AI-powered tools and features that make it easier for artists, developers and engineers to build virtual content and to connect directly to mainstream 3D applications, including PTC Creo, SideFX Houdini and Unity, as well as software from the Siemens Xcelerator platform, an open business portfolio of IoT-enabled hardware and software supporting digital transformation.

Omniverse, a multi-GPU platform, is currently used by around 700 companies worldwide to enhance architectural and product design, simplify VFX workflows and build digital twins of factories, cities and the planet. The idea is that when physically accurate virtual representations, scenes and 3D models are built and operated in Omniverse from the data centre, project teams can collaborate and communicate effectively wherever they are located. The expected benefits are greater innovation, creativity and efficiency.

Describing the challenge of making this kind of work possible, Rev Lebaredian, vice president of Omniverse and simulation technology at NVIDIA, said, “The metaverse is an opportunity that organisations know they can’t ignore, but many struggle to see a clear path forward for how to engage with it. NVIDIA Omniverse closes the gap between the physical and virtual worlds.”

New Tools and Frameworks on the Platform


Avatar Cloud Engine

NVIDIA’s main new release is the Omniverse Avatar Cloud Engine (ACE), a suite of cloud-native AI models and services for building and deploying lifelike virtual assistants and digital humans. The avatars are suitable for games, chatbots, digital twins, virtual worlds and similar applications.

ACE is built on NVIDIA’s Unified Compute Framework, which gives users access to the software and APIs needed for the many skills involved in creating highly realistic, interactive avatars. These include NVIDIA Riva for developing speech AI applications, Metropolis for computer vision and intelligent video analytics, Merlin for high-performing recommender systems, NeMo Megatron for large language models with natural language understanding, and NVIDIA Omniverse for AI-enabled animation.

Using the framework mainly involves combining optimised microservices into real-time AI applications. Each microservice has a context, such as vision or conversational AI, data analytics or graphics rendering, and can be independently managed and deployed within the application. Because each context is abstracted from the application, developers do not need prior expertise in that context or in the underlying platform.
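To make the composition idea concrete, here is a minimal Python sketch under stated assumptions. It is not the Unified Compute Framework API: the `Microservice` class, the service names and the placeholder functions are all hypothetical, and real services would be separately deployed network endpoints rather than local functions. Each one wraps a single context behind the same `handle` interface, and the application simply chains them.

```python
from dataclasses import dataclass
from typing import Callable, Dict

Message = Dict[str, object]

@dataclass
class Microservice:
    """Hypothetical stand-in for one independently deployed service."""
    name: str
    context: str  # e.g. "conversational AI" or "graphics rendering"
    handle: Callable[[Message], Message]

def transcribe(msg):
    # Placeholder for a speech-AI service; a string stands in for audio here.
    return {**msg, "text": msg["audio"].lower()}

def respond(msg):
    # Placeholder for a language-model service.
    return {**msg, "reply": "You said: " + msg["text"]}

def animate(msg):
    # Placeholder for an animation service: one "frame" per word of the reply.
    return {**msg, "frames": len(msg["reply"].split())}

def run(pipeline, msg):
    """The application relies only on each service's handle() interface."""
    for service in pipeline:
        msg = service.handle(msg)
    return msg

pipeline = [
    Microservice("asr", "conversational AI", transcribe),
    Microservice("chat", "conversational AI", respond),
    Microservice("face", "graphics rendering", animate),
]
result = run(pipeline, {"audio": "HELLO AVATAR"})
print(result["reply"], result["frames"])  # You said: hello avatar 4
```

Replacing the `chat` entry with a different implementation leaves the other services and `run` untouched, which is the kind of independence the paragraph describes.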

Other platform updates apply to Omniverse Kit, the toolkit for building native Omniverse extensions and applications. They include major updates to PhysX, the physics simulation engine used in applications on hardware ranging from smartphones to multicore CPUs and GPUs. Omniverse now supports real-time, multi-GPU, scalable soft body and particle cloth simulation, improving the physical accuracy of virtual worlds and objects.
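PhysX's solvers are far more sophisticated than anything shown here, but the general idea behind particle-based cloth can be sketched: cloth is modelled as particles joined by distance constraints, each step advances the particles with Verlet integration, and repeated constraint passes pull neighbours back to their rest spacing. The sketch below is a generic, single-threaded illustration on a one-dimensional strand (one row of a cloth grid); the function names and parameters are hypothetical and are not the PhysX API.

```python
import math

def verlet_step(pos, prev, pinned, dt, gravity=-9.81):
    """Advance every free particle with position Verlet; pinned ones stay put."""
    new_pos = []
    for i, ((x, y), (px, py)) in enumerate(zip(pos, prev)):
        if i in pinned:
            new_pos.append((x, y))
        else:
            # x_next = 2x - x_prev + a*dt^2, with gravity acting on y only
            new_pos.append((2 * x - px, 2 * y - py + gravity * dt * dt))
    return new_pos, pos  # current positions become the previous state

def satisfy_constraints(pos, links, pinned, iterations=20):
    """Repeatedly nudge linked particles back towards their rest length."""
    pos = list(pos)
    for _ in range(iterations):
        for a, b, rest in links:
            (ax, ay), (bx, by) = pos[a], pos[b]
            dx, dy = bx - ax, by - ay
            dist = math.hypot(dx, dy) or 1e-9  # guard against coincident particles
            diff = (dist - rest) / dist
            # Share the correction equally, or give all of it to the free end.
            wa = 0.0 if a in pinned else (1.0 if b in pinned else 0.5)
            wb = 0.0 if b in pinned else (1.0 if a in pinned else 0.5)
            pos[a] = (ax + dx * diff * wa, ay + dy * diff * wa)
            pos[b] = (bx - dx * diff * wb, by - dy * diff * wb)
    return pos

def simulate(n=5, rest=1.0, steps=30, dt=1 / 60):
    """Drop a horizontal strand of n particles that is pinned at its left end."""
    pos = [(i * rest, 0.0) for i in range(n)]
    prev = list(pos)  # equal to pos, so every particle starts at rest
    pinned = {0}
    links = [(i, i + 1, rest) for i in range(n - 1)]
    for _ in range(steps):
        pos, prev = verlet_step(pos, prev, pinned, dt)
        pos = satisfy_constraints(pos, links, pinned)
    return pos, links

pos, links = simulate()
for x, y in pos:
    print(f"({x:.2f}, {y:.2f})")
```

Velocity is implicit in the difference between the current and previous positions, so constraint corrections automatically feed into the next step without a separate velocity update.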


Kit also gains new OmniLive workflows, an overhaul of USD-based collaboration in Omniverse that improves the speed and performance of 3D workflows across applications and enables non-destructive USD workflows, making collaboration between artists and developers easier.
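In USD, a non-destructive edit is authored as an opinion in a stronger layer that overrides, rather than overwrites, the opinion in a weaker layer. The snippet below is only an analogy built on Python's `collections.ChainMap`; it is not USD's composition engine or the OmniLive implementation, and the attribute paths are hypothetical. Lookups resolve from the strongest layer first, writes land only in the strongest layer, and dropping a layer restores the values beneath it.

```python
from collections import ChainMap

# Weakest layer: the original asset, which no collaborator edits directly.
base_layer = {"/World/Chair.color": "oak", "/World/Chair.height": 0.9}

# Stronger layers hold only the opinions each collaborator overrides.
developer_layer = {"/World/Chair.height": 1.0}
artist_layer = {"/World/Chair.color": "walnut"}

# ChainMap looks keys up in order, so earlier maps act as stronger layers.
stage = ChainMap(artist_layer, developer_layer, base_layer)

print(stage["/World/Chair.color"])   # walnut (artist's opinion wins)
print(stage["/World/Chair.height"])  # 1.0 (developer's opinion wins)

# Writes go only to the first (strongest) map, so base_layer stays intact.
stage["/World/Chair.height"] = 1.1
print(artist_layer)  # {'/World/Chair.color': 'walnut', '/World/Chair.height': 1.1}
print(base_layer)    # {'/World/Chair.color': 'oak', '/World/Chair.height': 0.9}

# Muting the artist's layer reverts to the weaker opinions.
muted = ChainMap(developer_layer, base_layer)
print(muted["/World/Chair.color"])  # oak
```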

A new AI tool in beta, Omniverse Audio2Face, creates facial animation directly from an audio file. It gives users working with AI-driven animation the ability to infer emotion from a voice and generate matching, realistic expressions, animating all facial features. You begin by animating NVIDIA’s preloaded 3D character model with your audio track, and then retarget the results to your own characters.
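Audio2Face uses AI models to infer expressions, which a few lines of code cannot reproduce. The basic shape of the problem can still be illustrated, though: audio samples in, one animation value per frame out. The sketch below is a deliberately naive, non-AI baseline that maps the loudness (RMS) of each frame's audio to a hypothetical 0 to 1 "jaw open" weight; the function name, frame rate and synthetic test tone are assumptions for illustration only.

```python
import math

def jaw_open_curve(samples, sample_rate, fps=30):
    """Map the loudness of each animation frame's audio to a 0..1 jaw-open weight.

    samples: mono audio as floats in [-1, 1]. A real pipeline would first decode
    the audio file (for example with the standard-library wave module).
    """
    window = sample_rate // fps  # audio samples per animation frame
    rms_per_frame = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        rms_per_frame.append(math.sqrt(sum(s * s for s in chunk) / window))
    peak = max(rms_per_frame, default=0.0) or 1.0  # avoid dividing by zero on silence
    return [round(v / peak, 3) for v in rms_per_frame]

rate = 16_000
# One second of a 220 Hz tone that fades in, standing in for recorded speech.
tone = [(i / rate) * math.sin(2 * math.pi * 220 * i / rate) for i in range(rate)]
weights = jaw_open_curve(tone, rate)
print(len(weights))  # 30 frames for one second at 30 fps
print(weights[:5], weights[-5:])
```

A loudness curve like this only opens and closes the mouth; it carries no information about phonemes or emotion, which is exactly the gap a trained model is meant to fill.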

Omniverse Machinima is an app for building 3D cinematics and animated films while collaborating in real time to manipulate characters and environments inside virtual worlds. It includes tools for rendering, materials and lighting, VFX and motion capture, plus AI animation tools like Audio2Face (above) and Audio2Gesture, an AI-based tool that generates realistic arm and body motion from an audio file.

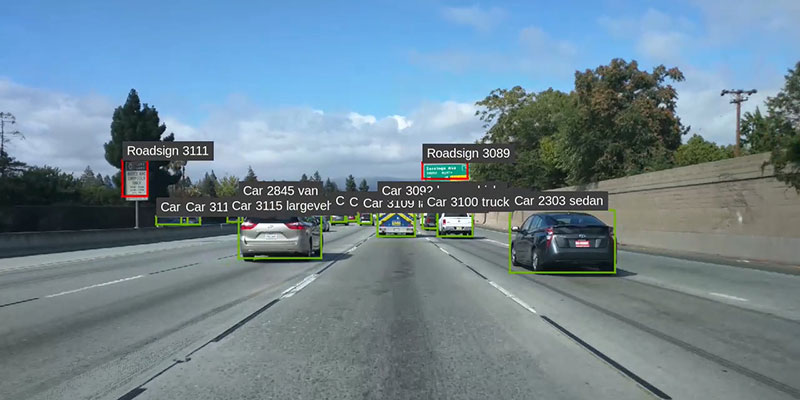

For Omniverse Enterprise teams, Omniverse DeepSearch is now available. It uses AI to search massive, untagged databases of 3D and visual assets more accurately with natural-language queries. Game developers and VFX studios with hundreds of thousands of untagged assets to search through are examples of teams that stand to benefit.
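The article does not describe DeepSearch's internals. A common general approach to natural-language search over untagged assets is to embed the query and each asset in a shared vector space and rank by cosine similarity. The sketch below shows only that ranking step; the asset names and the three-dimensional vectors are made up and stand in for the output of a real text and image embedding model.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def search(query_vec, asset_vecs, top_k=2):
    """Return the top_k asset names ranked by similarity to the query."""
    ranked = sorted(asset_vecs, key=lambda name: cosine(query_vec, asset_vecs[name]), reverse=True)
    return ranked[:top_k]

# Hypothetical embeddings; a real system would compute these with a model.
assets = {
    "crate_017.usd": [0.9, 0.1, 0.0],
    "oak_tree_03.usd": [0.1, 0.9, 0.2],
    "pine_tree_11.usd": [0.0, 0.8, 0.5],
    "street_lamp.usd": [0.2, 0.0, 0.9],
}
query = [0.05, 0.85, 0.35]  # stands in for the embedding of "a tree"
print(search(query, assets))
```

Because the ranking needs no tags, assets become searchable as soon as they have been embedded; at the scale of hundreds of thousands of assets, a production system would typically swap the linear scan for an approximate nearest-neighbour index.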


Working with Modulus

The NVIDIA Modulus physics machine-learning framework is now available as an Omniverse extension. NVIDIA says physics-ML models trained with Modulus can achieve near-real-time performance, depending on the application, with accuracy approaching that of high-fidelity simulations. Its main value lies in its generality, meaning it can be applied to domains and use cases ranging from engineering simulation to life sciences, and in its ability to scale from a single GPU to multi-node implementations.

Modulus uses a parameterised system representation that solves for multiple scenarios in near real time, so you can train once offline and then run inference repeatedly. Its AI Toolkit provides building blocks for developing physics-ML surrogate models that combine physics and data. The Modulus APIs let domain experts work at a higher level of abstraction, and detailed reference applications serve as starting points for extending it to new applications. NVIDIA presents these features as making Modulus a foundation for scientific digital twins.
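The train-once, infer-repeatedly workflow can be shown with a toy parameterised problem. In the sketch below, a deliberately slow solver for the decay equation dy/dt = -k·y is run offline for a grid of parameter values k, and later queries for new values of k are answered by cheap interpolation. This swaps Modulus's physics-informed neural networks for plain linear interpolation, so it illustrates only the workflow, not Modulus's training method; all names are hypothetical.

```python
import bisect
import math

def expensive_solver(k, steps=20_000):
    """Integrate dy/dt = -k*y from y(0)=1 to t=1 with many small Euler steps."""
    y, dt = 1.0, 1.0 / steps
    for _ in range(steps):
        y -= k * y * dt
    return y

def train_surrogate(k_min, k_max, samples=21):
    """Offline phase: run the costly solver once per sampled parameter value."""
    ks = [k_min + i * (k_max - k_min) / (samples - 1) for i in range(samples)]
    return ks, [expensive_solver(k) for k in ks]

def infer(surrogate, k):
    """Online phase: cheap linear interpolation between stored solutions.

    Assumes k lies inside the trained range; outside it the result extrapolates.
    """
    ks, ys = surrogate
    i = min(max(bisect.bisect_left(ks, k), 1), len(ks) - 1)
    t = (k - ks[i - 1]) / (ks[i] - ks[i - 1])
    return ys[i - 1] + t * (ys[i] - ys[i - 1])

surrogate = train_surrogate(0.5, 2.5)   # train once...
for k in (0.8, 1.3, 2.1):               # ...then query many scenarios cheaply
    print(k, round(infer(surrogate, k), 4), "exact:", round(math.exp(-k), 4))
```

The cost is concentrated in `train_surrogate`; each later query is a binary search and one interpolation, which is the property that makes near-real-time exploration of many scenarios possible.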

USD Connectors

As part of a collaborative effort with partners in the industrial, design, simulation and CAD software ecosystems, NVIDIA is introducing 11 new Omniverse Connectors, which are USD-based plugins. Connectors for PTC Creo, Visual Components and SideFX Houdini are newly available in beta, and Connectors for Blender, Autodesk Alias, Autodesk Civil 3D, Siemens JT, SimScale, Open Geospatial Consortium and Unity are in development.
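At its core, a Connector translates an application's scene data into USD. Production Connectors use the USD libraries rather than hand-written text. The standard-library-only sketch below simply emits a small USD ASCII (`.usda`) layer from hypothetical in-memory scene data, so the target format is visible. The `to_usda` function and the scene dictionary are illustrative assumptions and are not part of any Connector, and only cubes are handled.

```python
def to_usda(cubes):
    """Write {prim_name: {"size": s, "translate": (x, y, z)}} as a .usda layer."""
    lines = ["#usda 1.0", "", 'def Xform "World"', "{"]
    for name, cube in cubes.items():
        x, y, z = cube["translate"]
        lines += [
            f'    def Cube "{name}"',
            "    {",
            f'        double size = {cube["size"]}',
            f"        double3 xformOp:translate = ({x}, {y}, {z})",
            '        uniform token[] xformOpOrder = ["xformOp:translate"]',
            "    }",
        ]
    lines.append("}")
    return "\n".join(lines) + "\n"

# Hypothetical scene data, as an application plugin might gather it.
scene = {
    "CrateA": {"size": 1.0, "translate": (0.0, 0.5, 0.0)},
    "CrateB": {"size": 0.5, "translate": (1.5, 0.25, 0.0)},
}
usda_text = to_usda(scene)
print(usda_text)
```

If Pixar's USD Python bindings are installed, writing `usda_text` to a file and opening it with `Usd.Stage.Open` should show the two cubes under `/World`. Prim names are assumed to be valid USD identifiers; a real exporter would sanitise them.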

These Connectors are anticipated to open up further metaverse workflows for manufacturing, engineering and design companies. They bring the total number of Connectors in the Omniverse USD ecosystem to 112.

Simulation Systems


NVIDIA has made significant changes to the core simulation systems that represent materials, physics and light in metaverse worlds.

NVIDIA Material Definition Language (MDL), in use for 10 years as a standard for physically accurate representation of 3D materials, is now fully open source, so developers can add MDL support to any renderer. Its SDK provides C++ and Python APIs for integrating physically based materials into rendering applications.

NeuralVDB, coming soon in beta, is the next evolution of OpenVDB, bringing AI and GPU optimisation to sparse volume datasets. It is expected to reduce the memory footprint of such massive datasets by up to 100x. When VDB data is encoded into a NeuralVDB representation, it passes through a compressor designed to preserve information and temporal coherency between frames, so that the NeuralVDB representation can be decoded back into a standard VDB volume.

www.nvidia.com