New York City Marathon Races Ahead with IP/Bonded Cellular Network

The TCS New York City Marathon is the world’s largest marathon, organised by the New York Road Runners (NYRR) community running organisation. The race course begins in Staten Island and continues through Brooklyn, Queens and The Bronx to finish at Central Park in Manhattan. Started in 1970 and run every year except 2012, the Marathon is now in its 49th year and was held in 2019 on 3 November.

Live event productions specialist CP Communications has managed content acquisition for the race for 25 years. Over the past four years, as bandwidth and network availability have improved, the crew has gradually relied less on traditional RF systems to cover the race, and instead started using more cost-efficient IP and bonded cellular systems. This year, they completed their transition, using an entirely IP and bonded cellular networking infrastructure for live coverage of the Men’s and Women’s races across all five boroughs.

Mobile and Rooftop Acquisition

The company brought back many of the same products and systems that have proved successful in recent years, while changing the technical infrastructure and production workflows to keep coverage reliable throughout the 42.2km course. By using the fibre network now in place throughout NYC, CP Communications could increase flexibility at all locations and further reduce reliance on traditional video transmission circuits.

Four cars and three motorcycles were equipped with stabilised mounts and lenses – Sony HDC-P1 cameras were mounted on the cars and HDC-2500s on the motorcycles. A POV camera for talent was installed on two of the cars.

Mobile Viewpoint Agile Airlink encoder/transmitters captured and streamed the live action from the cameras, encoded as H.265/HEVC video, via bonded cellular connections back to CP Communications’ production truck HD-21 located at the finish line. Agile Airlink can transmit up to four video streams in H.265, a bandwidth-efficient codec, and bonds eight combined 3G/4G connections to raise live streaming speed and quality. The H.265 compression also reduces data card usage, and WiFi and Ethernet connections can be added to the Agile Airlink to increase upload capacity.
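To make the idea of bonding concrete, the sketch below shares one stream's bitrate across several cellular links in proportion to each link's usable capacity. It is an illustration only, not Mobile Viewpoint's algorithm: the function name `split_bitrate`, the `headroom` safety factor and the per-modem capacity figures are all assumptions made for the example.

```python
def split_bitrate(stream_kbps, link_capacities_kbps, headroom=0.8):
    """Share a stream's bitrate across links in proportion to usable capacity.

    headroom is a hypothetical safety factor: only this fraction of each
    link's measured capacity is treated as usable.
    """
    usable = [capacity * headroom for capacity in link_capacities_kbps]
    total = sum(usable)
    if stream_kbps > total:
        raise ValueError("stream bitrate exceeds bonded capacity")
    # Each link carries a share proportional to its usable capacity.
    return [stream_kbps * u / total for u in usable]


# Hypothetical measured uplink capacities for eight 3G/4G modems, in kbps.
links = [1500, 2200, 800, 3000, 1200, 2500, 900, 1900]
shares = split_bitrate(6000, links)
for capacity, share in zip(links, shares):
    print(f"link {capacity:>4} kbps carries {share:7.1f} kbps")
```

A real bonding system would re-measure each link continuously and rebalance as coverage changes along the course. The fixed capacity list here simply stands in for one such measurement.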

As well as the vehicles, Agile Airlinks were used on two rooftop locations in Brooklyn and Manhattan, and at three remote production locations in Brooklyn, Manhattan and the Bronx. For cellular-congested areas at the start and finish lines, the rooftop sites used traditional microwave links as backup transmission. The Agile Airlinks at the remote sites captured live interviews with racers, NYRR spokespeople and other subjects over the course of the day.

Dynamic Signal Routing

New this year, CP Communications added a Mobile Viewpoint 2K4 playout server at each rooftop and remote site so that technical personnel could route any signal between any of those five locations. The same system was added to each vehicle. CP Communications Technical Manager Frank Rafka described this as important for the event because it meant the team could send IFB return video to each vehicle and remote site and communicate directly with talent across all locations.

“Having return video to the cars over the entire course was one of the biggest changes to the infrastructure this year and a major benefit of managing IP encoding in the cloud. We had a valuable return system to move video, audio and other signals between all of our acquisition points,” said Frank. “We could directly monitor all locations and dynamically make changes as necessary. The hotspots and cellular networks available to Mobile Viewpoint made it simple to achieve.”

CP Communications CEO Kurt Heitmann said, “The 2K4 playout servers are essentially the final decode point in the truck, where all of our inputs end up. From there, we supply an IP return to the rooftops. Only for the Bay Ridge rooftop location, which has no traditional service over part of the nearby bridge, do we have to set up traditional microwave.

“Otherwise we move the signals to bonded cellular and IP, and at the truck, the 2K4 servers allow us to remain in an IP world. Sending return video to the rooftops over IP allows the producers to accommodate QC during the race. We also send IP return video to each of the cars so that the talent can see the program when they perform.”

Audio Management

Microphone capture was embedded locally at each site, allowing all the audio to remain in lip sync with the video. “Whenever external audio is managed and mixed in a microwave set-up, it needs to be connected separately to the transmitter - in the case of RF microphones in this scenario, the connection is made with a regular XLR input,” Kurt said.

“However, while only one or two channels are available on a microwave, now with bonded cellular, we can manage eight audio channels per video stream. Because we can embed all of that audio into the video stream locally, and then transmit everything back to the truck over IP, the audio is perfectly timed with the video. All lip sync issues are eliminated.”

Vehicle and remote audio were managed and delivered to the broadcasters via an Audinate Dante network, supporting standards-based routable IP transport, that was managed on board HD-21. CP Communications used RTS OMNEO intercoms to link HD-21 to the host broadcaster, allowing direct communications throughout the TV broadcast compound. OMNEO networking also uses standard IP Ethernet and Dante transport. Devices with OMNEO integrated can be assembled into a large network, controlled under one common system, and exchange synchronised, multichannel audio.

Separately, CP Communications used a Unity Intercom system deployed over the wider cellular network so that NYRR personnel and spotters along the course were able to communicate with the broadcast compound.

“We changed intercom systems for this network because it needed a mobile system, like Unity,” said Kurt. “We can talk to all the spotters and drivers, who talk to the camera operators - anybody moving anywhere around the course can be added to a Unity cell-phone based intercom system. OMNEO, on the other hand, is a hardware intercom protocol, and since you can’t really hardwire a course like this, we use the cell phone system instead as a practical way to communicate.”

Ready for Broadcast

As the project’s content acquisition company, CP Communications’ responsibility was to acquire all the content off the course – from the cars, motorcycles and remote sites – and then convert and route the baseband signals to NEP, who supplied the broadcast production truck and services. All video and audio signals were delivered from HD-21 to an NEP broadcast truck at the finish line, using a MultiDyne VF-9000 fibre transmission system.

Kurt said, “We had the Mobile Viewpoint encoder and server to move HD SDI into an IP world, get it off the rooftops and keep it in IP. Then for the handoff to NEP, which again needs baseband HD SDI, we decoded the signals in HD-21 and transmitted it to the NEP truck in baseband, using the MultiDyne VF-9000 fibre transmission system.”

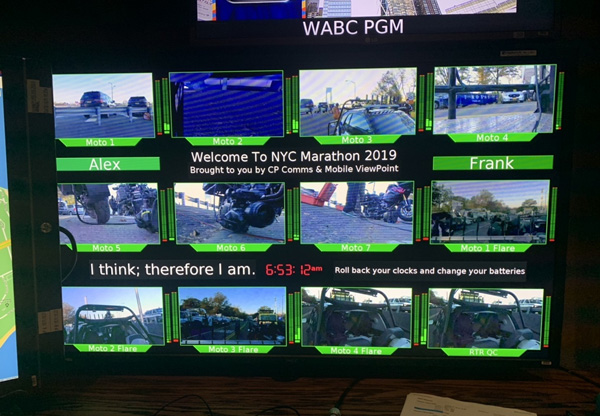

On board HD-21, CP Communications personnel monitored bitrates and dataflow from all of the Agile Airlink devices, as well as signals leaving the truck through its 24x24 routing infrastructure. NEP produced world feed uplinks along with the domestic national broadcast for ESPN2.

As a further source of real-time information, CP Communications’ team wrote software to interpret data and GPS locations arriving at HD-21 from 28 tracking vehicles. Overlaid on a Google map, the data showed exactly where those vehicles were as the race moved into areas and zones with more challenging cellular coverage. cpcomms.com
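The article does not describe how the tracking software works. As a rough sketch of the kind of check it might perform, the Python below flags vehicles whose GPS position falls inside a zone marked as having weak cellular coverage. Everything in it is hypothetical: the vehicle IDs, coordinates, zone name, radius and the function `vehicles_in_weak_zones` are invented for the example. It prints plain text rather than drawing on a map.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two lat/lon points, in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def vehicles_in_weak_zones(reports, zones):
    """Map each vehicle ID to the weak-coverage zones it is currently inside."""
    flagged = {}
    for vehicle_id, lat, lon in reports:
        for name, zone_lat, zone_lon, radius_km in zones:
            if haversine_km(lat, lon, zone_lat, zone_lon) <= radius_km:
                flagged.setdefault(vehicle_id, []).append(name)
    return flagged


# Hypothetical zone: (name, centre latitude, centre longitude, radius in km).
zones = [("zone-A", 40.6066, -74.0447, 1.0)]
# Hypothetical GPS reports: (vehicle ID, latitude, longitude).
reports = [("car-1", 40.6070, -74.0450), ("moto-2", 40.7712, -73.9742)]

flagged = vehicles_in_weak_zones(reports, zones)
for vehicle_id, names in flagged.items():
    print(f"{vehicle_id} is inside: {', '.join(names)}")
```

With circular zones, each check is a single distance comparison. A production tool would more likely use polygons drawn from measured coverage and plot the results on the map itself.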