Akamai’s new service based on NVIDIA’s RTX 4000 GPU improves the efficiency and economics of compute resources for M&E companies that need to process more content, faster.

Akamai has added a new service, based on NVIDIA GPUs and optimised for media-specific applications, to its cloud portfolio. Using the NVIDIA RTX 4000 Ada Generation GPU, the new service is intended to improve the productivity and economics of computing resources for media and entertainment (M&E) companies that need to process video content faster and more efficiently.

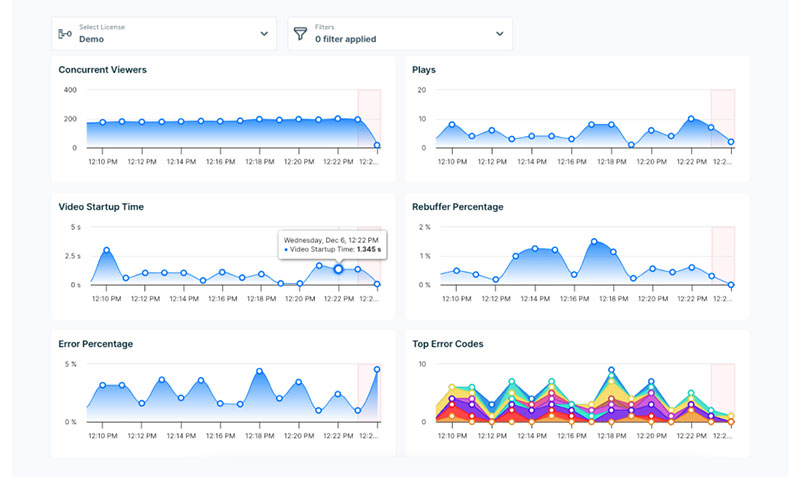

Akamai says its internal benchmarking shows that GPU-based encoding on the NVIDIA RTX 4000 can process 25 times more frames per second (FPS) than traditional CPU-based encoding and transcoding methods. The company believes these results present a significant advantage for streaming service providers as they address their typical workload challenges.

Using the new service, M&E companies can build scalable, resilient architectures and deploy workloads that will be faster, more reliable and portable, while using Akamai’s distributed cloud platform and integrated content delivery and security services.

“Media companies need low-latency, reliable compute resources that maintain the portability of the workloads they create,” said Shawn Michels, Vice President of Cloud Products at Akamai. “NVIDIA GPUs may result in a better price / performance result when deployed on Akamai's global edge platform. Together with our Qualified Compute Partners program and open platform, customers have the capability to set up cloud agnostic processing workloads that support multicloud architectures.”

Akamai’s Compute Partners build, run and secure their applications on Akamai Connected Cloud, a globally distributed edge and cloud platform with continuous computing power that helps to enhance interoperability. Partners complete a qualification process to ensure the applications are readily available to deploy and scale across Akamai’s Cloud.

Industry Optimised GPUs

In a market that primarily uses NVIDIA GPUs to support large language models, Akamai’s GPU service focusses on the media industry. Jay Jenkins, Chief Technology Officer, Cloud Computing for Akamai, talked about developing the service. “We have found that media companies are currently under-served by existing services, which are largely pitched at other industries. They can be expensive and may not be an optimal fit in terms of price for performance,” he said.

“Akamai has years of experience with customers in the media industry. We're continuously working and speaking with them as partners to develop new products that will enable them to build scalable, resilient architectures and deploy faster, more reliable and portable workloads. Combining our infrastructure with the NVIDIA RTX 4000 results in a full stack of media and entertainment capabilities from the edge, to storage and back again.”

A single-slot GPU for professionals, the NVIDIA RTX 4000 GPU reaches the speed and power efficiency necessary to perform in creative, design and engineering workflows in digital content creation, 3D modelling, rendering, inferencing and video content streaming – from the desktop.

It also has latest-generation RT Cores, Tensor Cores and CUDA cores along with 20GB of GDDR6 graphics memory, which makes the GPU well suited to processing content such as virtual reality (VR) scenes and augmented reality (AR) elements. VR and AR applications require 3D graphics and multimedia content to be rendered in real time.

GPUs are also widely used in the gaming industry, mainly for fast, high-quality 3D graphics rendering and other tasks related to video game development.

Streaming – Faster-Than-Real-Time Transcodes

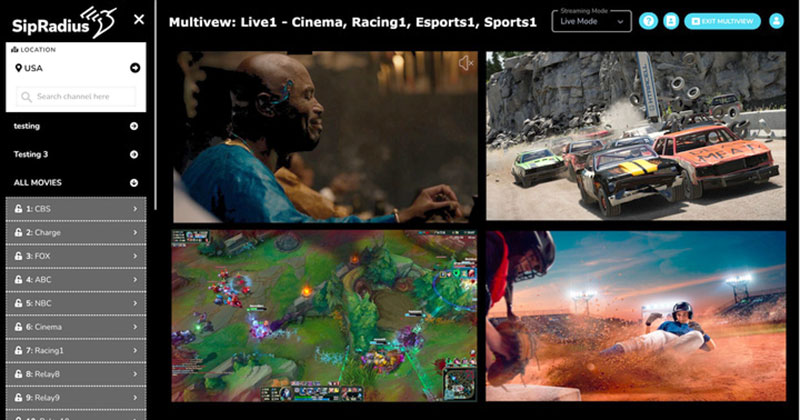

For streaming applications, GPUs can transcode live video streams faster than real time, which improves the streaming experience by reducing buffering and smoothing playback. GPU-based encoding is also more efficient and reduces processing times compared with traditional CPU-based transcoding.
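To make "faster than real time" concrete, the sketch below computes a real-time factor: encoder throughput divided by the source frame rate. A value above 1.0 means the transcoder stays ahead of a live stream. The function names and the frame rates are illustrative assumptions, not Akamai benchmark figures; only the 25x ratio is taken from the benchmarking claim quoted in this article.

```python
def real_time_factor(encode_fps: float, source_fps: float) -> float:
    """Return seconds of video processed per second of wall-clock time."""
    if encode_fps <= 0 or source_fps <= 0:
        raise ValueError("frame rates must be positive")
    return encode_fps / source_fps


def transcode_seconds(duration_s: float, encode_fps: float, source_fps: float) -> float:
    """Estimate wall-clock time needed to transcode a clip of the given duration."""
    return duration_s / real_time_factor(encode_fps, source_fps)


# Hypothetical numbers: a 30 fps source and a CPU encoder managing 24 fps.
cpu_fps = 24.0
gpu_fps = cpu_fps * 25  # the 25x ratio quoted from Akamai's internal benchmarking

print(real_time_factor(cpu_fps, 30.0))  # below 1.0: falls behind a live stream
print(real_time_factor(gpu_fps, 30.0))  # above 1.0: faster than real time
```

Under these assumed figures, the CPU path cannot keep up with a live feed, while the GPU path leaves headroom for additional renditions or streams.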

The RTX 4000 is equipped with new-generation NVIDIA NVENC and NVDEC engines. These hardware units are built into NVIDIA graphics cards to perform video encoding and decoding, in effect off-loading these compute-intensive tasks from the CPU to a dedicated part of the GPU.

This off-loading frees extra capacity for simultaneous encoding and decoding tasks, which is critical for applications such as live streaming that need high-throughput video processing. The eighth-generation NVENC engines support modern video codecs, including the very efficient AV1, which can deliver higher-quality video at lower bitrates.
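As an illustration of how a workload might use NVDEC and NVENC, the sketch below builds an FFmpeg command that decodes and encodes on the GPU. It assumes an FFmpeg build with NVIDIA hardware acceleration enabled and a GPU that supports AV1 encoding; the helper name, file names and bitrate are hypothetical, and nothing here is specific to Akamai's service.

```python
import shlex


def build_nvenc_command(src: str, dst: str, codec: str = "av1_nvenc",
                        bitrate: str = "4M") -> list[str]:
    """Build an ffmpeg argument list that decodes and encodes on the GPU."""
    return [
        "ffmpeg",
        "-hwaccel", "cuda",                # decode on the GPU (NVDEC)
        "-hwaccel_output_format", "cuda",  # keep decoded frames in GPU memory
        "-i", src,
        "-c:v", codec,                     # encode on the GPU (NVENC)
        "-b:v", bitrate,                   # target video bitrate
        "-c:a", "copy",                    # pass audio through untouched
        dst,
    ]


# Hypothetical file names; the command is printed rather than executed.
cmd = build_nvenc_command("input.mp4", "output.mkv")
print(shlex.join(cmd))
```

Keeping decoded frames in GPU memory avoids copying them back to the CPU between the decode and encode steps. On hardware without AV1 support, `h264_nvenc` or `hevc_nvenc` could be passed as the codec instead.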

Wider Applications

While Akamai optimised the new GPU service for the media market, it also serves developers and companies who want to build apps associated with other industry use cases. These include generative AI and ML, one of the primary applications of GPU cloud computing. GPUs are well-suited for tasks such as training and inference with neural networks because they can run many calculations in parallel, allowing faster, more efficient training of new models and leading to better accuracy and performance.

Jenkins said, “While we are currently focused on rolling out the RTX 4000 Ada GPUs, we're also constantly looking at the specialised processor space and how we can help by optimising systems for customers that have varying needs.”

The NVIDIA RTX 4000 GPU’s inferencing performance is partly due to the NVIDIA Ada Lovelace architecture. Its 192 fourth-generation Tensor Cores accelerate more data types and include a new Fine-Grained Structured Sparsity feature that gives extra throughput for tensor matrix operations compared with the previous generation. The 20GB of memory accommodates large models and datasets.
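Fine-grained structured sparsity on NVIDIA Tensor Cores uses a 2:4 pattern, in which two of every four consecutive weights are zero so the hardware can skip them. The sketch below shows that pattern in plain Python by keeping the two largest-magnitude values in each group of four. It is only an illustration of the pruning pattern, not NVIDIA's tooling, and the function name is hypothetical.

```python
def prune_2_of_4(weights: list[float]) -> list[float]:
    """Zero the two smallest-magnitude values in each group of four weights."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(v if i in keep else 0.0 for i, v in enumerate(group))
    return pruned


row = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.0]
print(prune_2_of_4(row))  # [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]
```

Because exactly half of each group is zero, the non-zero values can be stored compactly, which is where the extra matrix throughput comes from. In practice a model is usually fine-tuned after pruning to recover accuracy.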

GPU cloud computing is also commonly used in data analysis and scientific computing because these tasks typically involve processing large amounts of data. Like inference, analysis and simulation are time-consuming and computationally intensive, and can be accelerated by parallel processing.

In short, nearly any high-performance computing application that uses modelling and simulation needs fast, efficient data processing, which GPU cloud computing can accelerate.

“In order to support a wide range of workloads, you need a wide array of compute instances,” Shawn Michels said. “What we’re doing with industry-optimised GPUs is one of many steps we’re taking for our customers to increase instance diversity across the entire continuum of compute to drive and power edge native applications.” www.akamai.com