AI Adapts Streaming to Content Complexity

Video consumers have come to value choice and flexibility nearly as much as the huge variety of content now available. With so many competing on-demand and OTT services, many viewers take anytime, anywhere, any-device viewing for granted. Conviva, which analyses viewer data from 180 countries served by approximately 1,200 ISPs, has published figures showing that 12.6 billion hours of content were viewed through OTT services in 2017, roughly double the previous year's total.

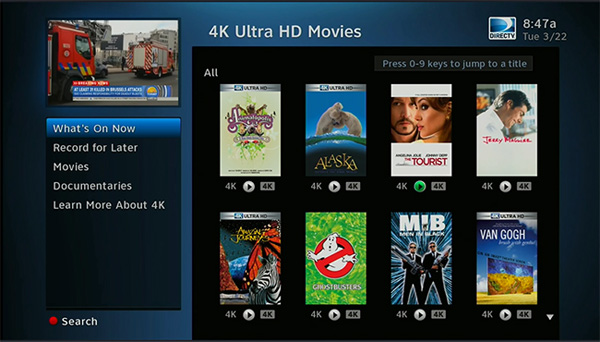

Where viewers once had to watch whatever was on offer, they can now pick and choose. As a result, competition to supply the most compelling movies and box-sets is fierce. Jérôme Vieron, PhD, Director of Research & Innovation for ATEME, believes that quality of service has become a further differentiator. Speaking recently to Digital Media World, he said that viewers' expectations have continued to rise with new formats such as 4K/UHD, HDR and HFR, and that this is driving a steady stream of innovation, particularly in streaming.

The Optimised Experience

One of the most recent developments concerns the use of artificial intelligence to analyse hundreds of thousands of assets before making playout recommendations, which typically include optimum video configurations such as resolution or encoding bitrate.

According to Jérôme, this approach has been shown to reduce operators' content delivery costs considerably while also improving the quality of delivery. He said, “Video bitrate, for instance, impacts storage and delivery; in order to save costs on these factors, bitrate has to be reduced as far as acceptably possible. But many operators find that a ‘one size fits all’ streaming approach lowers the quality of the viewing experience, especially when bandwidths are low. In such a fast-moving competitive environment, they can’t afford this compromise.”
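The cost argument follows from simple arithmetic: an asset's size is its bitrate multiplied by its duration, and delivery volume scales with the number of views. The short Python sketch below uses purely illustrative figures (a two-hour title, bitrates of 6 and 4.5 Mbps, 100,000 views); these are assumptions, not numbers from ATEME or Conviva, and the function names are hypothetical.

```python
def asset_size_gb(bitrate_mbps: float, duration_s: float) -> float:
    """Size in gigabytes (10^9 bytes) of one copy encoded at a constant bitrate."""
    bits = bitrate_mbps * 1_000_000 * duration_s
    return bits / 8 / 1_000_000_000


def delivery_volume_tb(bitrate_mbps: float, duration_s: float, views: int) -> float:
    """Terabytes (10^12 bytes) delivered if every view streams the whole asset."""
    return asset_size_gb(bitrate_mbps, duration_s) * views / 1000


TWO_HOURS_S = 2 * 60 * 60  # illustrative feature-length title

for mbps in (6.0, 4.5):  # assumed bitrates, before and after optimisation
    print(f"{mbps} Mbps: {asset_size_gb(mbps, TWO_HOURS_S):.2f} GB per copy, "
          f"{delivery_volume_tb(mbps, TWO_HOURS_S, 100_000):.0f} TB for 100,000 views")
```

With these assumed numbers, trimming the bitrate from 6 to 4.5 Mbps shrinks each copy from 5.4 GB to about 4.05 GB and delivery from 540 TB to about 405 TB, a 25% saving. The difficulty the quote points to is making that cut without visibly hurting quality.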

Adapting to Content

At first, adaptive streaming looked like the answer. Under this approach, the same media file is encoded at a number of different bitrates, producing multiple representations, called profiles, at different qualities. As the quality of the viewer's internet connection varies, the player switches between these representations to keep playback smooth. The downside is that each profile's bitrate does not match the complexity of the content: on simple content it is higher than necessary, and on complex content it is too low, so quality is never fully optimised.
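In conventional adaptive streaming the player's choice depends only on the network. The following is a minimal sketch of throughput-based profile selection, assuming a hypothetical four-profile ladder and a simple 80% safety margin; the names `Profile`, `LADDER` and `choose_profile` are illustrative, and real players use more elaborate buffer- and throughput-based heuristics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    name: str
    height: int        # vertical resolution in pixels
    bitrate_kbps: int  # one fixed encoding bitrate, used for every title and scene


# Hypothetical fixed ladder: every asset is encoded at the same profiles.
LADDER = [
    Profile("1080p", 1080, 6000),
    Profile("720p", 720, 3500),
    Profile("480p", 480, 1500),
    Profile("360p", 360, 800),
]


def choose_profile(throughput_kbps: float, ladder=LADDER, safety: float = 0.8) -> Profile:
    """Pick the highest-bitrate profile that fits within a margin of measured throughput."""
    budget = throughput_kbps * safety
    candidates = [p for p in ladder if p.bitrate_kbps <= budget]
    if not candidates:
        # Connection too slow for any profile: fall back to the lowest one.
        return min(ladder, key=lambda p: p.bitrate_kbps)
    return max(candidates, key=lambda p: p.bitrate_kbps)


print(choose_profile(10_000).name)  # 1080p
print(choose_profile(5_000).name)   # 720p
```

Nothing in `choose_profile` looks at the content itself: a static talking-head scene and a fast sports sequence both receive 6,000 kbps at 1080p, which is exactly the mismatch described above.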

“Recognising this shortfall, developers have been working on a type of streaming that adjusts bitrates based on the complexity of the content rather than just the internet connection,” Jérôme said. “The result is content adaptive streaming, which uses AI to compute all the necessary information, such as motion estimation, to make intelligent allocation decisions. Using a variable bitrate to reach constant quality allows bits to be saved when complexity drops, on slow scenes for example, and allows lower-quality profiles to be used on simpler content.”
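The principle of using a variable bitrate to reach constant quality can be illustrated with a toy model. Assume each scene carries a complexity score; the `complexity` field, the linear rate model in `bitrate_for_quality` and its `floor` parameter are all hypothetical, chosen only for illustration, since real encoders rely on measured rate-quality behaviour rather than a formula like this. The sketch compares the bits spent by a single fixed 6,000 kbps profile with a per-scene allocation aimed at one quality target.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scene:
    duration_s: float
    complexity: float  # hypothetical score in [0, 1], e.g. derived from motion estimation


def bitrate_for_quality(complexity: float, peak_kbps: float = 6000.0, floor: float = 0.3) -> float:
    """Hypothetical rate model: kbps needed to hold one fixed quality target.

    Complexity 1.0 needs peak_kbps; a fully static scene still needs floor * peak_kbps.
    """
    c = min(max(complexity, 0.0), 1.0)  # clamp the score to [0, 1]
    return peak_kbps * (floor + (1.0 - floor) * c)


def total_kbits(scenes: list[Scene], kbps_for_scene) -> float:
    """Total kilobits spent when each scene is encoded at kbps_for_scene(scene)."""
    return sum(kbps_for_scene(s) * s.duration_s for s in scenes)


# Illustrative three-minute clip: a calm scene, an action scene, a near-static scene.
scenes = [Scene(60, 0.2), Scene(30, 0.9), Scene(90, 0.1)]

fixed = total_kbits(scenes, lambda s: 6000.0)  # one bitrate for everything
adaptive = total_kbits(scenes, lambda s: bitrate_for_quality(s.complexity))

print(f"fixed: {fixed:.0f} kbit, content adaptive: {adaptive:.0f} kbit")
```

In this toy example the constant-quality allocation spends roughly half the bits of the fixed profile, almost all of the saving coming from the two slow scenes, which mirrors the quote's point about saving bits when complexity drops.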

Chunking and Motion

The other difference between adaptive and content adaptive streaming lies in chunking: splitting the stream into short segments that the player requests one at a time, a process that improves playback efficiency. The traditional approach keeps chunks at a fixed length, which can conflict with the way motion is encoded. In compressed video, frames are grouped so that an I-frame is coded on its own, while the frames that follow it store mainly the motion and changes relative to their neighbours. Most playout systems require each chunk to start with an I-frame (one of the three frame types used in compression, alongside P- and B-frames) so that the player can switch profiles between chunks.

Fixed-size chunks force I-frames into positions that have nothing to do with the content. If a scene cut falls just before a chunk boundary, the new scene has to be intra-coded twice, once at the cut and again at the boundary a few frames later, which wastes bits. Advertising breaks are also not usually aligned with chunk boundaries, causing further inefficiency with fixed-size chunks. In dynamic ad insertion, for example, the ad delivery system aligns chunks to ad borders, so it is in effect already using variable chunk sizes.
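The scene-cut problem can be made concrete by counting I-frames. The sketch below assumes 25 fps, 2-second (50-frame) chunks and three hypothetical scene cuts, and it assumes the encoder starts every new scene with an I-frame; the function names are illustrative only.

```python
def fixed_chunk_starts(num_frames: int, chunk_len: int) -> list[int]:
    """First frame of each chunk when every chunk has the same length."""
    return list(range(0, num_frames, chunk_len))


def iframe_positions(chunk_starts: list[int], scene_cuts: list[int]) -> list[int]:
    """Frames that must be intra-coded: every chunk start (so the player can switch
    profiles there) plus every scene cut (assumed: the encoder begins each new scene
    with an I-frame for compression efficiency)."""
    return sorted(set(chunk_starts) | set(scene_cuts))


def near_duplicate_iframes(chunk_starts: list[int], scene_cuts: list[int],
                           window: int) -> list[tuple[int, int]]:
    """(cut, boundary) pairs where a chunk boundary falls within `window` frames
    after a scene cut, i.e. the new scene is intra-coded twice in quick succession."""
    pairs = []
    for cut in scene_cuts:
        for start in chunk_starts:
            if 0 < start - cut <= window:
                pairs.append((cut, start))
    return pairs


starts = fixed_chunk_starts(300, 50)  # 12 s at an assumed 25 fps, 2-second chunks
cuts = [47, 130, 198]                 # hypothetical scene-cut frames
print(len(iframe_positions(starts, cuts)))              # 9
print(near_duplicate_iframes(starts, cuts, window=5))   # [(47, 50), (198, 200)]
```

Two of the three cuts land a few frames before a fixed boundary, so each of those scenes pays for two I-frames almost back to back.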

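I-frames are coded without reference to any other frame, so they contain considerably more bits than the other frame types. More I-frames give the player more points at which to start playback or switch profiles, but each one enlarges the stream, increasing storage and delivery costs or, at a fixed bitrate, leaving fewer bits for the frames around it.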

Scene-Cut Detection

Content adaptive streaming side-steps some of these chunking issues by combining a scene-cut detection algorithm in the video encoder with rules that keep chunk sizes within reasonable limits and minimise drift (variation in, or fading of, a signal due to detail), so that the asset is prepared for more efficient packaging. This not only saves costs by reducing traffic, storage and other overheads, but also improves the quality of experience for the consumer.
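The article does not spell out the chunk-size rules, so what follows is a minimal sketch under our own assumptions. A hypothetical `dynamic_chunk_starts` function takes scene-cut frame indices (from any scene-cut detector) plus a minimum and maximum chunk length in frames, snaps each boundary to the latest scene cut that keeps the chunk within those limits, and forces a boundary only when no cut is available. It does not model drift; ad insertion points could plausibly be handled the same way by adding them to the list of cuts.

```python
import bisect


def dynamic_chunk_starts(num_frames: int, scene_cuts: list[int],
                         min_len: int, max_len: int) -> list[int]:
    """Return the first frame index of each chunk.

    Boundaries snap to scene cuts where possible; every chunk except possibly
    the last is between min_len and max_len frames long.
    """
    if not 0 < min_len <= max_len:
        raise ValueError("need 0 < min_len <= max_len")
    cuts = sorted({c for c in scene_cuts if 0 < c < num_frames})
    starts = [0]
    start = 0
    while num_frames - start > max_len:
        lo, hi = start + min_len, start + max_len  # allowed window for the next boundary
        j = bisect.bisect_right(cuts, hi)          # cuts[:j] are all <= hi
        if j > 0 and cuts[j - 1] >= lo:
            start = cuts[j - 1]  # latest scene cut inside the window
        else:
            # No usable cut: force a boundary, but avoid leaving a tiny final chunk.
            start = max(lo, min(hi, num_frames - min_len))
        starts.append(start)
    return starts


# Hypothetical clip: 300 frames (12 s at 25 fps), chunks of 1 to 4 seconds.
cuts = [47, 130, 198]
starts = dynamic_chunk_starts(300, cuts, min_len=25, max_len=100)
print(starts)                        # [0, 47, 130, 198, 275]
print(len(set(starts) | set(cuts)))  # 5 I-frames in total
```

Every boundary except the final forced one coincides with a scene cut, so no scene is intra-coded twice. Choosing the latest cut in the window favours longer chunks; choosing the earliest would be an equally valid design if shorter chunks were preferred.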

Content adaptive streaming systems have also been designed to interoperate with existing infrastructure, so individual features such as dynamic chunking can be turned on and off. Operators can also use the specific resolutions they want, even if these exceed or fall short of the values the system judges optimal. www.ateme.com

Words: Adriene Hurst, Editor