Adobe Firefly’s generative AI models, trained with NVIDIA for the generation of images and text effects, add precision, speed and ease of use to Creative Cloud and Adobe Express workflows.

Adobe Firefly is a new group of creative generative AI models focused on the generation of images and text effects intended to add precision, speed and ease of use to Creative Cloud, Document Cloud, Experience Cloud and Adobe Express workflows. Adobe Firefly will be part of a series of new Adobe Sensei generative AI services across Adobe’s clouds.

Generative AI models can respond to prompts by generating text, images or other media. Some are built on large language models, machine learning models that apply natural language processing to use language in ways familiar to humans, such as classifying text, answering questions and translating text from one language to another.

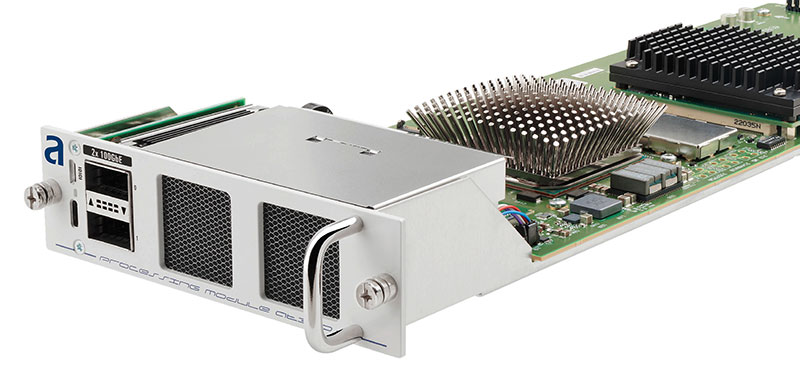

NVIDIA Partnership

Having announced a new partnership in support of generative AI in creative workflows, Adobe and NVIDIA will also co-develop generative AI models with a focus on deep integration into commonly used content creation applications. Following development, some of the joint models will be brought to market through Adobe’s Creative Cloud, as well as through the new NVIDIA Picasso cloud service for reach into the wider community of third-party developers.

With NVIDIA Picasso, users can build and deploy generative AI-powered image, video and 3D applications with text-to-image, text-to-video and text-to-3D capabilities to support digital simulation through simple cloud APIs.

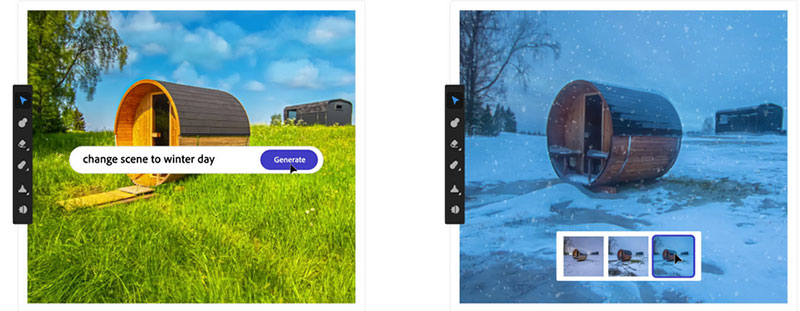

NVIDIA Picasso text-to-image generation

Enterprises, software creators and service providers can run inference on their own models, train NVIDIA Edify foundation models on proprietary data, or start from pretrained models to generate image, video and 3D content from text prompts. The Picasso service is optimised for GPUs or for use in the NVIDIA DGX Cloud, an AI supercomputer accessible via a browser.

Over the past 10 years, Adobe has applied its Sensei AI engine to developing new features for the company’s software. Artists are now using features like Neural Filters in Photoshop, Content Aware Fill in After Effects, Attribution AI in Adobe Experience Platform and Liquid Mode in Acrobat to create, edit, measure, optimise and review content. These innovations are developed and deployed in alignment with Adobe’s AI ethics principles of accountability, responsibility and transparency. (More on this topic below.)

From Imagination to Words to Content

With Adobe Firefly, people creating content, regardless of their experience or skill, will be able to use their own words to generate content the way they imagine it, from images, audio, vectors, videos and 3D, to creative elements like brushes, colour gradients and video transformations. With Adobe Firefly, producing variations of content and making changes multiple times – all within the user’s definitions – will be quicker and simpler. Adobe will integrate Firefly directly into its tools and services, which means users can use generative AI within their existing workflows.

Adobe launched a beta for Firefly that shows how creators of all experience and skill levels can generate original images and text effects. On the understanding that the full potential of computer processing cannot be realised without imagination, Adobe will use the beta process to engage with the creative community and customers as it evolves this new system and begins integrating it into its applications. The first applications to receive Adobe Firefly integration will be Adobe Express, Experience Manager, Photoshop and Illustrator.

Safe for Commercial Use

Priorities of the Adobe-NVIDIA partnership include supporting commercial viability of the new technology and ensuring content transparency and correct use of Content Credentials powered by Adobe’s Content Authenticity Initiative.

Adobe Firefly will be made up of multiple models, tailored to serve customers with diverse skillsets and technical backgrounds, working across a variety of different use cases. Adobe’s first model, trained on Adobe Stock images, openly licensed content and public domain content where copyright has expired, will focus on images and text effects and is designed to generate content safe for commercial use.

Adobe Stock’s hundreds of millions of professional-grade, licensed images are among the most carefully curated in the market and help ensure Adobe Firefly won’t generate content based on other people’s or other brands’ IP. Future Adobe Firefly models will leverage a variety of assets, technology and training data from Adobe and other sources. As other models are implemented, Adobe says the company will continue to prioritise countering potential harmful bias.

Generative AI and Creators

Adobe is designing generative AI to help creators take better advantage of their skills and creativity and work faster, smarter and with greater convenience. This includes the ability for customers to train Adobe Firefly with their own collateral, generating content in their personal style or brand language.

Compensation. Adobe’s intent is to build generative AI with a potential for customers to monetise their talents, similar to what Adobe has done with Adobe Stock and Behance. Adobe is developing a compensation model for Adobe Stock contributors and will share details once Adobe Firefly is out of beta.

Open standards. Adobe founded the Content Authenticity Initiative (CAI) to create a global standard for trusted digital content attribution. The CAI has more than 900 members worldwide, and Adobe is pushing for open industry standards using the CAI’s open-source tools, which are free and actively developed through the nonprofit Coalition for Content Provenance and Authenticity (C2PA).

Through Content Credentials that CAI adds to content at the point of capture, creation, edit or generation, people will have a way to see when content was generated or modified using generative AI. Adobe and NVIDIA support Content Credentials so people can make informed decisions about the content they encounter.

A universal ‘Do Not Train’ Content Credentials tag is available for creators to request that their content isn’t used to train models. The Content Credentials tag will remain associated with the content wherever it is used, published or stored. In addition, AI-generated content will be tagged accordingly.
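
As a rough illustration of how a training pipeline might honour such a tag, the sketch below filters a set of assets by a do-not-train flag carried in each asset's metadata. It is not Adobe's implementation or a real Content Credentials reader: the manifest is a plain Python dictionary, the helper names (`make_manifest`, `may_train_on`, `filter_training_set`) and the `ai_generated` field are hypothetical, and the assertion labels are only loosely modelled on the C2PA training and data mining assertion.

```python
# Hypothetical, simplified stand-in for a Content Credentials manifest.
# Field names are assumptions loosely modelled on the C2PA
# "training and data mining" assertion, not an exact schema.

def make_manifest(title, do_not_train=False, ai_generated=False):
    """Build a toy manifest dict carrying the two tags described above."""
    use = "notAllowed" if do_not_train else "allowed"
    return {
        "title": title,
        "ai_generated": ai_generated,  # hypothetical flag for AI-generated content
        "assertions": [
            {
                "label": "c2pa.training-mining",
                "data": {
                    "entries": {"c2pa.ai_generative_training": {"use": use}}
                },
            }
        ],
    }


def may_train_on(manifest):
    """Return False if the manifest opts the asset out of generative training."""
    for assertion in manifest.get("assertions", []):
        if assertion.get("label") != "c2pa.training-mining":
            continue
        entries = assertion.get("data", {}).get("entries", {})
        entry = entries.get("c2pa.ai_generative_training", {})
        if entry.get("use") == "notAllowed":
            return False
    # In this sketch, an asset with no opt-out assertion is treated as usable.
    return True


def filter_training_set(manifests):
    """Keep only the assets whose manifests do not carry an opt-out."""
    return [m for m in manifests if may_train_on(m)]


if __name__ == "__main__":
    assets = [
        make_manifest("portrait.jpg", do_not_train=True),
        make_manifest("landscape.jpg"),
    ]
    for m in filter_training_set(assets):
        print(m["title"])
```

Treating untagged assets as usable is a choice made for the sketch only; a real pipeline could just as reasonably exclude anything without explicit permission.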

Adobe is planning to make Adobe Firefly available via APIs on various platforms to assist integration into custom workflows and automations. www.adobe.com