Ncam and disguise have formed a partnership that incorporates the Ncam Reality real-time camera tracking system into the disguise xR workflow for mixed reality projects. The partnership makes it possible to combine LED systems, real-time rendering and point cloud tracking for virtual productions. Ncam’s accuracy and ease of use can bring more flexibility to broadcast and live event producers who want to blend CGI, video and extended reality (XR) in engaging ways.

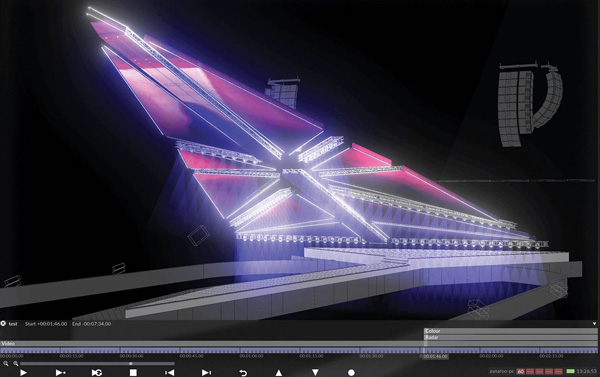

disguise developed xR as a workflow built on its own specialised servers and render system, and orchestrated by its software application, Designer. Other workflow components – render engines, 3D content creation software, camera tracking – come from third-party developers, which gives clients a lot of control over the final production.

The disguise platform helps brands and performers use immersive storytelling in their campaigns and shows by introducing AR and MR elements into live production environments, blending the virtual and physical worlds.

Using disguise Designer software

In-Camera in Real Time

The advantages of working in a virtual environment stem from the ability to work on an infinite canvas that extends the set beyond the stage. Extensions come from imagery displayed on LED screens, which is then captured in camera. Not only can you capture real lighting, reflections and shadows at the same time, but talent can also physically interact with the content.

Working with the camera tracking data, disguise servers precisely align the virtual and physical elements, bringing together the content and the LED screens. For complex productions, users can synchronise multiple render engines from a single timeline, with built-in latency compensation.

Ncam Reality can track within nearly any digital environment, on any camera and with any lens or rig, so it can be built into any type of production. This flexibility will also allow productions to quickly swap in virtual sets during remote shoots, reducing the amount of space, the shooting time and the number of staff needed onsite, and stretching budgets further.

Ncam sensor bar, mounted on a camera.

Visualising Live

In a live context, disguise xR can directly contribute to productions based on LED walls and live real-time content, producing precise live imagery on huge, complex surfaces. By adopting Ncam Reality in the workflow, clients will be able to visualise live AR, MR and XR, real-time CGI environments, set extensions and CGI elements – directly in-camera. Using Ncam with xR is expected to make virtual filming systems accessible to a wider variety of clients who need trackers that aren’t limited to a single surface or location.

Instead of the user applying markers to the floor, ceiling or set, Ncam’s tracking technique identifies patterns and areas of contrast, and uses them to generate its own 3D tracking points and point clouds in real time. It does this using a type of computer vision – that is, an ability to analyse and understand digital images of the environment gathered through the camera. In environments with changeable, flashing or strobe lighting, the system’s infrared mode is able to read IR markers instead.
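To give a rough sense of what "areas of contrast" means in practice, the sketch below picks out pixels in a small greyscale image where brightness changes sharply from one neighbour to the next. It is only an illustration and makes no claim about how Ncam Reality actually works: the function name `contrast_points`, the threshold and the use of a simple gradient are all assumptions made for this example, and a production tracker would rely on far more robust feature detection and 3D triangulation.

```python
from typing import List, Tuple


def contrast_points(image: List[List[float]], threshold: float) -> List[Tuple[int, int]]:
    """Return (row, col) of interior pixels whose local brightness gradient
    exceeds `threshold`. Hypothetical helper, not part of any Ncam API."""
    points = []
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            # Central differences approximate the horizontal and vertical gradient.
            gx = (image[r][c + 1] - image[r][c - 1]) / 2.0
            gy = (image[r + 1][c] - image[r - 1][c]) / 2.0
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                points.append((r, c))
    return points


# A 5x5 image with a vertical edge: dark on the left, bright on the right.
edge_image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
candidates = contrast_points(edge_image, threshold=0.25)
```

On a flat, evenly lit surface the function returns nothing, which is why a markerless approach of this kind depends on texture and contrast in the scene.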

Outputs

As a coherent set of 3D data, each point cloud that Ncam Reality calculates helps improve the accuracy of the system, which leads to a more robust camera track. The system's other outputs include a real-time image of the monitor video, with the tracked and keyed CGI composited in, that is routed to the camera EVF or field monitor and to the video village monitors. A labelled .fbx file and lens profile data are automatically recorded for post-production, with camera position, rotation, focus, iris and zoom, and timecode plus the captured point cloud for each take.
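The per-take data listed above can be pictured as a simple record. The sketch below is a hypothetical layout written for illustration only: the class names `TrackingSample` and `Take`, the field names, the units and the 25 fps default are assumptions, and it does not describe the contents of Ncam's .fbx or lens profile files.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class TrackingSample:
    """One frame of camera data (hypothetical layout, not Ncam's format)."""
    timecode: str    # "HH:MM:SS:FF"
    position: Vec3   # X, Y, Z
    rotation: Vec3   # three rotation angles, assumed to be in degrees
    focus: float
    iris: float
    zoom: float


@dataclass
class Take:
    """Everything recorded for a single labelled take."""
    label: str
    samples: List[TrackingSample] = field(default_factory=list)
    point_cloud: List[Vec3] = field(default_factory=list)


def frames_to_timecode(frame: int, fps: int = 25) -> str:
    """Convert a frame count to non-drop-frame HH:MM:SS:FF timecode."""
    ff = frame % fps
    total_seconds = frame // fps
    ss = total_seconds % 60
    mm = (total_seconds // 60) % 60
    hh = total_seconds // 3600
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


take = Take(label="scene01_take03")
take.samples.append(
    TrackingSample(frames_to_timecode(32), (0.0, 1.5, -3.0), (10.0, 0.0, 0.0), 2.4, 4.0, 35.0)
)
```

Keying every sample to timecode is what lets post-production line the tracking data up with the recorded footage frame by frame.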

Calibration is part of both Ncam Reality and disguise xR. Ncam tracks six degrees of freedom: the XYZ position plus three axes of rotation. Through a proprietary process it also calibrates the lens, including zoom and focus, because lenses bend the image and curve lines that are straight in the real world. Ncam measures and calibrates the lens distortion that occurs at any zoom, focus and aperture setting, allowing the graphics to precisely match the live action.
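Ncam's lens calibration is proprietary, so the following is not its method. As a general illustration of why distortion has to be measured per lens setting, the sketch uses the widely taught polynomial radial distortion model, with coefficients `k1` and `k2` that change as the lens zooms. The function names, the coefficient values and the zoom table are all assumptions made for this example.

```python
from typing import List, Tuple


def distort(x: float, y: float, k1: float, k2: float = 0.0) -> Tuple[float, float]:
    """Apply radial distortion to a point in normalised image coordinates."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort(xd: float, yd: float, k1: float, k2: float = 0.0,
              iterations: int = 20) -> Tuple[float, float]:
    """Invert `distort` by fixed-point iteration (adequate for mild distortion)."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y


def interpolate_k1(zoom: float, table: List[Tuple[float, float]]) -> float:
    """Linearly interpolate k1 from (zoom, k1) pairs sorted by zoom,
    clamping to the end values outside the measured range."""
    if zoom <= table[0][0]:
        return table[0][1]
    for (z0, k0), (z1, k1) in zip(table, table[1:]):
        if zoom <= z1:
            t = (zoom - z0) / (z1 - z0)
            return k0 + t * (k1 - k0)
    return table[-1][1]


# Hypothetical calibration table: distortion eases off as the lens zooms in.
zoom_table = [(1.0, -0.20), (2.0, -0.10)]
k1_now = interpolate_k1(1.5, zoom_table)
xd, yd = distort(0.5, 0.3, k1_now)
```

Rendered graphics either have the same distortion applied to them or the camera image is undistorted first; both approaches need coefficients that follow the lens as zoom and focus change, which is why calibration covers the whole range of settings.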

disguise’s spatial calibration system calibrates the tracking offset and lens data itself – its calibration works independently but relies on Ncam’s calibration having been completed first. A colour calibration process is also carried out, so that the world appears as one uninterrupted environment.

Partnership

Nic Hatch, CEO of Ncam, believes the COVID-19 pandemic has accelerated the transition to virtual graphics, leaving many companies wondering where to start. He said, “This partnership helps brands jump on a growing trend with trained experts and advanced tech, so they can set themselves up for a future that is only going to get more visual.”

The goal of disguise is to give customers more flexibility as they plan their productions. CCO Tom Rockhill said, “Ncam’s ability to use natural markers and IR reflectors gives clients more versatility, which means it can be used both indoors and outdoors, on an LED wall or for AR graphics in an open space. The possibilities are endless, and that is how we want our clients to feel.”

www.ncam-tech.com www.disguise.one