MetaHuman Animator accurately records and transfers a full range of expressions, looks and emotions in a performance to a digital human, ready for use in Unreal Engine 5 projects.

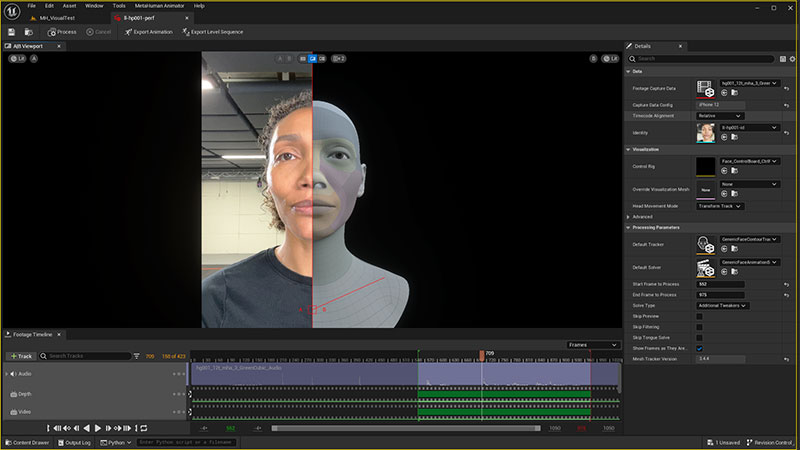

The new update to Epic Games’ MetaHuman digital human pipeline integrates facial performance capture, bringing a set of new features for animation. Users can capture an actor’s performance using an iPhone or stereo head-mounted camera system and apply the data as high-fidelity facial animation on a MetaHuman character, without relying on manual intervention.

First shown at GDC 2023, MetaHuman Animator is capable of accurately recording and transferring the full range of expressions, looks and emotions in a performance to digital humans. The technique is straightforward, and users do not need special skills to achieve effective results.

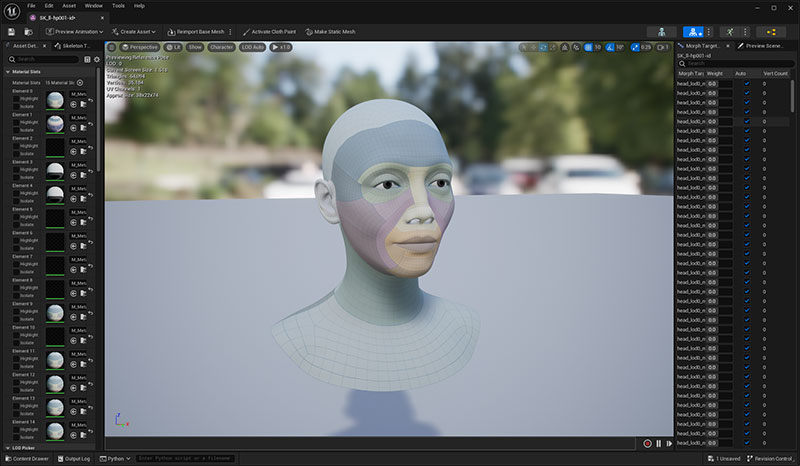

MetaHuman is Epic Games’ framework and toolset for creating and using fully rigged, photorealistic digital humans in Unreal Engine 5 projects. MetaHumans are created with MetaHuman Creator, a free tool that runs in the cloud and is streamed to a user’s browser using pixel streaming. From there, users can customise their digital avatar's hairstyle, facial features, height and body proportions.

MetaHumans are then downloaded into Unreal Engine 5 using the Quixel Bridge plugin, ready for use in projects.

Fast, Accurate Facial Animation

MetaHuman Animator now makes the process of applying real-world performances to facial animation more convenient for artists who are new to performance capture. Teams that already have a capture set-up can combine MetaHuman Animator with an existing vertical stereo head-mounted camera, and use the new features to improve their existing workflow, reduce time and effort, and gain more creative control.

Typically, it would take an animation team months to faithfully recreate every nuance of the actor’s performance on a digital character. MetaHuman Animator replaces this effort by using a 4D solver to combine video and depth data with a MetaHuman representation of the performer. The animation is produced locally using GPU hardware, making the final animation available very quickly.

Those processes occur largely in the background, though, while users focus on the camera and the recording. Once captured, MetaHuman Animator accurately reproduces the fine, individual details of the actor’s performance onto the MetaHuman character.

The control curves of the animation data are semantically correct, meaning they are positioned on the facial rig controls where an animator would expect them to be, which makes adjustments simpler. They are also temporally consistent, with smooth control transitions. Any animation created will continue to work if changes are made to the MetaHuman later.

Personalising Facial Animation

Also, the facial animation you capture using MetaHuman Animator can be applied to other MetaHuman characters, or to any character adopting the new MetaHuman facial description standard, in a few clicks. This standard is a list of Control Curves that represent facial movements of a MetaHuman. By adhering to this standard, the artist has the basic representation of a MetaHuman facial animation that is stored when baking an AnimSequence from ControlRig, or exporting from MetaHuman Animator.

As a result, you can design a character as needed, and meanwhile be certain that the facial animation applied to it will work.

That is achievable because the Mesh to MetaHuman feature can now create a MetaHuman Identity asset from as few as three frames of video, along with depth data captured with an iPhone or reconstructed using data from a vertical stereo head-mounted camera. Mesh to MetaHuman turns a custom scan, sculpt or model into a MetaHuman Identity, and is used when creating a MetaHuman from a 3D character mesh built in an external application. MetaHuman Animator builds on that ability, enabling MetaHuman Identities to be created from a small amount of captured footage.

This process personalises the solver to the actor, meaning that MetaHuman Animator can produce animation that works on any MetaHuman character, even using the audio to produce realistic tongue animation. MetaHuman Animator also supports timecode, which allows the facial performance animation to be aligned with body motion capture and audio to deliver a full character performance.
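To illustrate the idea behind timecode alignment, here is a minimal Python sketch. It is not Unreal Engine's or MetaHuman Animator's API: the function names are hypothetical, and it assumes non-drop-frame `HH:MM:SS:FF` timecode with both recordings running at the same frame rate. It converts each take's starting timecode to an absolute frame count and returns how many frames the facial take must be shifted to line up with the body take.

```python
def timecode_to_frames(timecode: str, fps: int) -> int:
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    if frames >= fps:
        raise ValueError(f"frame field {frames} is out of range for {fps} fps")
    total_seconds = (hours * 60 + minutes) * 60 + seconds
    return total_seconds * fps + frames


def alignment_offset(face_start: str, body_start: str, fps: int) -> int:
    """Frames by which the facial take starts after the body take.

    A positive value means the facial recording began later, so its first
    frame belongs that many frames into the body motion capture take.
    """
    return timecode_to_frames(face_start, fps) - timecode_to_frames(body_start, fps)


if __name__ == "__main__":
    # Hypothetical start timecodes for a facial take and a body mocap take.
    offset = alignment_offset("01:00:10:12", "01:00:08:00", fps=30)
    print(f"Place the facial animation {offset} frames into the body take")
```

Because both recordings carry the same clock, the offset comes from arithmetic on the start timecodes alone, with no need to match the takes by eye or by audio.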

iPhone to Unreal Workflow

MetaHuman Animator can be used with just an iPhone (12 or above) and a desktop PC. An update to the Live Link Face iOS app lets it capture raw video and depth data, which is then ingested directly from the device into Unreal Engine for processing.

Using MetaHuman Animator with an existing vertical stereo head-mounted camera system achieves more accurate results. But, whether an iPhone or stereo HMC is used, MetaHuman Animator can improve the speed and ease of use of capture workflows. It gives users the flexibility to choose the hardware best suited to the requirements of the shoot, and the intended level of visual fidelity.

On-set Iteration

Owing to its speed and ability to process and transfer facial animation onto any MetaHuman character, MetaHuman Animator works well for creative iteration on set. For instance, it can be used to work through various emotions, or explore a new story direction, while reviewing the results within a few minutes.

With animation data reviewed directly in Unreal Engine while you’re on the shoot, the quality of the capture can be evaluated well in advance of the final character being animated.

And because reshoots can take place while the actor is still on stage, you can get the best take on the day, in the moment, instead of facing the cost and time needed to reassemble the cast and crew later on.

Custom Characters

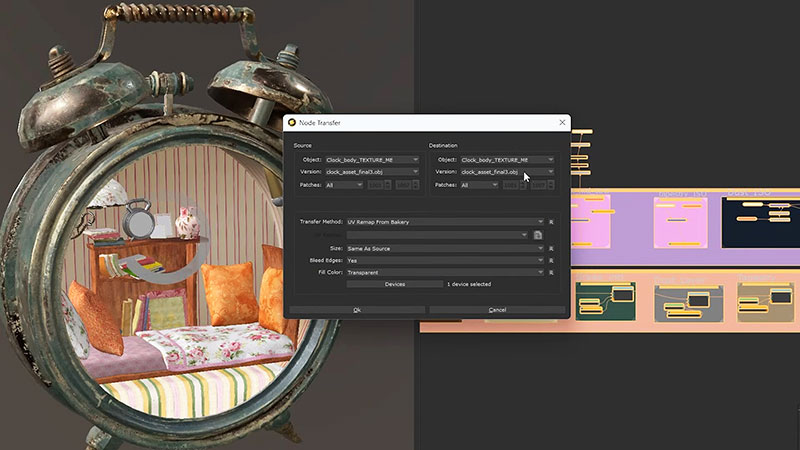

As well as introducing MetaHuman Animator, this release extends Mesh to MetaHuman, described above, so that users can now directly set the template mesh point positions.

Mesh to MetaHuman performs a fit that enables it to use any topology, but this can only approximate the volume of your input mesh. With this release, you can set the template mesh and, provided it strictly adheres to the MetaHuman topology, exactly that mesh will be rigged – not an approximation.

Combined with the ability to use DNACalib to set the neutral pose for mesh and joints, this gives users the tools needed to develop and work on custom characters.

MetaHuman Animator at 3Lateral

To show the potential of MetaHuman Animator when used on a professional film set, Epic Games' team at 3Lateral in Serbia created a short film titled ‘Blue Dot’ in collaboration with local artists. It features well-known actor Radivoje Bukvić, who delivers a monologue based on a poem by Mika Antić. The performance was recorded on a mocap stage at Take One studio, with cinematographer Ivan Šijak serving as director of photography.

In this case, MetaHuman Animator was used with a stereo head-mounted camera system and traditional filmmaking techniques, with minimal intervention beyond the MetaHuman Animator results.

For a better understanding of the software, visit the MetaHuman hub on the Epic Developer Community. www.epicgames.com