Faceware Studio Matches Animation to Live Performance in Real Time

Faceware Studio is a new software platform made to create life-like, expressive facial animation in real time.

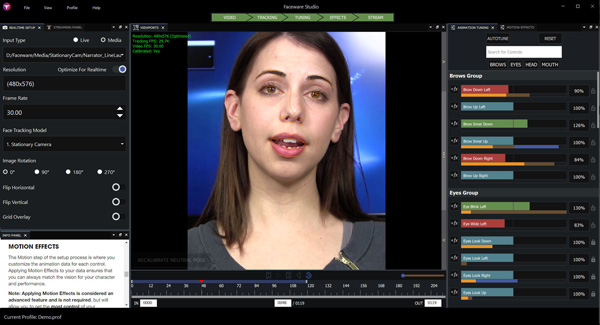

The Faceware Studio streaming workflow starts with single-click calibration, then tracks and animates faces in real time using machine learning and neural network techniques. This approach is entirely different to Faceware’s earlier LIVE product, and completely replaces it. Artists are also given tools to tune and tailor the animation to an actor’s unique motions, and a Motion Effects system to gain further control over the final performance.

The data can then be streamed to Faceware-supported plugins in Unreal Engine, Unity and MotionBuilder for live streaming – or recording in-engine – on an avatar. Maya will be added to the software list later.

After first developing LIVE, Faceware Technologies said they decided to re-build their real-time facial animation software to better match the way people create and work with the real-time motion data. A machine learning approach was adopted to improve the quality and robustness of the tracking and animation, and give better results across more types of cameras and capture situations. For example, users can work with both live video and pre-recorded media.

Faceware’s neural network recognises the actor’s face from either live video or pre-recorded media and develops a profile for that actor. The system’s Animation Tuning is used to visualise and adjust the profile. Because each person’s face is different, Studio gives feedback and supplies the tools needed to tailor the animation to match the actor’s performance. The application has a customisable user interface with docking panels and saveable workspaces.

Motion Effects is a system of controls for the real-time data stream. The Effects are used to manipulate the data so that it performs exactly as required, and to keep direct control over the final animation. You can also create custom Python effects. From there, you can animate character rigs by streaming the facial animation data to game engines or MotionBuilder, where the rigs reside, and mapping Studio’s controls to your custom avatar.
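To give a sense of the kind of per-frame manipulation an effect might apply, here is a minimal Python sketch that smooths jittery shape values across frames. It does not use Faceware’s own Python effect interface, which is not described here: the `smooth_frames` function, the dictionary-per-frame layout and the `jaw_open` shape name are all assumptions made for illustration.

```python
from typing import Dict, Iterable, Iterator

# Hypothetical frame layout: one dict per frame, mapping a shape name to its value.
Frame = Dict[str, float]


def smooth_frames(frames: Iterable[Frame], alpha: float = 0.5) -> Iterator[Frame]:
    """Exponentially smooth each shape value across successive frames.

    alpha close to 1.0 follows the raw data closely; smaller values smooth more.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in the range (0, 1]")
    previous: Frame = {}
    for frame in frames:
        smoothed: Frame = {}
        for name, value in frame.items():
            # The first time a shape appears, there is nothing to blend with.
            prior = previous.get(name, value)
            smoothed[name] = alpha * value + (1.0 - alpha) * prior
        previous = smoothed
        yield smoothed


# Illustrative data only: a jaw that snaps open in a single frame.
raw = [{"jaw_open": 0.0}, {"jaw_open": 1.0}, {"jaw_open": 1.0}]
smoothed_frames = list(smooth_frames(raw, alpha=0.5))
```

With these made-up values the jaw eases open over several frames instead of jumping, which is the sort of trade-off between responsiveness and stability that an effect of this kind would let an artist control.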

Through this process, Studio makes it possible to produce interactive events, work in virtual production and create large-scale content.

Working with Faceware Studio starts with a real-time set-up. Via the Realtime Setup panel, you select the camera, image sequence or pre-recorded video to track, and then adjust frame rate, resolution and rotation, and choose the Stationary or Professional Headcam model depending on your input. Calibration is done in the Tracking Viewport, where the media is displayed as you work. Users can see their facial animation from any angle and use the Timeline and media controls to pause, play and scrub through media.


The Animation Tuning panel lists each facial shape and its corresponding value as the face is being tracked. Coloured, moving graphic bars give instant feedback about the motion of each shape, with sliders to increase or decrease each one’s influence. The software’s jaw positioning now uses the same technique Faceware developed for their Retargeter application, and can be used to create fast, accurate lip sync animation in real time.
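The effect of a tuning slider can be pictured as a per-shape multiplier applied to each tracked value. The Python sketch below is only an illustration of that idea, not Faceware’s implementation: the `apply_tuning` function, the shape names and the assumption that values are normalised to the range 0 to 1 are all hypothetical.

```python
from typing import Dict


def apply_tuning(frame: Dict[str, float], gains: Dict[str, float]) -> Dict[str, float]:
    """Scale each shape value by its gain, then clamp to the assumed 0..1 range.

    Shapes without an entry in `gains` pass through unchanged (gain of 1.0).
    """
    tuned = {}
    for name, value in frame.items():
        scaled = value * gains.get(name, 1.0)
        tuned[name] = min(1.0, max(0.0, scaled))
    return tuned


# Illustrative values only: exaggerate the jaw, leave the brow untouched.
tracked = {"jaw_open": 0.6, "brow_up": 0.5}
tuned = apply_tuning(tracked, {"jaw_open": 2.0})
```

Here the jaw value is doubled and then capped at the top of the assumed range, while the brow is left as tracked, mirroring how one slider can be raised without affecting the other shapes.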

The new software is available now to try or buy from the company’s website, facewaretech.com.