In September, Digital Domain announced that Masquerade 2.0, the next version of its in-house facial capture system, was ready for use in client projects as part of the company's asset creation process.

The software has been redesigned to make it practical to build and animate feature film-quality characters for games, TV programming and commercials. The same techniques and systems used to create Thanos in ‘Avengers: Infinity War’ can now be applied to short-form projects, bringing the fine details and emotion of an actor’s performance to home and mobile screens.

Unlike methods that require long development stages, Masquerade 2.0 can deliver a photorealistic 3D character and many hours of performance within a few months. Using machine learning, Masquerade has now been trained to capture expressive details accurately without limiting an actor’s movement on-set. This freedom has helped Digital Domain develop emotive characters for projects over several years, including major Hollywood movies, real-time TED talks and TIME Studios’ recent recreation of Dr Martin Luther King Jr.

Problem-Solving Approach

Dr Doug Roble and Darren Hendler are the main developers in Digital Domain’s Digital Human Group and talked to Digital Media World about their team’s R&D over the past several years. Two years ago, they introduced Masquerade 1.0 as part of the company’s services. They had found that traditional facial solvers were still only capable of producing a muted version of an actor’s face, and could not overcome the core challenges of accuracy and completeness in motion data.

Doug and Darren took a problem-solving approach to the task of transferring a performance from a real person to a digital character or creature. ‘Avengers: Infinity War’ was a key project for the team during development, when their client Marvel was very keen to see actor Josh Brolin’s performance – exactly, specifically and in full detail – in their character Thanos. Their work to solve that problem resulted in Masquerade 1.0.

“We worked out a series of techniques to create Thanos and characters like him. First, a high-resolution scan of the actor’s face was captured while he sat and performed a full range of actions and expressions,” Darren said. “We recorded the motion data with a capture system – for Josh Brolin we used Disney Research Studios’ Medusa motion capture system – and from those moves, produced a 4D tracked facial performance.

Capturing in Context

“The data coming out at that stage was very accurate but, because the actor himself was constrained, sitting alone without sets or other characters to interact with, we knew it wasn’t going to be his best performance. So we went one step further and recorded his performance on-set, while he wore a head-mounted camera (HMC) system and markers.”

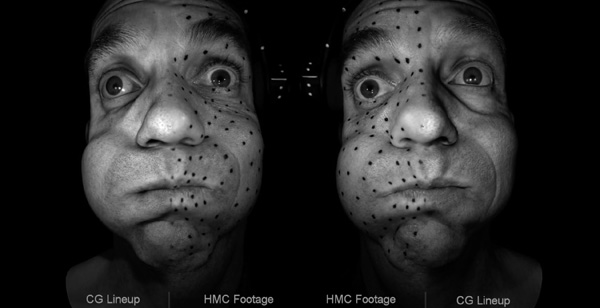

By the time Masquerade 1.0 was released, they had systems in place to combine the actor’s generic facial data (modified where necessary for consistency), the high-res scans and the HMC tracking data. All of this data contributed to the final performance: the moving 4D version of the actor’s face. “At that point, we were clearly seeing much of the subtlety of his performance, and could transfer that data to the Thanos character and hand it off to the animators, effectively allowing the animation team to start at a much more advanced point in their character work,” said Darren.

However, human intervention was still an essential part of the final result. The team still needed to tweak and adjust the model to avoid losing the intention or personality of the performance. Doug said, “When we are on set tracking a face in the HMC, the amount of data collected is relatively small. The markers are there for robustness – making sure that at least these points will be visible and anchored in the recording.

Motion vs Performance

“Markers are easy to capture, regardless of set lighting, an actor’s uncontrolled motion or myriad other variables on set, like explosions, water and fires. Those markers’ data is mapped into the very familiar space of the actor’s high-res facial scans, so that at all times and in all circumstances, you know where all the other points will be. From there, we can automatically derive the intermediate details – wrinkles, crow’s feet and so on – all tied to and exactly matching the performance at every moment.

“But in fact, only about 150 markers are used. How can we capture all the subtleties of a facial performance with only 150 markers, or any feasible number of markers, for that matter? That problem is what Masquerade 2.0 is overcoming. We have lots of data about how that person moves his face generically from the scan and seated recording exercise, but that is not the same as the performance of his character on set.”
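The article does not describe how Masquerade maps markers into the space of the scans, but the idea in Doug’s explanation can be illustrated with a toy linear model. The sketch below assumes, purely for illustration, that the actor’s scans have been reduced to a neutral shape plus a few expression deltas (a blendshape model), flattened into lists of coordinates. It fits the delta weights to the handful of tracked marker coordinates by least squares and then evaluates every coordinate of the dense face. The functions `solve_linear`, `fit_weights` and `reconstruct` are hypothetical names, and this is not Digital Domain’s implementation.

```python
# Hypothetical sketch: recover a dense face from a few tracked marker coordinates,
# ASSUMING a linear blendshape model (neutral scan + weighted expression deltas).
# This is an illustration, not Masquerade's actual method.


def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy; inputs untouched
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_weights(neutral, deltas, marker_ids, observed):
    """Least-squares blendshape weights that best explain the observed marker coordinates."""
    # One design-matrix row per tracked coordinate, one column per expression delta.
    rows = [[d[i] for d in deltas] for i in marker_ids]
    targets = [observed[k] - neutral[i] for k, i in enumerate(marker_ids)]
    k = len(deltas)
    # Normal equations: (A^T A) w = A^T y
    ata = [[sum(r[p] * r[q] for r in rows) for q in range(k)] for p in range(k)]
    aty = [sum(r[p] * t for r, t in zip(rows, targets)) for p in range(k)]
    return solve_linear(ata, aty)


def reconstruct(neutral, deltas, weights):
    """Dense face: neutral plus weighted expression deltas, for every coordinate."""
    return [n + sum(w * d[i] for w, d in zip(weights, deltas))
            for i, n in enumerate(neutral)]


# Toy data: two vertices in 3D, flattened to six coordinates.
neutral = [0.0] * 6
deltas = [[1.0, 0.0, 2.0, 0.0, 0.0, 1.0],   # hypothetical "smile" delta
          [0.0, 1.0, 0.0, 3.0, 1.0, 0.0]]   # hypothetical "brow raise" delta
marker_ids = [0, 1, 3]  # only three coordinates are tracked on set
true_w = [0.5, 2.0]
observed = [sum(w * d[i] for w, d in zip(true_w, deltas)) for i in marker_ids]
w = fit_weights(neutral, deltas, marker_ids, observed)
dense = reconstruct(neutral, deltas, w)
print(dense)  # [0.5, 2.0, 1.0, 6.0, 2.0, 0.5]
```

A linear fit like this can only reproduce expressions spanned by the chosen deltas; any subtlety outside that span is lost.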

Doug had already begun to recognise how machine learning could become useful by taking advantage of the robustness of those 150 markers. Furthermore, the team’s confidence in Masquerade 1.0 led to a new goal for version 2.0.

After recording the actor on-set, they wanted custom machine learning processes to take over: automatically recognising where the markers are, tracking them through space and producing an accurate, high-fidelity moving mesh of that performance in every frame. They also wanted to achieve this with as little post work as possible, producing a result that is useful for more applications.
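As a rough illustration of the tracking step, the sketch below uses a classical nearest-neighbour heuristic rather than machine learning: each labelled marker from the previous frame is matched to the closest unclaimed detection in the current frame, and markers with no detection within a maximum jump distance are treated as lost. The function name `propagate_labels`, the 2D image coordinates and the `max_jump` threshold are all assumptions for illustration; the article does not describe Digital Domain’s learned approach in this detail.

```python
import math

# Hypothetical sketch: a classical nearest-neighbour baseline for carrying marker
# labels from one frame to the next. NOT the machine learning approach in Masquerade.


def propagate_labels(prev, detections, max_jump):
    """Greedy nearest-neighbour labelling of one frame's marker detections.

    prev:       dict of marker label -> (x, y) position in the previous frame
    detections: list of unlabelled (x, y) blobs found in the current frame
    max_jump:   largest plausible movement between frames (hypothetical threshold)
    Returns a dict of label -> (x, y); markers with no match are left out.
    """
    # Every (distance, label, detection index) candidate, closest first.
    candidates = sorted(
        (math.dist(p, d), label, j)
        for label, p in prev.items()
        for j, d in enumerate(detections)
    )
    labelled, used = {}, set()
    for dist, label, j in candidates:
        if dist > max_jump:
            break  # all remaining candidates are even farther away
        if label in labelled or j in used:
            continue  # marker or detection already claimed
        labelled[label] = detections[j]
        used.add(j)
    return labelled


# Toy example: two labelled markers and three blobs, one of them spurious.
prev = {"brow_l": (10.0, 5.0), "lip_c": (12.0, 20.0)}
frame = [(12.5, 21.0), (10.4, 5.3), (40.0, 40.0)]
tracked = propagate_labels(prev, frame, max_jump=3.0)
print(tracked)  # {'brow_l': (10.4, 5.3), 'lip_c': (12.5, 21.0)}
```

A greedy matcher like this breaks down under occlusion, fast motion or markers that drift close together, which are exactly the on-set conditions a learned tracker would need to handle.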

A Learning Curve

This goal is ambitious. To pursue it, the Digital Human Group has its own ML engine, which the team uses to build custom machine learning applications with PyTorch and TensorFlow, two open-source machine learning frameworks with Python interfaces that represent numerical computation on data as graphs. All of the machine learning software written into 2.0 is original and tailored to train the processes in Masquerade specifically for character automation.

Doug has been pursuing the potential of machine learning for this purpose for about seven years. “I’ve been pushing our developers to look at problems from a machine learning perspective,” he said. “The difference shows in 2.0 and how we talk about it. We take all of our high-resolution data as a starting point and then learn from it HOW the face moves. That way, even with only 150 points we can predict very precisely what the face will look like at any moment, down to the same detail as the recording, all due to what we have learned through the ML process. The ability to learn makes it better than re-creation alone.”
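Doug’s description of learning how the face moves from high-res data can be sketched with the simplest possible learned model, linear ridge regression, standing in for the custom networks the team builds. The sketch assumes training frames in which both the sparse marker coordinates and the dense mesh coordinates are known (as they would be from a high-res capture session), learns a linear map from one to the other, and then predicts a dense shape from markers alone. The names `fit_ridge`, `predict` and `solve_linear` and the regularisation value are hypothetical.

```python
# Hypothetical sketch: learn a map from sparse marker coordinates to dense mesh
# coordinates with linear ridge regression. A deliberately simple stand-in for
# the custom machine learning models described in the article.


def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy; inputs untouched
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_ridge(markers, dense, lam=1e-6):
    """Learn dense ~= W [markers, 1] from training frames by ridge regression.

    markers: list of per-frame marker coordinate tuples (the sparse input)
    dense:   list of per-frame dense mesh coordinate tuples (the target)
    Returns one weight vector per dense coordinate.
    """
    feats = [list(m) + [1.0] for m in markers]  # append a constant bias feature
    k = len(feats[0])
    # Regularised normal matrix X^T X + lam * I, shared by every output column.
    xtx = [[sum(f[p] * f[q] for f in feats) + (lam if p == q else 0.0)
            for q in range(k)] for p in range(k)]
    weights = []
    for out in range(len(dense[0])):
        xty = [sum(f[p] * y[out] for f, y in zip(feats, dense)) for p in range(k)]
        weights.append(solve_linear(xtx, xty))
    return weights


def predict(weights, markers):
    """Predict every dense coordinate from one frame of marker coordinates."""
    feat = list(markers) + [1.0]
    return [sum(w * f for w, f in zip(col, feat)) for col in weights]


# Toy training set: two marker coordinates drive three dense coordinates.
train_markers = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
train_dense = [(m0 + m1, 2 * m0, m1 - 1) for m0, m1 in train_markers]
weights = fit_ridge(train_markers, train_dense, lam=1e-8)
prediction = predict(weights, (2.0, 3.0))  # close to [5.0, 4.0, 2.0]
```

Here the toy relationship is exactly linear, so the learned map recovers it; real facial motion is not, which is why richer learned models are worth the effort.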

The biggest change in 2.0, therefore, is not accuracy or level of detail. It is these new machine learning techniques, which are bringing the concept of an automatic character pipeline closer to reality and will continue to do so.

Automated Motivation

Darren distinguished between an automated process and a real-time system. “Real-time is great. We love real-time, but our motivation here is different. We still want and need processing time, relying on computers to organise the data into the highest quality possible. What we want to automate is the animation timing and groundwork. Speed is important, but more than saving time, we want to save the animator’s work for the finessing and storytelling stage, when they can really bring the character to life,” he said.

Masquerade 1.0, as it was used for characters in ‘Avengers: Infinity War’ and ‘Avengers: Endgame’, worked well for character performances. But the team now wanted to take that system apart and give it the ability to produce a working character, closely in line with the actor’s performance, without having to stop after each shot, assess what had been captured and adjust markers and HMCs. For 2.0 they demanded more automation.

A major factor prompting this development direction is the progress emerging in gaming. Games are featuring better characters, and now need better, more compelling performances to take advantage of them. Movies, on the other hand, have a limited amount of footage; a film producer has a single, linear story. Games have scope to use more footage, and more kinds of footage.

Inside Out

If you are not completely at ease with the idea of so much automation on film sets, recall that the actor is at the heart of what Doug and Darren are striving for. “An interesting process we went through with Thanos was to rehearse with Josh and the directors before the shoot, take that performance, transfer it to the character and show it to them,” Darren said.

“We could say, ‘This is what you are doing on set, and this is how it will look in the CG asset. This is the Thanos you have created – so far.’ It gives the actor a chance to modify and improve his work once the real shoot gets underway. In effect, he gets to know his character from the inside out and gains more control because he knows the subtleties of the model.”

Animators and Creativity

Animators are another group of people that Masquerade does not replace. On its own, the software can automatically produce an accurate, fully recognisable 4D version of a moving face, and that might work for certain applications. But Masquerade’s digital performance also passes through a series of processes that break it down and allow an animator to refine it. Using a facial rig for the actor, the animator has animatable parameters to work with without losing any of the detail of the face, which is the most important factor.

“Even when you have built a facial rig, your rig may not be capable of all the shapes the actor’s face can achieve,” said Darren. “So, as an initial step, Masquerade will transfer as much of the performance onto the rig as will fit. Whatever is left over, we keep as a collection of extra layers that the animator can use to adjust and correct the final performance, losing nothing.

“This approach allows scope for creativity. We want the animator to have a complete and accurate record, but also a chance to adjust with total realism. That flexibility for creative realism is especially important for transferring performances to CG characters. The animator can focus on finessing for storytelling – beyond timing and beyond laying the groundwork. Masquerade’s data is also non-proprietary, so that it can go into any other software – Maya, Unreal Engine, Unity and so on. That makes it especially useful for game work, since some games run on their own custom game engine.”
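Darren’s description of transferring as much of the performance onto the rig as will fit, and keeping the rest as extra layers, can be sketched with a linear rig. Assuming, for illustration only, that each rig control adds a fixed delta to a neutral shape, the sketch fits control values by least squares and stores whatever the rig cannot express as a residual layer. Adding the residual back reproduces the captured shape exactly, which is the sense in which nothing is lost. The names `transfer_to_rig` and `solve_linear` are hypothetical, and real production rigs are generally not linear.

```python
# Hypothetical sketch: split a captured shape into rig controls plus a residual
# corrective layer, ASSUMING a linear rig (neutral + control-weighted deltas).


def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy; inputs untouched
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def transfer_to_rig(neutral, rig_deltas, target):
    """Split a captured shape into rig control values and a residual layer.

    neutral:    the rig's rest shape, as a flat list of coordinates
    rig_deltas: one flat delta list per rig control (assumed linear rig)
    target:     the captured performance shape for one frame
    """
    k = len(rig_deltas)
    offset = [t - n for t, n in zip(target, neutral)]
    # Least-squares control values via the normal equations.
    ata = [[sum(a * b for a, b in zip(rig_deltas[p], rig_deltas[q]))
            for q in range(k)] for p in range(k)]
    aty = [sum(d * o for d, o in zip(rig_deltas[p], offset)) for p in range(k)]
    controls = solve_linear(ata, aty)
    # What the rig alone produces with those control values.
    rig_shape = [n + sum(c * d[i] for c, d in zip(controls, rig_deltas))
                 for i, n in enumerate(neutral)]
    # Whatever the rig cannot express is kept as a corrective layer.
    residual = [t - r for t, r in zip(target, rig_shape)]
    return controls, rig_shape, residual


# Toy rig with a single control that cannot move the third coordinate.
neutral = [0.0, 0.0, 0.0]
rig_deltas = [[1.0, 1.0, 0.0]]
target = [2.0, 2.0, 1.0]
controls, rig_shape, residual = transfer_to_rig(neutral, rig_deltas, target)
final = [r + e for r, e in zip(rig_shape, residual)]
print(controls, residual)  # [2.0] [0.0, 0.0, 1.0]
```

The animator can then edit the control values while the residual layer preserves the detail the rig could not capture.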

On Being Real

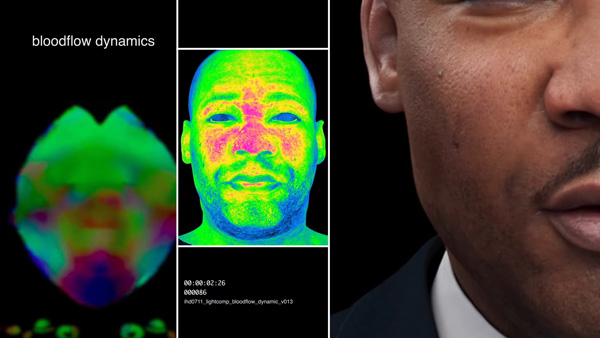

Doug reflected, “What makes someone look real? It’s a many-faceted question. One part of the answer is light and how surfaces reflect light back to the viewer. Another persistent challenge is controlling a character’s eyes. But still one of the hardest characteristics to grasp is how that person moves, overall and from moment to moment.

“Everything else may be perfect in terms of looks – skin texture, lighting, the fine detail. But often it is the motion that spoils the brilliant realism, distracting the viewer from everything that is good. Those giveaways, telling you that what you are watching is CG, are what motivated all the work from the start, leading to Masquerade. We had always felt it could be so much better.” www.digitaldomain.com